The free lunch is over and our CPUs are not getting any faster, so if you want faster builds you have to build in parallel. Visual Studio supports parallel compilation, but it is poorly understood and often not even enabled.

I want to show how, on a humble four-core laptop, enabling parallel compilation can give an actual four-times build speed improvement. I will also show how to avoid some of the easy mistakes that can significantly reduce VC++ compile parallelism and throughput. And, as a geeky side-effect, I’ll explain some details of how VC++’s parallel compilation works.

Update, December 2019: the VC++ compiler now emits ETW events to make understanding build performance easier. See here for details.

Plus, pretty pictures.

Spoiler alert: my test project started out taking about 32 s to build. After turning on parallel builds this dropped to about 22 s. After fixing some configuration errors this further dropped to 8.4 s. Read on if you want the details.

A CPU bound build

My test project consists of 21 C++ source files. It is a toy project but it was constructed to simulate real issues that I have encountered on larger projects.

Each source file calculates a Fibonacci value at compile time using a recursive constexpr function so that the 56 byte source files take a second or two to compile. I previously blogged about how to use templates to do slow compiles but the template technique is quite finicky, and the thousands of types that it creates cause other distracting issues, so all of my measurements are done using the constexpr solution (thanks Nicolas).
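For readers who have not seen the trick, here is a minimal sketch of a recursive constexpr Fibonacci of the kind described above. It is not the exact 56-byte file from the test project: the function name `fib` and the argument 20 are assumptions, chosen so that the example compiles quickly. Raising the argument makes the compiler do exponentially more work, up to whatever constexpr evaluation limits your compiler imposes.

```cpp
// Naive recursion: the number of calls grows exponentially with n,
// which is what makes the compile slow for larger arguments.
constexpr int fib(int n) {
    return n < 2 ? n : fib(n - 1) + fib(n - 2);
}

// Using the result in a static_assert forces the compiler to evaluate
// the whole recursion at compile time rather than at run time.
static_assert(fib(20) == 6765, "computed by the compiler");
```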

Since constexpr is not natively supported in Visual Studio 2013 I had to install the Visual C++ Compiler November 2013 CTP which contains new C++11 and C++14 features. VS 2015 should work also. After installing it you can select this platform toolset from the project properties in the General section, as shown to the right. If you don’t feel like installing the CTP, no worries, just follow along. The lessons apply to VS 2010 to 2013. The CTP just makes it easier to demonstrate them in a small project.

Having made a slow-to-compile project the challenge is to improve the build speed without changing the source code.

If you install the CTP and build my test project and watch Task Manager you’ll see something like the image to the right. I have Task Manager configured to show one graph for all CPUs because I think it makes it easier to see how busy my system is, and the answer in this case is not very. The screenshot is from my four-core eight-thread laptop and it looks like my CPU is about 16% busy, meaning one-and-a-bit threads are in use. So, not very much parallelism is going on and the build took about 32 seconds.

It turns out there is an easy explanation for this behavior. Parallel compilation is off by default in VS 2013!

I’m not sure why parallel compilation is off by default, but clearly the first step should be to turn it on. Be sure to select All Configurations and All Platforms before making this change, shown in the screenshot below:

If we build the debug configuration now we will get an error because Enable Minimal Rebuild is on by default and it is incompatible with multi-processor compilation. We need to turn it off. Again, be sure to select All Configurations and All Platforms before making this change.

The other reason to disable minimal rebuild is because it is not (IMHO) implemented correctly. If you use ccache on Linux then when the cache detects that compilation can be skipped it still emits the same warnings. The VC++ minimal rebuild does not do this, which makes eradicating warnings more difficult.

Turning on parallel builds definitely helps. The Task Manager screenshot to the right uses the same scale as the previous one and we can see that the graph is taller (indicating parallelism) and narrower (indicating the build finished faster, about 22 seconds). But it’s far from perfect. The build spikes up to a decent level of parallelism twice and then it settles down to serial building again. Why?

Many developers will assume that this imperfect parallelism is because the compiles are I/O bound, but this is incorrect. It is incorrect in this specific case, and it is also generally incorrect. Compilation is almost never significantly I/O bound because the source files and header files quickly populate the disk cache. Repeated includes in particular will definitely be in the disk cache. Writing the object files may take a while, but the compiler doesn’t have to wait for this. Linking might be I/O bound (especially the first link after a reboot), but compilation is virtually never I/O bound, and I’ve got the xperf traces to prove it.

So what is going on?

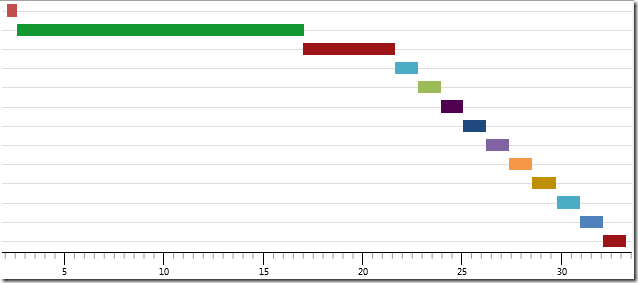

It’s time to get all scientific. Let’s grab an xperf trace of the build so that we can analyze it more closely. I recommend using UIforETW to record a trace – the default settings will be fine, or you can turn off context switch and CPU sampling call stacks to save space. Having recorded a trace I opened it in WPA, opened a Processes view and arranged the columns appropriately to make it easy to see the lifetimes of all compiler (cl.exe) processes. Let’s first look at a graph from before parallel compilation was enabled:

Each horizontal bar represents a cl.exe process. I happen to know that cl.exe is single-threaded – any parallelism has to come from multiple cl.exe processes running simultaneously. The numbers along the bottom represent time in seconds. We can see that cl.exe is invoked 13 times to compile our 21 files and we can see that there is zero parallelism.

Next let’s look at a graph of building our project with parallel compilation enabled:

Isn’t that pretty? We can see that the build is running faster (the graph is skinnier), and we can see the places where our build is running in parallel (stacked bars). But there are mysteries. What are those green and blue spikes near the top left? And why are there so many serial compilations on the right?

Diagnosing what is going on is still challenging because while we can see the compiler processes coming to life we cannot see what file is being compiled when. This is annoying. So I fixed it, ‘cause that is what programmers do!

I wrote a simple program that calls devenv.exe as a sub-process. I also modified my build-tracing batch file to add the /Bt+ flag to the compiler options. This option would frequently crash with VS 2010, but it works reliably with VS 2013 and prints things that look sort of like this:

time(c1xx.dll)=1.01458s < 5290422043 - 5292596900 > [Group3_E.cpp]

time(c2.dll)=0.00413s < 5292601054 - 5292609908 > [Group3_E.cpp]

This tells us the length of time spent in the compiler front-end and back-end for each source file. Handy. Additionally, the large numbers are the start and stop times for each stage, taken from QueryPerformanceCounter. So, my wrapper process parses the compiler output and emits ETW events that show up in my trace shortly after the compile stages finish – UIforETW automatically records those custom ETW events. It calculates the delay from when the compile stage ended to when it received the output, just in case, and puts that in the event also. This gives us an annotated trace of our build:

The pink and green diamonds at the top of the screen shot correspond to the events emitted when my wrapper program sees the /Bt+ output at the end of each compile stage. The mouse is hovering over the circled diamond, and we can read off the payload in the bottom of the tooltip:

- Source file: Group1_E.cpp

- Stage duration: 6.064306 s

- Start offset: –6.065526 s

- End offset: –0.001220375 s

That means that the event was emitted just 1.2 ms after the compile finished – close enough – and that Group1_E.cpp took over 6 s to compile, so the long green bar is the compilation of Group1_E.cpp, and it’s time to start explaining what is going on.
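The two steps the wrapper performs, parsing a /Bt+ line and turning the counter values into offsets relative to the moment the line was received, can be sketched roughly as follows. This is not the devenvwrapper source. The names `StageTiming`, `parse_bt_line` and `offsets_from_now` are hypothetical, the line format is inferred only from the two sample lines quoted above, and the counter frequency is passed in by the caller rather than queried from Windows.

```cpp
#include <cstdint>
#include <regex>
#include <string>

// One compile stage (front-end c1xx.dll or back-end c2.dll) of one file.
struct StageTiming {
    std::string stage;       // e.g. "c1xx.dll"
    std::string file;        // e.g. "Group3_E.cpp"
    double seconds = 0.0;    // duration as printed by the compiler
    std::int64_t start = 0;  // counter value when the stage started
    std::int64_t end = 0;    // counter value when the stage ended
};

// Parses a line shaped like the /Bt+ samples quoted in the post.
// Returns false for any line that does not match that shape.
bool parse_bt_line(const std::string& line, StageTiming& out) {
    // The separator between the two counters is matched loosely ([^ ]+)
    // in case formatting has changed the dash character.
    static const std::regex pattern(
        R"re(time\(([^)]+)\)=([0-9.]+)s < ([0-9]+) [^ ]+ ([0-9]+) > \[([^\]]+)\])re");
    std::smatch m;
    if (!std::regex_search(line, m, pattern)) return false;
    out.stage = m[1].str();
    out.seconds = std::stod(m[2].str());
    out.start = std::stoll(m[3].str());
    out.end = std::stoll(m[4].str());
    out.file = m[5].str();
    return true;
}

// Stage start and end expressed in seconds relative to "now".
// Both values are negative when the stage finished in the past.
struct StageOffsets {
    double start_offset_s;
    double end_offset_s;
};

StageOffsets offsets_from_now(const StageTiming& t, std::int64_t now,
                              std::int64_t ticks_per_second) {
    const double f = static_cast<double>(ticks_per_second);
    return {(t.start - now) / f, (t.end - now) / f};
}
```

The end offset is the delay between the stage finishing and the wrapper seeing the output, which is the 1.2 ms figure in the tooltip above.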

How VC++ handles multiple files

Visual Studio doesn’t invoke the compiler once per source file. That would be inefficient. Instead VS passes as many source files as possible to the compiler and the compiler processes them as a batch. If parallel processing is disabled then the compiler just iterates through them. If parallel processing is enabled and multiple files are passed in then things get interesting.

In this situation the initial compiler process does no compilation – instead it takes on the task of being the master-control-program. It spawns MIN(numFiles,NumProcs) copies of itself and each of those child processes grabs a source file and starts compiling. The child processes keep grabbing more work until there is none left, and then they exit. The master-control-program sticks around until all of the children are finished.
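To make the model concrete, here is a small simulation of one batch under the scheme just described. It is an idealized sketch, not a description of cl.exe internals: it assumes files are handed out in list order, that there is no process startup cost, and that the per-file costs are known in advance. The names `worker_count` and `simulate_batch` are made up for illustration.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <queue>
#include <vector>

// Number of child compilers, as described in the text: MIN(numFiles, NumProcs).
std::size_t worker_count(std::size_t num_files, std::size_t num_procs) {
    return std::min(num_files, num_procs);
}

// Simulates one batch: whenever a worker becomes free it grabs the next
// file in the list. Returns the elapsed time for the whole batch, in the
// same (hypothetical) units as the per-file costs.
double simulate_batch(const std::vector<double>& compile_costs,
                      std::size_t num_procs) {
    const std::size_t workers = worker_count(compile_costs.size(), num_procs);
    if (workers == 0) return 0.0;

    // Min-heap holding the time at which each worker next becomes free.
    std::priority_queue<double, std::vector<double>, std::greater<double>> free_at;
    for (std::size_t i = 0; i < workers; ++i) free_at.push(0.0);

    double batch_end = 0.0;
    for (double cost : compile_costs) {
        const double start = free_at.top();  // earliest available worker
        free_at.pop();
        const double end = start + cost;
        batch_end = std::max(batch_end, end);
        free_at.push(end);
    }
    return batch_end;
}
```

With hypothetical costs of {1, 1, 1, 1, 4} and eight processors, five workers are started and the batch takes 4 units, because the single expensive file dictates when the batch ends.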

Now this graph makes sense. The blue bar is the master-control-program – the compiler process started by Visual Studio. It doesn’t do any compilation. The other six bars are six new compiler processes that each grab a source file and go to work. We can use the generic ETW events that my wrapper inserts to determine that the short bar is from compiling CompileParallel.cpp, a mostly empty source file. The four roughly equal-length bars are from Group1_A.cpp, Group1_B.cpp, Group1_C.cpp, and Group1_D.cpp, all of which do the same compile-time calculations. The long green bar is from Group1_E.cpp which does four times as much compile-time computation.

Simple.

What is limiting parallelism?

The question now is, why doesn’t Visual Studio submit all of the source files at once? What is the limiting factor? A bit of poking around reveals the answer. The batches of files that Visual Studio submits to the compiler have to all have identical compiler options. That makes sense really – send one set of compiler options and a list of source files. The problem is that the compilations of different batches don’t overlap. Visual Studio waits for the previous batch to finish before submitting a new batch. So, the more distinct sets of command-line options you have, the more parallelism is limited. In this particular project we have:

- stdafx.cpp – this file creates the precompiled header file so it necessarily has different command-line options

- Group1_*.cpp – these files all use the precompiled header file so they create another batch

- Group2_*.cpp – these files do not use the precompiled header file so they create another batch

- Group3_*.cpp – these files use the precompiled header file but they each have a different warning disabled on the command line, so they each go in a batch by themselves
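The grouping rule in the list above can be sketched in a few lines. This is only a model of the observed behavior, not MSBuild's actual implementation, and the option strings are a simplified, hypothetical stand-in for the real command lines (/Yc and /Yu create and use a precompiled header, /wd disables a warning). The names `SourceFile`, `Batch` and `group_into_batches` are invented for the example.

```cpp
#include <string>
#include <vector>

struct SourceFile {
    std::string name;
    std::string options;  // per-file compiler options, simplified
};

struct Batch {
    std::string options;
    std::vector<std::string> files;
};

// Groups files whose option strings are identical, in first-seen order.
// Each resulting batch corresponds to one compiler invocation.
std::vector<Batch> group_into_batches(const std::vector<SourceFile>& sources) {
    std::vector<Batch> batches;
    for (const SourceFile& src : sources) {
        Batch* match = nullptr;
        for (Batch& b : batches) {
            if (b.options == src.options) {
                match = &b;
                break;
            }
        }
        if (match == nullptr) {
            batches.push_back(Batch{src.options, {}});
            match = &batches.back();
        }
        match->files.push_back(src.name);
    }
    return batches;
}

// A cut-down, hypothetical version of the test project's settings.
std::vector<SourceFile> hypothetical_project() {
    return {
        {"stdafx.cpp", "/Ycstdafx.h"},
        {"Group1_A.cpp", "/Yustdafx.h"},
        {"Group1_B.cpp", "/Yustdafx.h"},
        {"Group2_A.cpp", ""},
        {"Group3_A.cpp", "/Yustdafx.h /wd4100"},
        {"Group3_B.cpp", "/Yustdafx.h /wd4127"},
    };
}
```

Six files produce five batches here, and since batches run one after another, only the two Group1 files can ever compile at the same time.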

Making it fast

Now our task is clear. We need to minimize the number of different sets of compiler options (batches).

The warning suppression differences are easy – those can be moved from the command-line to the source file. We just have to use #pragma warning(disable) instead of /wd. Using #pragma warning(disable) is much better anyway. It makes it easier to see what warnings you are suppressing and it lets you put in comments to explain why you are suppressing them. Recommended.
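As an illustration, here is roughly what moving a /wd4100 suppression into the source file might look like. The function and the choice of C4100 (unreferenced formal parameter) are assumptions for the example, and the `_MSC_VER` guard is only there so the snippet also compiles cleanly with other compilers.

```cpp
#ifdef _MSC_VER
#pragma warning(push)
// C4100 (unreferenced formal parameter): 'context' is required by the
// callback signature, but this particular handler has no use for it.
#pragma warning(disable : 4100)
#endif

int on_event(int value, void* context) {
    return value * 2;
}

#ifdef _MSC_VER
// Restore the previous warning state so the suppression stays local.
#pragma warning(pop)
#endif
```

The push/pop pair keeps the suppression scoped to the code that needs it, which a command-line /wd switch cannot do.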

Precompiled header files are a trickier case. If we use precompiled headers then we need at least two batches, and possibly three. I’ve seen projects with four different precompiled header files which means that they have at least eight different batches. Precompiled header files are usually a net-win but it is important to understand their costs.

If you want to be certain that you don’t have custom compile settings for some files then the best thing to do is to load up the .vcxproj file into a text editor and look – it is far too easy to miss customizations when looking in project properties:

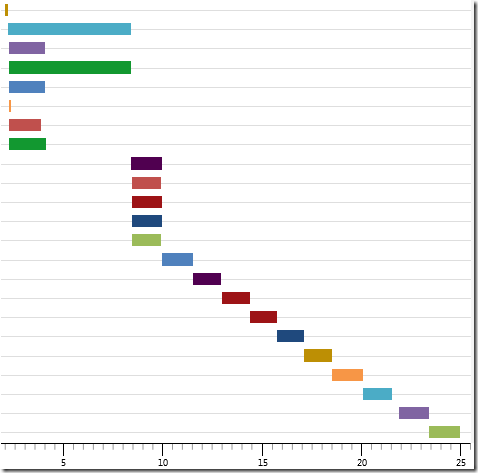
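If eyeballing the file is too tedious, a crude scan can flag the suspects. The sketch below relies on an assumption about how Visual Studio writes project files: a source file with no per-file settings appears as a self-closing `<ClCompile Include="..." />` element on one line, while a customized file gets an opening tag with child elements. It is a line-based heuristic rather than an XML parser, and the function name is hypothetical.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Returns the Include="..." names of ClCompile items that are not
// self-closing, i.e. items that appear to carry per-file settings.
std::vector<std::string> files_with_custom_settings(const std::string& vcxproj_text) {
    std::vector<std::string> result;
    std::istringstream lines(vcxproj_text);
    std::string line;
    const std::string tag = "<ClCompile Include=\"";
    while (std::getline(lines, line)) {
        const std::size_t tag_pos = line.find(tag);
        if (tag_pos == std::string::npos) continue;
        const std::size_t name_start = tag_pos + tag.size();
        const std::size_t name_end = line.find('"', name_start);
        if (name_end == std::string::npos) continue;
        // A "/>" after the file name means there are no child settings.
        const bool self_closing = line.find("/>", name_end) != std::string::npos;
        if (!self_closing) {
            result.push_back(line.substr(name_start, name_end - name_start));
        }
    }
    return result;
}
```

Any file it reports is worth a look: each distinct combination of per-file settings is a candidate for its own batch.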

With these changes made we can do a final compilation of our project, and the results are marvelous:

The screen shot to the left uses the same horizontal scale as the other ones, but it is so narrow that I can easily fit this paragraph to the right. This paragraph owes its existence to fast parallel compilation, and it would like to say thank you.

The stdafx.cpp file still compiles serially, but everything else is quite nicely parallelized, and our build times are hugely improved.

The green bar at the bottom sticks out longer than everything else because that compiler instance compiles Group1_E.cpp, the most expensive source file. These long-pole source files can be a problem, especially if they start compiling late in the build. If you have a single file that takes 20-30 seconds then that can really ruin build parallelism. This is particularly risky if you use unity builds. If you glom together too many files then you will reduce the opportunities for parallelism and you will increase the risk of creating a “long-pole” that will finish compiling long after everything else. Any unity file that takes more than a few seconds to compile is probably counter-productive, or at least past the point of diminishing returns.

Unity builds are when you include multiple .cpp files from one .cpp file in order to reduce the overhead of redundantly processing header files. In extreme cases developers include every .cpp file from one .cpp file, but that’s just dumb.

Now that we’ve tamed parallel compilation it is rewarding to look at Task Manager’s monitoring of the three different builds. The time savings and the different shapes of the CPU usage graph are quite apparent:

It could be easier

Ideally Visual Studio (technically MSBuild I suppose) should have a better scheduler. There is no reason why the compilation of different batches can’t overlap, and that could give even more performance improvements. A global scheduler could also cooperate with parallel project builds, to avoid having dozens of compiles running simultaneously. Maybe in the next version? If you don’t want to wait you could switch to using the ninja build system – that is what Chrome uses to get perfectly parallel builds on all platforms.

Or, as I suggested in You Got Your Web Browser in my Compiler!, I think Microsoft could get global compiler scheduling quite easily:

Getting a global compiler scheduler would actually be quite easy. Just get MSBuild to create a global semaphore that is initialized to NumCores and pass in a switch to the compilers telling them to acquire that semaphore before doing any work, and release it when they’re done. Problem solved, trivially.
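The shape of that idea can be shown with an in-process stand-in. A real implementation would need an OS-level named semaphore shared by every compiler process, plus the hypothetical compiler switch mentioned above; neither exists in this sketch, and the class names `CompileSlots` and `SlotGuard` are invented. What it does show is the protocol: take a slot before doing any work, give it back when done.

```cpp
#include <condition_variable>
#include <mutex>

// Counting semaphore initialized to the number of cores.
class CompileSlots {
public:
    explicit CompileSlots(int num_cores) : free_(num_cores) {}

    // Blocks until a slot is free, then takes it.
    void acquire() {
        std::unique_lock<std::mutex> lock(mutex_);
        available_.wait(lock, [this] { return free_ > 0; });
        --free_;
    }

    // Takes a slot only if one is free right now.
    bool try_acquire() {
        std::lock_guard<std::mutex> lock(mutex_);
        if (free_ == 0) return false;
        --free_;
        return true;
    }

    // Returns a slot and wakes one waiter, if any.
    void release() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            ++free_;
        }
        available_.notify_one();
    }

private:
    std::mutex mutex_;
    std::condition_variable available_;
    int free_;
};

// RAII helper: holds one slot for the duration of one compile.
class SlotGuard {
public:
    explicit SlotGuard(CompileSlots& slots) : slots_(slots) { slots_.acquire(); }
    ~SlotGuard() { slots_.release(); }
    SlotGuard(const SlotGuard&) = delete;
    SlotGuard& operator=(const SlotGuard&) = delete;

private:
    CompileSlots& slots_;
};
```

Each compile would construct a `SlotGuard` before starting, so the number of compiles in flight never exceeds the core count no matter how many projects are building.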

Ideally VC++ would emit ETW events at the end of each compilation and linking stage. This would avoid the need for my egregious hacks, and it would give better information, such as which process was working on each file. This would be much better than wrapper processes that use undocumented compile switches. Pretty please?

Of course, parallel compilation is not a panacea. For one thing, if our compiles are fast then when will we have sword fights?

For another thing, heavily parallel compilation can make your computer slow. A few dozen parallel project builds that are all doing parallel compilation may start up more compiler instances than you have processors, and that may make Outlook a bit less responsive. More on that next week.

Final notes

In this particular case we didn’t use the xperf trace for anything particularly exotic – just process lifetimes and compile-completion markers. However the magic of ETW/xperf is that we can go arbitrarily deep. If we wanted to see all disk I/O, or all file I/O, or detailed CPU usage, or all context switches, or 8,000 CPU samples/sec/core, then grabbing that information is trivial – UIforETW records all that (just check Fast sampling). All of the information goes on the same timeline and we can dig as deep as we want. It’s amazingly powerful, and in a future article I’ll show how I used it to find some surprising behavior of Microsoft’s /analyze compiler…

In many projects just turning on parallel compilation is sufficient. If you’re not doing anything pathological with your build settings and if you aren’t going unity-mad then just turn on parallel builds and enjoy the faster builds. However even in that case you may still find it useful or interesting to use /Bt+, with or without ETW tracing, to investigate your compile-times on a per-file basis.
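Once you have per-stage timings, ranking files by total compile time is a few lines of code. The sketch below assumes the timings have already been extracted from the /Bt+ output into (file, seconds) pairs; `StageSample` and `slowest_files` are hypothetical names.

```cpp
#include <algorithm>
#include <map>
#include <string>
#include <utility>
#include <vector>

// One stage timing for one file, e.g. the front-end time for Group3_E.cpp.
struct StageSample {
    std::string file;
    double seconds;
};

// Sums front-end and back-end time per file and returns
// (file, total seconds) pairs with the slowest file first.
std::vector<std::pair<std::string, double>> slowest_files(
        const std::vector<StageSample>& samples) {
    std::map<std::string, double> totals;
    for (const StageSample& s : samples) totals[s.file] += s.seconds;

    std::vector<std::pair<std::string, double>> ranked(totals.begin(), totals.end());
    std::sort(ranked.begin(), ranked.end(),
              [](const std::pair<std::string, double>& a,
                 const std::pair<std::string, double>& b) {
                  return a.second > b.second;
              });
    return ranked;
}
```

The files at the top of the list are your long poles, and the first candidates for splitting up or for a closer look at what they include.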

VS 2013 can also do parallel Link Time Code Generation (LTCG). LTCG can make for extremely slow builds so being able to parallelize it – four threads by default – is pretty wonderful. We upgraded one of our projects to VS 2013 mostly because that made LTCG linking two to three times faster. With VS 2013 Update 2 CTP 2 (a preview of VS 2013 Update 2) the /cgthreads linker option was added which lets you control the level of LTCG parallelism and get a bit more LTCG build speed – /cgthreads:8 on the linker command line means to use eight threads for code-gen instead of the default of four.

Future work could include analyzing the linker /time+ switch output to do some analysis of link times, but this data will not be as rich as the compile data.

Keeping it real

I promised at the beginning that my highly artificial test program was representative of real build-time problems that I have addressed. To make that point, here is a compiler lifetime graph from a real project, recorded before I started doing build optimization:

It was doing parallel builds but, due to seven different sets of compilation settings and some serious long-pole compiles it was far from perfectly parallelized. By breaking up some unity files and getting rid of unique compilation settings I was able to reduce the compilation time of this project by about one third.

If this information lets you improve your build speeds then please share your stories in the comments.

Hardware check

I bought my laptop three years ago. It has a four-core eight-thread CPU and 8 GB of RAM. I use it for hobby programming projects. For professional programming I would consider that core-count and memory size to be insufficient. Having more cores obviously helps with parallelism, but more memory is also crucial. For one thing you need enough memory to have multiple compiles running simultaneously without paging, but that is just the beginning. You also need enough memory for Windows to use as a disk cache. If you have enough memory then builds should not be significantly I/O bound and you can get full parallelism. My work machine is a six-core twelve-thread CPU with 32 GB of RAM. Adequate. I look forward to the eight-core CPUs coming out later this year, and 64 GB of RAM is probably in my future.

My laptop has four cores and, due to hyperthreading, it has eight threads. The speedup from having those hyperthreads is highly variable but it is typically pretty small. So, on my laptop it is unreasonable to expect more than a four times speedup from parallelism. In practice the speedup is likely to be less because there are other processes running as part of compilation, such as mspdbsrv.exe. On real projects with lots of work for mspdbsrv.exe the maximum speedups are likely to be less than the number of cores.
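The arithmetic behind that claim is simple enough to write down. The two helper names below are made up, and the inputs are the approximate build times quoted at the top of this post.

```cpp
// Speedup of a build relative to the baseline build time.
constexpr double speedup(double baseline_seconds, double improved_seconds) {
    return baseline_seconds / improved_seconds;
}

// Fraction of the ideal N-times speedup actually achieved on N cores.
constexpr double efficiency(double achieved_speedup, int cores) {
    return achieved_speedup / cores;
}
```

Going from about 32 s to about 22 s is a speedup of roughly 1.45 times, and going from 32 s to 8.4 s is roughly 3.81 times, or about 95% of the four-times ideal on four cores.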

Downloads

I’ve made available my pathological project. It requires the Visual C++ Compiler November 2013 CTP to build it. The solution file contains three projects: CompileNonParallel, CompileMoreParallel, and CompileMostParallel. These projects share source files but have different build settings – I hope that the project names are sufficiently expressive.

Also included are buildall.bat, ETWTimeBuild_lowrate.bat, and the source for devenvwrapper.exe. If you run buildall.bat from an elevated command prompt then it will register the ETW provider, then run the other files and build all three projects, creating three ETW files. The trace files used in creating this blog post are also included.

You can use ETWTimeBuild_lowrate.bat as a general purpose tool for recording lightweight build traces. Just pass in the path to your solution file and the name of the project to build and it will rebuild the release configuration of your project. For deeper profiling you can enable the sampling profiler or other providers.

The devenvwrapper project also serves as a good example of a very simple ETW provider.

The ETW files can then be loaded into WPA 8.1. You can then go to the Profile menu and apply the supplied CompilerPerformance.wpaProfile file which will lay out the data in a sensible way. Drill down into the two data sets and you too can see how parallel your builds are. The table version of the Generic Events data lets you sort by compilation time and otherwise analyze the data. Note that filters are applied so that only the compiler processes and compiler events are shown.

All of these files can be found on github and can be downloaded and used thusly:

> git clone https://github.com/randomascii/main

> "%VS120COMNTOOLS%..\..\VC\vcvarsall.bat"

> cd main\xperf\vc_parallel_compiles

> buildall.bat

Hi,

Huge props for checking this out. Do you have any general comments on what happens if you have multiple projects? A related option is “Options->Projects and Solutions->Build and Run->maximum number of parallel projects builds”.

Cheers!

We use parallel project builds as well which gives us some additional parallelism, for those times when the individual project builds are serialized, such as during linking. Due to the lack of an MSBuild global scheduler you have to choose between setting max-parallel-project-builds to 1 (avoids over-committing your CPU) and setting it to num-procs (ensure maximum parallelism). I think it’s set to three on my work machine – my attempt at a compromise.

On the subject of what msbuild/vsbuild could do better: in our case we often work with DLLs, and the only real dependency between projects is that one needs to link with the lib/dll output from the other project. We have no other dependencies like header placement or codegen within a project.

In our case we could go very wide on CPP compilation since there are no dependencies between those jobs – the dependency should only constrain the LINK phase. However, msbuild won’t start building a dependent project until the dependency has finished building completely, leading to bubbles in the pipeline. This is frustrating since it leads to longer-than-necessary builds.

I can understand why it works that way though, since they cannot assume anything. But in our case it would be awesome if it was possible to flag that only the LINK steps need to block on dependencies.

Yep, that is an excellent example of how they could better parallelize builds. In most cases they could ‘easily’ do that parallelism, but there are probably some cases where the DLL build also spits out some header files or other outputs that the dependent project needs. But, if all outputs were listed it could definitely work. Some day…

Just as different compile settings artificially cause non-overlapping ‘batches’ of work, different projects (DLLs, libs, etc.) also do. Arguably the solution is the same — don’t have ‘excessive’ numbers of different projects.

Hi Bruce,

> The other reason to disable minimal rebuild is because it is not (IMHO) implemented correctly. If you use ccache on Linux then when the cache detects that compilation can be skipped it still emits the same warnings. The VC++ minimal rebuild does not do this, which makes eradicating warnings more difficult.

Sounds like this is just another reason to treat warnings as errors.

(With or without minimal rebuild, sometimes some objects won’t need to be rebuilt, and unless you treat warnings as errors you won’t see the warnings for those objects again until dependencies change or you do a full rebuild.)

> This is particularly risky if you use unity builds.

Unity builds are nasty, and this is particularly bad because they actually do speed up compile times quite significantly! I can’t wait for ‘modules’ support, and personally hope that this can provide an alternative to this horrible hack:

http://clang.llvm.org/docs/Modules.html

> Visual Studio doesn’t invoke the compiler once per source file. That would be inefficient. Instead VS passes as many source files as possible to the compiler and the compiler processes them as a batch.

How does this compare, in practice, with running multiple versions of the Visual Studio compile command in parallel externally, with one object per compile command? (Which is what we do in our custom build setup, currently.)

Warnings as errors is indeed awesome. Some of our project teams believe in it, but others don’t. I’m a fan.

I have not measured how much of a speedup is realized by VC++’s method of compile parallelism. Presumably you are paying extra process startup time which is particularly bad on small source files. On the other hand, you can more easily keep all CPUs busy without worrying about grouping by compile options.

If you do such measurements — in an artificial or real environment — please share the results.

> I have not measured how much of a speedup is realized by VC++’s method of compile parallelism. Presumably you are paying extra process startup time which is particularly bad on small source files.

We have custom build steps similar to Thomas that spawn CL for each file, but I’m considering grouping all files that share a really large PCH into one command and using /MP, as it can not only avoid the process startup time, but the potentially expensive PCH load time. The question is whether the PCH is kept around while child CL processes are pulling tasks from the master process, so that it is not re-initialized for each file.

Adjusting our build system to test this is going to be rather complicated, so any insight you may have as to how well CL reuses the PCH between files would be appreciated.

I don’t know how much performance savings you get from having one compiler process compiling multiple files. In Chrome we use the ninja build system which executes each compile step as a separate process. One significant advantage is that this means that ninja can trivially expose exactly the amount of parallelism that you want.

FWIW, I had patches to do something similar for the Firefox build system and wound up not using them because it was just too complicated. What we wound up doing instead was generating .cpp files that #include a bunch of other .cpp files and pass those to the compiler instead, which winds up being significantly faster than compiling each file by itself (it also reduces memory usage and PGO final link time, which is nice).

A.k.a. A “unity” or “glob” build.

Ubisoft, and (I think) many other game developers use it.

E.g.:

And I’ll stick this here because I can:

http://fastbuild.org/ and don’t look back!

Hi Bruce

If you want anything approaching optimal compilation, you really need to stop using Visual Studio/MSBuild as the back-end. What you’ve touched on here is just one of many failings in the Visual Studio build pipeline.

Here’s a few others:

– inability to parallelize compilation of dependent dlls

– inability to parallelize managed code (/clr)

– oversubscription of threads (got 12 static libs, with 12 cpp files? on your 12 core machine, VS will spawn 144 processes at the same time. I’ve seen a 32 core build machine spawn over 1000 cl.exe processes in our static build!)

Incredibuild doesn’t help most of these either – it just parallelizes the stupidity of VS, and in a dll heavy application, you end up blocking on linking a DLL on 1 local CPU while 100 remote CPUs sit idle.

All of the above situations are possible to handle with optimal parallelization.

I’ve used make on Windows in the past, and that can solve these problems, but it’s not particularly user friendly (or Windows friendly). (Apologies here for the self-promotion) I didn’t want to have to setup make files again, so I wrote an open-source build system to address all of these problems, specifically aimed at game developers: http://www.fastbuild.org.

Parallel compilation in Visual Studio is a hack (2 hacks that don’t work well together) which will probably never be fixed.

Thanks Franta (and Francis). FastBuild looks quite interesting — it’s great to see it being actively worked on.

One advantage that local builds have always had over networked Incredibuild is that the shared PDB can be updated by all processes, which gives some efficiencies. How do you address this, or has it not been a problem?

Does FastBuild have any option to use .vcxproj files as input? We have project definition files which we use to create .vcxproj files, and we could adjust our generator to create FastBuild files, but a different input format does make testing with FastBuild more time consuming.

> …PDB can be updated by all processes…

FASTBuild supports both compile time (/Zi) and link-time (/Z7) pdb generation with optimal parallelization locally. So you can choose which to use based on other factors. So there are big wins to be had, even before you talk about parallelization.

To distribute over the network (or use caching), FASTBuild requires /Z7 debug format. The good news is that this is about 20% faster to compile (the pdb sharing mechanism actually just seems to block the compilation threads). Link time (with /Z7) is usually comparable (and sometimes better), but it can be worse in some cases. You can safely mix the two types of debug info (in different libraries), so we go with a mix-and-match situation (using whatever is the best overall tradeoff) for the target. In our most commonly used target, an individual dll doesn’t take too long to link, and we have reliable incremental linking on top of that, so we use /Z7 and it’s distributable/cacheable. On one of our console targets, the linker is really slow with /Zi and even worse with /Z7, so we go with /Zi for that.

> Does FastBuild have any option to use .vcxproj files as input?

FASTBuild is completely decoupled from .vcxproj files in the same way make is. It’s part of the design that you can compile on a PC without having visual studio installed. FASTBuild can generate .vcxproj files, but it can’t accept them as input. In the end, vcxproj files don’t provide enough information to know what is safe to parallelize (I believe this is why visual studio doesn’t parallelize dependent dlls for example).

You’re right that this makes testing a time consuming process – it’s on the same level as switching to makefiles in terms of the amount of work. A plug-in solution like Incredibuild is much faster to set up, but then again once set up FASTBuild smokes Incredibuild 🙂 (a local compile is faster for our primary target on your local PC than Incredibuild is on the whole worker pool 🙂 )

One project using FASTBuild is generating projects from their own custom format. For the project I am working on, we’re using hand generated scripts. It’s workable either way with the advantages/drawbacks you’d probably expect.

(sorry for the thread-necro, but I couldn’t find any forums for FASTBuild)

I understand the desire to keep FASTBuild independent of VS, but I think a script to convert from sln+vcxproj (and ideally any custom targets, but that would be a stretch goal) to bff would massively increase take-up of the project. Presumably the insufficient information would only result in a non-optimal build, not a broken one, as long as the script generates equivalent rules to MSBuild? This could still give a big win with distributed compilation.

Even if the script got 90% of the work done, with some hand-tweaking required, it would make migration far more attractive.

I’d be tempted to try myself, unless there are fundamental incompatibilities.

Apologies to Bruce also for the side-channel – working on getting some forums for FASTBuild 🙂

Conversion is possible, but….

The difficulty of conversion from Visual Studio to FASTBuild (or anything for that matter) varies greatly depending on the complexity of the solution/project/property sheet setup, but my experience is that any real-world VS project is full of dragons in the configuration that make it difficult to get something across that is both fast and reliable. This is even before the more esoteric stuff like hand-edited project files containing conditional statements that even Visual Studio doesn’t handle in a consistent/reliable way. And this is just the stuff I know about…..

There are some incompatibilities – Visual Studio/MSBuild allows you to define two targets that build the same thing for example, something FASTBuild always considers to be an error, but I like to think anything sensible has a correlation.

I could see a conversion script with enough functionality for a given project being doable, but to make something robust for even a general set of use-cases would be a project in itself. I plan to spend this time on FASTBuild itself, but I would happily support anyone who wants to undertake this job 🙂

Thanks for the great article Bruce! I had the same experience as you in the past. As Stefan mentioned we also had the stall when linking, but this was never much of a problem for us as we had lots of smaller projects with very loose coupling, so these link periods weren’t much of a concern. It is also worth mentioning that we did not use PCHs or unity builds (I agree with Thomas that it’s a nasty hack and I too can’t wait for module support). On our engine, we would do a full rebuild of around 400 projects in just over 6 minutes.

While MSbuild is not perfect, as you’ve shown with a bit of work and a sensible project/code structure, it is at least in my (naïve) opinion “good enough”. I think there are bigger gains to be made from better project structure and code dependencies. For us at least, the biggest compilation performance issues were based on bad code/project setup (unnecessary includes, no fwd declarations, unneeded project references, etc…) rather than MSbuild.

Do you have a source for your statement “VS 2013 can also do parallel Link Time Code Generation (LTCG)”? We’re working on getting VS 2013 rolled out on our build farm, that would be huge for our PGO build times.

I found out about parallel LTCG from my VC++ contacts. I then did experiments on one of our projects where we were having 15-20 minute LTCG link times for arbitrarily small changes with VS 2010. Upgrading to VS 2013 gave us a 2x to 3x speedup on LTCG link times and task manager shows the beautiful spike of parallelism. /cgthreads gives finer control and modest additional speedups if you have more than four cores.

The entire LTCG link isn’t parallelized, which is why six code-gen threads ‘only’ gave a 2x to 3x improvement. If they can parallelize the prologue and epilogue work then LTCG build speeds could double or triple again (dream…).
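The "2x to 3x from six threads" observation can be turned into a rough estimate with Amdahl's law. The sketch below is only an illustration: it assumes the whole speedup comes from the code-gen threads and that the VS 2010 baseline was effectively single-threaded, neither of which is confirmed above. The function names are made up for this example.

```cpp
#include <cassert>

// Amdahl's law: overall speedup when a fraction p of the work is spread
// across `threads` threads and the rest stays serial.
double amdahl_speedup(double p, int threads) {
    assert(p >= 0.0 && p <= 1.0 && threads >= 1);
    return 1.0 / ((1.0 - p) + p / threads);
}

// Inverse of the above: the parallel fraction implied by an observed
// speedup on a given number of threads.
double parallel_fraction(double speedup, int threads) {
    assert(speedup >= 1.0 && threads > 1);
    return (1.0 - 1.0 / speedup) / (1.0 - 1.0 / threads);
}
```

Under those assumptions, `parallel_fraction(2.0, 6)` and `parallel_fraction(3.0, 6)` come out at about 0.6 and 0.8, so roughly 20% to 40% of the link would still be serial work, which is consistent with the prologue and epilogue being the remaining bottleneck.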

E-mail me if you want more details.

Did you invent a time machine? (Files were updated on April 25th).

I didn’t invent my time machine, but it sure is handy to have it. But, I try not to show it off so I updated the files again, this time not in the future, to add support for spaces in project names.

Pingback: You Got Your Web Browser in my Compiler! | Random ASCII

I finally got around to installing VC2013 locally and doing comparison PGO builds of Firefox with it and VC2010. VC2010 takes 156 minutes to do a PGO build of Firefox on my machine, and VC2013 takes 63 minutes. Wow! This is on a several-year-old Core i7 930 with 8GB of ram. This should be a nice win for our build farm!

It’s a bit weird to be excited about a build that ‘only’ takes 63 minutes, but that is a nice win. If you have more than four cores then try /d2-threads8 (RTM/Update1) or /cgthreads:8 (CTP of Update 2) to request 8 (or however many you want) code-gen threads. This can give a modest additional speedup on the link/code-gen portion.

The CTP of Update 2 offers some other build throughput changes so it might be worth trying.

Reblogged this on Alexander Riccio and commented:

Random ASCII always writes brilliant in-depth analyses!

Very good read!

I personally prefer the “make” approach of specifying “--jobs=N” to have it spawn a number of processes to build things in parallel. Since make knows the whole dependency graph of your project (assuming your Makefile is correct), it can very efficiently schedule parallel build targets for you.
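To make the dependency-graph point concrete, here is a minimal sketch of how a scheduler can derive parallelism from the graph alone. It is not how make is implemented (make hands out jobs one at a time as they become ready); it simply groups targets into "waves" whose members do not depend on each other. `DepGraph` and `build_waves` are hypothetical names for this illustration.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Maps each target to the targets it depends on.
// Assumption: every target, including leaf inputs, appears as a key.
using DepGraph = std::map<std::string, std::vector<std::string>>;

// Groups targets into waves. Everything in a wave depends only on targets
// from earlier waves, so a wave's members can all be built in parallel.
// Targets that are part of a dependency cycle never become ready and are
// left out of the result.
std::vector<std::vector<std::string>> build_waves(const DepGraph& graph) {
    std::map<std::string, int> remaining;  // unbuilt dependencies per target
    std::map<std::string, std::vector<std::string>> dependents;
    for (const auto& entry : graph) {
        remaining[entry.first] = static_cast<int>(entry.second.size());
        for (const auto& dep : entry.second) {
            assert(graph.count(dep) == 1);  // see the assumption above
            dependents[dep].push_back(entry.first);
        }
    }

    std::vector<std::string> ready;
    for (const auto& entry : remaining) {
        if (entry.second == 0) ready.push_back(entry.first);
    }

    std::vector<std::vector<std::string>> waves;
    while (!ready.empty()) {
        waves.push_back(ready);
        std::vector<std::string> next;
        for (const auto& done : ready) {
            for (const auto& target : dependents[done]) {
                if (--remaining[target] == 0) next.push_back(target);
            }
        }
        ready = next;
    }
    return waves;
}
```

For a graph where `app.exe` depends on `a.obj` and `b.obj`, this yields two waves: both object files first, then the link step.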

When I’m working on a project on Windows, I generally install a bunch of the Cygwin tools (such as make, find, grep, xargs, etc..) and run the Visual Studio provided “vcvarsall.bat” to get the visual studio tools in my PATH. I can then have a Makefile that invokes cl.exe to do the builds.

Between these tools and GNU Emacs for Windows, I have a nice little Unix emulation layer 🙂

I’m sure this setup is missing some of the bells and whistles from the full-blown Visual Studio experience, but it’s fast and it serves me well.

I work on Google Chrome now and we use the ninja build system. Like ‘make’ this can (and by default does) schedule the exact correct number of processes. My understanding is that ninja has some performance benefits compared to make, especially for incremental builds on large projects, but I don’t know the details.

Cross-linking with:

GoingNative 35: Fast Tips for Faster Builds! – http://channel9.msdn.com/Shows/C9-GoingNative/GoingNative-35-Fast-Tips-for-Faster-Builds

I am facing the following issue related to “INCREDIBUILD”. When I build my project without “INCREDIBUILD”, the “__FILE__” macro returns a relative path. When I build the project with “INCREDIBUILD”, the macro returns an absolute path.

In project configuration, flags “/FC” and “/ZI” have already been disabled.

It may be that Incredibuild overrides those flags, as part of the modifications that they have to make to allow remote compilation. I’d follow up with Incredibuild support.
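If the flags cannot be controlled, one possible workaround is to stop depending on the exact form of `__FILE__` and reduce it to the file name yourself. The sketch below is an assumption-laden illustration, not a fix for Incredibuild's behaviour: it requires a compiler with C++14 relaxed constexpr, and `file_basename` and `SHORT_FILE` are names invented here.

```cpp
#include <cassert>

// Returns a pointer to the file-name part of a path, accepting both '/'
// and '\\' as separators. Being constexpr, it can be evaluated at compile
// time when given a string literal such as __FILE__.
constexpr const char* file_basename(const char* path) {
    const char* base = path;
    for (const char* p = path; *p != '\0'; ++p) {
        if (*p == '/' || *p == '\\') base = p + 1;
    }
    return base;
}

// Hypothetical convenience macro: the same result whether the compiler
// expands __FILE__ to a relative or an absolute path.
#define SHORT_FILE (file_basename(__FILE__))
```

With this, `SHORT_FILE` yields something like `main.cpp` for both `src\main.cpp` and `c:\src\proj\main.cpp`, at the cost of losing the directory information.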

Thanks for the help

Pingback: ETW Central | Random ASCII

Bruce, this was great investigative work that answered a question I’ve been having with some of my Visual Studio projects for a long time – it turns out that I had been auto-generating project (.vcxproj) files with unique “&lt;ObjectFileName&gt;” entries for each file to avoid possible collisions of two files with the same base name but different subdirectories, not realizing that this effectively turned my parallel builds into serial ones despite the /MP parallel compile switch being on!
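A project generator could first check which source files actually collide instead of giving every file a unique per-file setting. The sketch below shows only that detection step, under the assumption that the generator has the list of source paths available. The function names are hypothetical, and the comparison is case-sensitive, which is stricter than the Windows file system.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// File name without directory or extension, e.g. "net\\util.cpp" -> "util".
std::string base_name(const std::string& path) {
    const auto slash = path.find_last_of("/\\");
    const std::string file =
        (slash == std::string::npos) ? path : path.substr(slash + 1);
    const auto dot = file.find_last_of('.');
    return (dot == std::string::npos) ? file : file.substr(0, dot);
}

// Groups source paths by base name and keeps only the groups with more
// than one member, i.e. the files whose default .obj names would clash
// in a shared output directory.
std::map<std::string, std::vector<std::string>> find_obj_collisions(
    const std::vector<std::string>& sources) {
    std::map<std::string, std::vector<std::string>> groups;
    for (const auto& source : sources) {
        groups[base_name(source)].push_back(source);
    }
    for (auto it = groups.begin(); it != groups.end();) {
        if (it->second.size() < 2) {
            it = groups.erase(it);
        } else {
            ++it;
        }
    }
    return groups;
}
```

Given `core\util.cpp`, `net\util.cpp` and `main.cpp`, only the two `util` files are reported, so only those would need special handling.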

Hey, cool. Glad that helped.

My build just went from 9 to three minutes. Your awesome.

*You’re.

Yea, that.

I’ve found that running “gcc file1.c file2.c file3.c file4.c” is dramatically faster than running “gcc file1.c; gcc file2.c; gcc file3.c; gcc file4.c”. So I think there is an IO component as well.

Could be process creation also – that difference doesn’t indicate to me an I/O difference.

I wish people would stop saying compiling isn’t significantly IO bound. I got a 44% reduction in build time from 10:06 to 5:41 by going SSD. Before going SSD, whole program optimization and LTCG were 57% of my build time and turning on multiprocessor building only gained me 20% (this is on VC2010). http://onemanmmo.com/index.php?cmd=newsitem&comment=news.1.167.1

I think most people talk about CPU being the bottleneck under the assumption that you have already spent a few hundred on an SSD and a bunch of ram. At that point, with a decent build system your machine will be pegged at 100% on all CPU cores and there isn’t really anywhere to go.

PS – If you’re still on VS2010, you should strongly consider upgrading. MS have made some massive gains in 2013 and 2015. If your build system supports it, to ease the transition, you can also switch out just the linker – I’ve had good success (and speedups) using the 2010 compiler with the 2013 linker for example. You can even use the Express editions 🙂

The main assumption I make is that people have enough RAM. RAM is cheap and yes, if you don’t have enough then you may end up being I/O bound. The best fix is to buy enough RAM so that the disk cache can do its job. An SSD is not required, although they are wonderful for many other reasons.

I’d argue that an SSD is required for the VS2015 IDE; it’s very painful without one.

I agree.

If you have enormous amounts of memory and rarely reboot then VS 2015 will *eventually* run smoothly without an SSD, but SSDs are really great devices. I no longer dread rebooting my laptop.

If your build times are significantly I/O bound, especially on warm builds, then that means you don’t have enough memory. An SSD is a valid way of dealing with insufficient memory, but you should also consider getting enough memory. Disk caching makes I/O mostly irrelevant.

A new kid on the block to help build faster: https://stashed.io

It does caching, auto-multithreading, supports pdb and pch.

All that without changing anything in your configuration.

A little late to the party, but I’ve used Tundra (https://github.com/deplinenoise/tundra) as my primary build system for years and it behaves incredibly well.

Thank you for this article! I am used to working in C# where all of the magic is usually managed for you, and I have never struggled with build times before.

However, I am new to C++ and have recently been frustrated by slow build times in VS2019. This tip has made a big difference!