I recently discovered that Microsoft’s VC++ compiler loads mshtml.dll – also known as Internet Explorer. The compiler does this whenever the /analyze option (requesting static code analysis) is used. I’m no compiler architecture expert, but a compiler that loads Internet Explorer seems peculiar.

This isn’t just a theoretical concern either. I discovered this while investigating why too much static-analysis parallelism causes my machine to become unresponsive for many minutes at a time, and the mshtml window appears to be part of the cause.

As usual I used xperf/ETW to find the culprit.

Update, November 2015. Despite recent comments on the bug (which, I think, has an awesome title) this bug is still present with VC++ 2015 on Windows 10. That’s pretty lame. I can only assume that the VC++ and Windows teams are arguing about whose fault it is. And VC++ 2017 on Windows 10 also still has the bug – but now I know the internal bug number.

I first noticed this bug in 2011, but I guess I didn’t have the skills and experience to properly investigate the issue until 2014. This post is much more satisfying than the original.

Does it matter?

I don’t spend my days looking for trouble – it finds me. In this case I was trying to use /analyze on a new codebase.

(see Two Years (and Thousands of Bugs) of Static Analysis for practical advice on using /analyze)

I have parallel compiles enabled, and also parallel project builds. Since both of these parallelization options default to numProcs-way parallelism this can lead to numProcs*numProcs compilers running, which on my work machine would mean up to 144 parallel compiles. 144 parallel compiles is excessive, to be clear, but msbuild doesn’t supply a way of getting full parallelism without getting excessive parallelism, so I didn’t have much choice.
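As a rough illustration of that arithmetic, here is a minimal Python sketch. The function name and the figure of 12 logical processors (which is what 144 = 12 × 12 implies) are assumptions for illustration; nothing here is an msbuild API.

```python
from typing import Optional


def worst_case_compiles(num_procs: int,
                        project_jobs: Optional[int] = None,
                        compile_jobs: Optional[int] = None) -> int:
    """Upper bound on simultaneous compiler processes.

    Both knobs default to num_procs, mirroring the defaults described above.
    """
    project_jobs = num_procs if project_jobs is None else project_jobs
    compile_jobs = num_procs if compile_jobs is None else compile_jobs
    return project_jobs * compile_jobs


num_procs = 12  # assumed: 12 logical processors
total = worst_case_compiles(num_procs)
# Oversubscription ratio: compiler processes per logical processor.
print(total, "compiles,", total / num_procs, "per logical processor")
```

Capping either knob shrinks the product, which is why lowering the project-build limit reduces the worst case.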

I have a fast SSD and 32 GB of RAM so I would expect some sluggishness but not a total meltdown. And indeed with normal compiles a bit of sluggishness is what I get. But when compiling with /analyze my machine becomes unresponsive for up to half an hour! Mouse clicks take tens of seconds to be processed, and even Task Manager can only rarely update its window. This happens every time I do a highly parallelized /analyze build. I first noticed this problem with VS 2010 but I didn’t seriously investigate the problem until I hit it again when using VS 2013.

Hang analysis

I have ETW tracing running on my machine 24×7 via UIforETW. Data is recorded to a ~300 MB circular buffer and I can type Ctrl+Win+C to record a trace at any time. This was designed for ease of use when recording traces while playing a game, but it’s also handy when your desktop is locked up and you still need to record a trace. After recording a trace I managed to cancel the build and regain control of my desktop. Then I analyzed the trace and figured out what was going on.

(see Xperf Basics: Recording a Trace (the ultimate easy way) for how to record a trace with UIforETW)

The question “why is my computer unresponsive when doing dozens of simultaneous compiles” is difficult to answer – it’s too broad. With dozens of compilers all fighting for CPU time it is normal for any given thread to be CPU starved some of the time. I needed to find a more tightly scoped question to investigate.

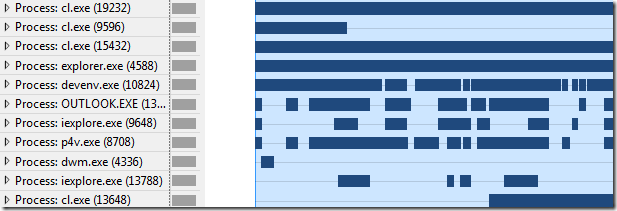

WPA has a very helpful graph when you are investigating a UI hang. Windows keeps track of how long each application goes without checking for messages and if an application goes ‘too long’ without checking for messages then an ETW event is emitted. If you are recording data from the right provider (Microsoft-Windows-Win32k) then those events will be in your trace and a UI Delays graph will be shown in the System Activity area. Normally this graph should be empty, but on the trace of my unresponsive system it showed a sea of bars representing dozens of MsgCheck Delay events from every process on my system – here is just a small fraction of them:

Curiously enough, about three quarters of the hung programs were instances of cl.exe. Windows was reporting that the compiler was not running its message pump. This immediately brought up the question of why the compiler has a message pump!

Let’s ignore that for now – just think of it as foreshadowing.

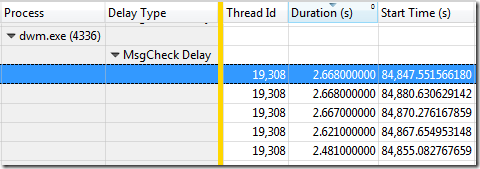

The next step was to choose a hung application and figure out why it was unable to pump messages. I chose dwm.exe (the Desktop Window Manager) because it runs at high priority. It should be able to run smoothly even on an overloaded system so if it is getting hung then you know things have gotten bad.

The UI Delay events include the process Id and thread Id for the thread that is failing to pump messages, and the interval of time during which no messages were pumped. This gives us enough information to finally have a well-formed question:

Why is thread 19,308 of process dwm.exe(4336) failing to pump messages for 2.668 s starting at 84847.551566180 s into the trace?

That is a concrete question, and now we can figure out how to answer it.

Having this well formed question is quite important because if we naively use wait analysis then we will find that many threads go for many seconds without running. When doing wait analysis it is important to remember that an idle thread is not a problem – most threads on your computer should be idle most of the time. What matters is an unresponsive thread – a thread that does not promptly respond when it should. That’s why the UI Delay events are so important – they highlight a thread that is failing to respond to the user.

(see Xperf Wait Analysis–Finding Idle Time for a detailed look at how to follow wait chains)

The slow-to-respond dwm thread was waiting in ExAcquireResourceExclusiveLite, multiple times, sometimes waiting for hundreds of ms. The ReadyingProcess and other columns, documented here, tell us who “readied” the dwm thread – who released the lock and let it run. I manually followed this chain for a while but after a few hundred context switches I got bored.

So, I used wpaexporter to export all of the context switches for the relevant range. Then I filtered this to only show lines where ExAcquireResourceExclusiveLite was on the NewThreadStack, and then I got Excel to calculate the time difference between adjacent context switches. This was a quick-and-dirty way of finding an approximate lock chain, and who was taking a long time with the lock. These lock handoffs are usually extremely quick – often less than a ms – but a significant number of them took longer, usually about 16 or 32 ms. Even though fewer than 3% of the lock handoffs took more than 15 ms, these slow lock handoffs accounted for more than 75% of the time I surveyed.
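The spreadsheet step can be sketched in a few lines of Python. This is only an approximation of the analysis described above: the column names (`SwitchInTime (s)`, `NewThreadStack`) and the sample rows are made up for illustration, since the real columns depend on the WPA profile handed to wpaexporter.

```python
import csv
import io

LOCK_FUNCTION = "ExAcquireResourceExclusiveLite"
SLOW_HANDOFF_S = 0.015  # the 15 ms threshold used in the text


def summarize_handoffs(csv_file,
                       time_col="SwitchInTime (s)",    # assumed column name
                       stack_col="NewThreadStack"):    # assumed column name
    """Return (handoff_count, slow_count, fraction_of_time_in_slow_handoffs)."""
    # Keep only context switches whose new thread was waiting on the lock.
    times = sorted(
        float(row[time_col])
        for row in csv.DictReader(csv_file)
        if LOCK_FUNCTION in row[stack_col]
    )
    # Time between adjacent lock-related context switches approximates
    # how long each handoff took.
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    slow = [gap for gap in gaps if gap > SLOW_HANDOFF_S]
    total = sum(gaps)
    return len(gaps), len(slow), (sum(slow) / total if total else 0.0)


# Made-up sample rows, standing in for an exported trace.
SAMPLE = """SwitchInTime (s),NewProcess,NewThreadStack
1.0000,cl.exe,CreateWindowExW/ExAcquireResourceExclusiveLite
1.0005,dwm.exe,SetWindowPos/ExAcquireResourceExclusiveLite
1.0008,explorer.exe,WaitForSingleObject
1.0010,cl.exe,CreateWindowExW/ExAcquireResourceExclusiveLite
1.0170,cl.exe,CreateWindowExW/ExAcquireResourceExclusiveLite
1.0175,dwm.exe,SetWindowPos/ExAcquireResourceExclusiveLite
"""

count, slow_count, slow_fraction = summarize_handoffs(io.StringIO(SAMPLE))
print(count, slow_count, round(slow_fraction, 2))
```

In this toy data one handoff out of four is slow, yet it accounts for most of the elapsed time, which is the same shape as the real result.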

I then looked at some of the context switch data more closely and it appears that the slow lock handoffs are because sometimes a thread is given its turn to get the lock but it doesn’t get any CPU time. So, the lock sits there unused until the next OS scheduling interval gives the thread some CPU time. It appears to be a priority inversion in the ntoskrnl.exe locks.

I have heard that this priority inversion has been fixed in recent versions of Windows.

The calls to ExAcquireResourceExclusiveLite were all from functions like SetWindowPos, CreateWindowEx, etc. Clearly the resource that was being fought over was a key part of the windowing system.

The very first thread that I saw in the lock chain was part of the VC++ compiler. It was acquiring the lock from CreateWindowExW, which seemed weird for a compiler. And CreateWindowExW was being called by mshtml, which seemed even weirder. And in fact, about 65% of the traffic on the windowing system lock was from the VC++ compiler, mostly via mshtml.dll.

So, when you run /analyze the compiler consumes lots of CPU time to analyze your code, and it also opens a window (two actually, one for COM purposes). If you run many copies of the compiler then you get many windows being opened, and over-subscribed CPUs. And madness ensues.

The compiler instances are running at a slightly lower priority than ‘normal’ windowing processes such as Visual Studio and Outlook and this lower priority together with the high CPU demand and their heavy use of the windowing lock seems to be a crucial part of the problem, by leading to priority inversions.

Technically I don’t know that mshtml and the opening of the windows is the cause of hangs. I know that the hangs only happen with /analyze compiles, I know that /analyze compiles load mshtml.dll, and I know that mshtml.dll shows up on some of the wait chains. Correlation is not causation, but it sure is suspicious.

If Microsoft removes mshtml.dll from the compiler then I’ll happily re-run my tests and report back.

But, but why?

Without access to a lot of source code I can’t tell exactly what is going on, but here’s what I know. If you run the compiler with the /analyze option then it loads mspft120.dll – the /analyze DLL. Then mspft120 loads msxml6.dll to load an XML configuration file. Then msxml6 loads urlmon.dll to open the stream, and finally urlmon loads mshtml.dll. Then mshtml.dll creates a window, because that’s what it does.

The XML files being loaded are:

- res://C:\Program Files (x86)\Microsoft Visual Studio 12.0\VC\BIN\mspft120.dll/213

- res://mspft120.dll/300

I’m sure that every step in this process makes sense in some context – just not in this context. I suspect nobody ever noticed that mshtml.dll was being loaded, or else they didn’t run enough parallel compiles for it to matter.

Extraordinary claims require extraordinary evidence

I feel skepticism from my readers. This is good. If somebody told me that the VC++ compiler loaded a web browser I would doubt them. I hope that you trust me, but my feelings won’t be hurt if you feel it is appropriate to trust-but-verify. I can help.

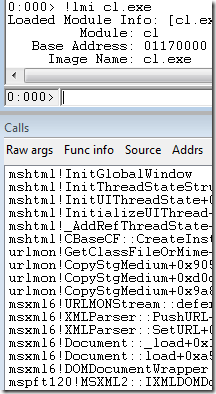

If you are comfortable using windbg then verification is fairly easy. Just run these commands from a command prompt:

- "%VS120COMNTOOLS%..\..\VC\vcvarsall.bat"

- set _NT_SYMBOL_PATH=SRV*c:\symbols*https://msdl.microsoft.com/download/symbols

- windbg.exe cl.exe /analyze SomeSourceFile.cpp

- In the windbg command field type either “sxe ld mshtml” or “bp mshtml!InitGlobalWindow”

These commands are sufficient to load Microsoft’s compiler under windbg and catch mshtml being loaded or creating a window. More details:

- The first command sets the path so that you can run cl.exe.

- The second command sets up access to Microsoft’s symbol servers – c:\symbols is any local directory where you want the symbols cached.

- The third command runs windbg with the compiler as the process to debug – SomeSourceFile.cpp is any source file on your machine.

- In the last step, the first windbg command says to break when mshtml.dll is loaded. The second one says to break when the InitGlobalWindow function in mshtml is hit. You can use either or both of these.

Then just hit F5 or type ‘g’ into the windbg command field to start debugging. After some delays to download symbols execution will stop at your breakpoint. Then you can type ‘kc’ to get a callstack, and then ‘q’ to quit.

You can also investigate this using Visual Studio. Run devenv.exe from the command prompt where you did the first two steps and set up the debugging session appropriately. You can’t set breakpoints on module load but you can look for module load messages in the output window. I couldn’t set a breakpoint on InitGlobalWindow but I was able to set a breakpoint on CreateWindowExW.

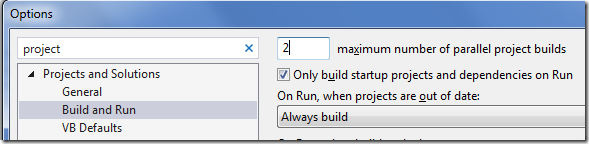

Mitigations

Until Microsoft fixes their compiler to not load their web browser it seems impossible to avoid this problem when doing lots of parallel builds. The only solution that I am aware of is to reduce the amount of parallelism. Setting the maximum number of parallel project builds to a smaller number seems to reduce the hangs to a more manageable level – but it also makes builds take longer.

You can do this from the command line (important for build machines) with this command:

reg add "HKCU\Software\Microsoft\VisualStudio\12.0\General" /v MaxConcurrentBuilds /t REG_DWORD /d 8 /f

This sets the maximum number of parallel project builds to 8, for Visual Studio 2013 (version 12.0). Adjust the version number and amount of parallelism to your needs.

You can also pass a parameter to the compiler’s /MP option to specify the maximum compile parallelism for that set of source files.
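For build machines that handle several Visual Studio versions, the two settings can be generated together. The following is a small Python sketch that only builds the strings; the helper name is hypothetical, and the `/MP4` value in the usage line is an arbitrary example rather than a recommendation.

```python
def parallelism_settings(vs_version: str, max_project_builds: int, max_compiles: int):
    """Build the reg.exe command line and the cl.exe /MP switch as strings.

    Nothing is executed here; the caller decides how to run or store them.
    """
    if max_project_builds < 1 or max_compiles < 1:
        raise ValueError("parallelism limits must be at least 1")
    reg_command = (
        'reg add "HKCU\\Software\\Microsoft\\VisualStudio\\{}\\General" '
        "/v MaxConcurrentBuilds /t REG_DWORD /d {} /f"
    ).format(vs_version, max_project_builds)
    mp_switch = "/MP{}".format(max_compiles)  # cl.exe takes the count directly after /MP
    return reg_command, mp_switch


reg_command, mp_switch = parallelism_settings("12.0", 8, 4)
print(reg_command)
print(mp_switch)
```

With these example values the worst case drops from 144 to 8 × 4 = 32 simultaneous compiles.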

I suspect that having fewer CPU cores would also help, since that would automatically reduce the over-subscription ratio – but I want lots of parallelism. Oh well.

If Microsoft had a global compiler scheduler then they could avoid over-subscribing CPUs.

For more details about how to parallelize your MSBuilds (which is desirable, even though it allows this problem) see my previous blog post about Making VC++ Compiles Fast Through Parallel Compilation.

A bug has been filed (but Microsoft’s connect bug database has gone away, taking these historical records with it).

Update

A few people reasonably asked why the mitigation of dropping to two-way parallel project builds is not enough to close the case. One reason is, of course, that opening a web browser from a compiler is still a bad idea. Another reason is that twelve-way parallel project builds is the default for a twelve-thread CPU – default behavior should behave better than that.

The final reason why that mitigation is not ideal is that sometimes more than two-way parallel project builds is needed in order to get ideal build speeds. As I showed in my parallel compilation blog post last week, parallel compilation is off by default, and even when it is on many projects will spend a portion of their time doing serial builds. So, a combination of parallel compilation and parallel project builds is appropriate.

In particular, we use precompiled headers for most of our projects. VC++ builds the precompiled header as the first step in building these projects, and it compiles nothing else until it finishes. So, at the start of every full build the only parallelism I get is from parallel project builds.

It is unfortunate that VC++/msbuild don’t have a global scheduler that avoids running 144 compiles in parallel. A global compiler scheduler is the only way to have full intra- and inter-project parallelism without over committing. Getting a global compiler scheduler would actually be quite easy. Just get MSBuild to create a global semaphore that is initialized to NumCores and pass in a switch to the compilers telling them to acquire that semaphore before doing any work, and release it when they’re done. Problem solved, trivially.
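To show the idea, here is a small Python sketch in which threads stand in for compiler processes. It is an illustration under assumptions, not how MSBuild or cl.exe work: `NUM_CORES`, the project and file counts, and the function names are all hypothetical, and a real version would need a named cross-process semaphore rather than an in-process one.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

NUM_CORES = 4            # hypothetical core count
PROJECTS = 4             # hypothetical number of projects built in parallel
FILES_PER_PROJECT = 4    # hypothetical number of files compiled in parallel per project

# The "global compiler scheduler": one semaphore shared by every compile.
compile_slots = threading.BoundedSemaphore(NUM_CORES)
stats_lock = threading.Lock()
running = 0
peak_running = 0


def compile_file(source: str) -> None:
    """Acquire a global slot before doing any work, release it when done."""
    global running, peak_running
    with compile_slots:
        with stats_lock:
            running += 1
            peak_running = max(peak_running, running)
        time.sleep(0.01)  # stand-in for the real compile
        with stats_lock:
            running -= 1


def build_project(project: int) -> None:
    """Intra-project parallelism: every file in the project is submitted at once."""
    sources = [f"project{project}/file{i}.cpp" for i in range(FILES_PER_PROJECT)]
    with ThreadPoolExecutor(max_workers=FILES_PER_PROJECT) as pool:
        list(pool.map(compile_file, sources))


def build_solution() -> int:
    """Inter-project parallelism: every project is started at once."""
    with ThreadPoolExecutor(max_workers=PROJECTS) as pool:
        list(pool.map(build_project, range(PROJECTS)))
    return peak_running


if __name__ == "__main__":
    print("peak simultaneous compiles:", build_solution())
```

Sixteen compiles are requested at once, but the semaphore keeps the number actually running at or below `NUM_CORES`, whichever mix of projects and files happens to be ready.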

Also, the parallel-project builds setting is a global setting, and if I lower it then some solutions will build more slowly.

Reddit discussion is here.

I guess the takeaway here is that you should avoid using MSXML unless you don’t mind getting IE loaded as well?

I don’t know if msxml always loads IE or if there are settings to avoid it. I’m hopeful that fixing this might be just a matter of setting a flag, but without source-code I can only guess.

I can tell from the call stack that it is calling http://msdn.microsoft.com/en-us/library/ms762722%28v=vs.85%29.aspx One solution would be to load the resource manually using Win32 APIs, generate a BSTR, and then call http://msdn.microsoft.com/en-us/library/ms754585%28v=vs.85%29.aspx instead. And yes, don’t forget to disable loading of external DTDs too.

One (perhaps painful) mitigation suggestion — figure out what urlmon.dll is using from mshtml.dll (or what msxml6.dll is using from urlmon.dll), and provide a small dummy impl to provide just what’s needed? Unfortunately a lot of this stuff is being created via in-process COM, so it wouldn’t be as simple as just dumping imports/looking at call stacks, but it should be doable.

I was going to suggest the same approach, maybe trying to replace mshtml.dll by something using curl, to avoid creating windows and other UI objects.

“If Microsoft had a global compiler scheduler …”

– Amen

This seems like the start of a better solution:

https://github.com/tpoechtrager/wclang

Or use the official Windows Clang builds: http://llvm.org/builds/

Who develops under Windows, anyway? You might as well commit yourself to an insane asylum right away.

This is the most correct statement in the entire comments section.

Simply quit developing under winblows – and these problems will be gone.

It’s always entertaining to see the anti-ms trolls really desperate to get people to read their opinions.

Just because they are likely trolling doesn’t mean that they aren’t 100% correct on this.

Abandoning Windows is a valid solution, but giving up on millions (billions?) of customers because of a few technical problems is not clearly the right solution. A developer who does that is likely to find that OSX and Linux are also imperfect constructs, but with fewer customers. I’m glad to now be a competent Linux developer, but I won’t be abandoning Windows completely anytime soon.

A somewhat popular strategy is developing under some UNIX-y platform and using MinGW to generate Windows binaries.

> I suspect nobody ever noticed that mshtml.dll was being loaded, or else they didn’t run enough parallel compiles for it to matter.

Maybe, or maybe not. Do you remember that anti-trust lawsuit about 10 years ago now against M$ for having bundled IE with win to the detriment of other browsers? Do you remember the “trick” that M$ tried to pull? Removing IE (which meant “del mshtml.dll” from their tricky interpretation). And remember their argument: “removing IE from windows results in a windows that fails to operate” (because they themselves had made so many things “load” IE (but never use it) such that “removing IE” meant “killing windows”).

I suspect what you’ve discovered is a leftover from their attempts to trick the court system. Why does VC++ load mshtml.dll – because we need another app. to fail if we are forced by the govt. to remove IE, and if we get enough apps to fail, the govt. will give us an exception to the lawsuit.

Sounds very far fetched. I’d subscribe to the “never ascribe to malice that which is adequately explained by incompetence” line of thinking instead.

Windows is a very complex beast, and I can definitely see how dependencies like this can sneak in over time as part of some new amazing feature.

> Sounds very far fetched. I’d subscribe to the “never ascribe to malice that which is adequately explained by incompetence” line of thinking instead.

Except when one is dealing with M$, and their desire to maintain their monopoly at all costs. At which point the statement, in regards to M$, is instead: “never ascribe to incompetence that which is adequately explained by malice”.

There’s nothing wrong in bundling Windows with IE (or any other programs for that matter); the user has always had a free choice of browser and media player. We need to respect the choice of the user, but we also need to respect the right of the company to shape their products in any way that they want (with only a few exceptions).

The court is corrupt in that it didn’t defend fundamental rights of Microsoft, and instead decided to jump onto the hyped anti-MS propaganda invented by competitors, and people who generally just have a low understanding of right and wrong.

I may not like everything Microsoft does, but bundling software cannot, and should not, trigger fines and governmental intrusion – unless the company blocks the user’s free choice of installing something else. In fact, I personally couldn’t care less as a user – I only use Firefox and Chrome because they are better browsers – if IE was the best, I would use that instead. MS shouldn’t have to pay for people’s ignorance, it’s as simple as that – and we should not support such abuse of authority.

TL;DR – If it weren’t for a Google & Mozilla power team duo sidestepping Microsoft’s monopolistic tactics, we would still be stuck with IE7 as the only browser of choice.

You probably weren’t around at the time is my guess. It’s not that I couldn’t install another browser – it’s that at the time, browsers cost money. Bundling one for free with the OS destroyed other competitive businesses – businesses that may have eventually built a better OS if their funding was not cut out from underneath them – they had already built a far better browser. We are only seeing free browsers that are far better than IE because Google made search profitable enough to share with Mozilla Foundation, which helped make Google profitable enough to make Chrome. Can you imagine how lousy the internet would be if we had to deal with IE’s 3-year behind the curve buggy implementations all the time….and that’s with competition to drive progress! Even if you are not a huge Google fan, you have to admit they have created and released some very cool tech and the search is by far the leader that all other engines are measured against. When competition thrives, businesses and consumers win. MS definitely tried to thwart this for very greedy means.

@nelsonenzo: I think you are mixing the issue of making browsers free with the integration into Windows.

@yuhong, no I’m not. These concepts are very intertwined.

“The plaintiffs alleged that Microsoft abused monopoly power on Intel-based personal computers in its handling of operating system sales and web browser sales.”

Read more about it here: http://en.wikipedia.org/wiki/United_States_v._Microsoft_Corp.

If you want to be taken seriously, stop using a derisive abbreviation like “M$” and talk like a grown-up.

Microsoft – the purveyor of dog feces cleverly wrapped in attractive packaging.

Comments that stoop to insults and/or add no value are at risk for deletion.

Idea, stop using microsoft ^_^

That is exactly what he needs to do.

Quitters.

I make my living writing code, mostly on Windows.

Have you tried using the MinGW gcc for compilation? Visual Studio is a mess.

I have not tried using MinGW. Do you have any references to comparisons, of build times and code quality?

In this case I was using the /analyze feature which is VC++ specific, although some of the bugs it finds are also found by gcc.

Could it be trying to validate the XML document by downloading a DTD/XSD?

I don’t think it is. I looked at the resource and saw no suggestion of that, and no hint of network traffic in Fiddler.

Can’t you blame swapping for the sluggishness? I don’t know how much RAM the MSVC compiler needs, but if I were to run 144 instances of g++, I would expect to require 70GB+ of RAM.

FWIW, in my experience, MSVC is actually quite lean on memory compared to GCC. This was very obvious when comparing building Frostbite for PS3 vs X360.

I never saw it actually get to 144 instances, but even if it did there would be no problem with swapping on my machine. A lot of the working set of the compiler is shared code so the working set of 144 compilers is much less than 144*working-set-of-one-compile. Extrapolating from memory consumed by the compiles that were running I’m sure that I would have had at least 10 GB free if I’d got to the full 144-way parallelism.

While I agree a global compiler scheduler would be a really good idea, if you’re running so many compiles that it’s making your machine sluggish, you’re probably running too many compiles. Too much time is being spent on swapping between processes. I suspect that if you did some timing tests, you would want somewhere between one and two compiler processes per core.

I agree that running too many compiles is counter-productive. The original post glossed over this (I’ve added an update) but the point is that a global compiler scheduler is the only way to allow full compile parallelism and full project parallelism without over-committing. For maximum build speeds you need full intra-project and inter-project parallelism and the only way to get this without over committing is to have a global compiler scheduler. Such a scheduler would enforce a global cap on parallel compiles so that builds could smoothly switch from intra- to inter-project parallelism.

It’s worth pointing out that gcc can also hit this problem of n^2 compiles going on. This is discussed in http://hubicka.blogspot.com/2014/04/linktime-optimization-in-gcc-2-firefox.html. If you parallelize project-level builds with -j16 and request parallel link-time-optimization with -flto=16 then you can end up with 256 compilations in parallel.

In other words, gcc has exactly the same problem. It also lacks a global compiler scheduler so if you request maximum project-level parallelism and maximum lto parallelism then you may over-commit, but if you don’t maximize both then you may under-commit in other circumstances.

It’s interesting to see these problems repeat themselves in totally different software.

Oh, and Anon? Grow up. http://www.hanselman.com/blog/MicrosoftKilledMyPappy.aspx

This is probably related to the API they chose to implement. In C#, I have been aware for a few years now, that the System.XML.XmlDocument class uses mshtml (IE), whereas the System.XML.Linq.XDocument class uses completely new managed code. The performance difference is DRASTIC.

I would guess that they simply need to update their codebase.

Good tip.

I wonder though about whether the compiler uses managed code.

I really enjoyed reading this.

Is it even legal to debug proprietary software like you did?

I sure hope this type of investigation is legal. Prohibiting investigation of the software that we use would be horrible. Some license agreements do restrict reverse engineering but I’m not sure if those have been tested in court.

If my computer is performing badly then I am going to investigate. I am pleased that Microsoft makes available awesome investigative tools (go ETW/xperf!), for free, and also makes symbols available for their OS and much other software.

Random question, it’s not trying to connect to anything is it? If you wireshark the connection, or just unplug the ethernet cable, does anything improve?

I ran fiddler and it didn’t notice any network traffic.

A compiler cache might be a work-around. I’m not extremely familiar with VS, but if you can avoid calls to cl.exe, it should ultimately avoid the calls into the windowing system.

Embed a web browser in your compiler? What could possibly[1] go wrong?

[1] http://cm.bell-labs.com/who/ken/trust.html

I had heard about a compiler inside a browser, but a browser inside a compiler is a novelty.

This is a good time to note that MSHTML.DLL is commonly updated by out of band IE updates.

In my experience MSHTML.DLL is usually only updated for security fixes. I would expect the fix to show up in IE 12, but that could be a *long* wait.

I checked the most recent batch of updates (May 14, 2014) and the bug is still there. But maybe next month…

The problem is not in MSHTML.DLL. The point is that this is another reason this problem is bad, as Windows cannot replace files while they are in use.

Do you use wprui to record traces? How do you tell it to include Microsoft-Windows-Win32k? Did you write a custom XML profile?

I sometimes use wprui. It should use Microsoft-Windows-Win32k by default. You can see what is recorded by using wpr:

wpr (prints list of options)

wpr -profiles (prints list of available profiles)

wpr -profiledetails generalprofile (prints providers for the general profile)

The last command shows Microsoft-Windows-Win32k in the CaptureState section, and my experience is that it is one of the providers because I get active-window data. This is on Windows 7.

Excellent. Thank you!

Also, do you know where the built-in wprp xml files are stored (the ones in wpr -profiles) so I can examine/fork them?

Sorry, I don’t know. Anybody else?

I was wrong. Microsoft-Windows-Win32k is not enabled by default by wprui. It should be but it is not. So I just updated the MultiProvider.zip file linked from https://randomascii.wordpress.com/2013/04/20/xperf-basics-recording-a-trace-the-easy-way/ to fix this. I added a WindowsWin32kProfile.wprp file that can be easily added to wprui per the directions in the blog post.

Do you know what the **best** part about this is? If you run cl.exe under application verifier (like when hitting/investigating an Internal Compiler Error), you get all of the nice memory leaks in mshtml!

Meh. Memory leaks at process shutdown are a state of mind. They are not themselves bad (the OS can free the memory faster than the application) – they are only a problem if they indicate memory that has leaked during compilation.

When they’re at process shutdown, they’re not. When you’re 15 seconds into compilation, then there’s something suspicious going on.

Application Verifier shows memory leaks prior to shutdown? How does it know?

I’m guessing (haven’t bothered investigating) that some DLL unloads or calls TerminateThread.

I just noticed that this post has some very unusual tags. Is “carl sagan, nuclear testing, peanut butter, rhetoric” supposed to be a secret code/message of some sort?

I don’t remember why I put in carl sagan, nuclear testing, or rhetoric. But peanut butter? Isn’t it obvious?

I guess you’re too young to remember the reference which gave me the title of this post: https://www.youtube.com/watch?v=DJLDF6qZUX0

Yup, too young. That’s (actually) quite funny now that it’s in context.

As far as your connect entry says, this should be fixed in Windows 8.1 (maybe with an update). Can anyone confirm this?

I’ll take a look.

I just checked on Windows 8.1 Enterprise with VC++ 2015 and confirmed that the bug is still there. mshtml.dll is loaded, and InitGlobalWindow is called.

That is disappointing.

I can’t comment on the bug from work for some reason but I will try to comment on it from home.

Thx Bruce for checking!

I hope they fix it soon.

Hi Bruce!

Since MS won’t give us any hint or new information in the connect entry, I’m afraid to ask, but could you please test (when easily possible) with Windows 10 RTM and VC++2015 RTM?

thx,

Vertex

Hi Bruce!

It seems as if MS removed the entry from connect. Either they fixed it or they didn’t see it as an issue of VS. Nevertheless we still don’t know if they may have fixed it (e.g. in Win10) and if not what to do to get it fixed.

Thx,

Vertex

It’s not fixed. I just checked with VS 2015 Update 1 RC on Windows 10 and mshtml.dll is still loaded. Amazing.

Thx bruce for the continuous effort on this topic!

Um I didn’t read what you are compiling with M$VC, but I’m sure that if it’s profitable, and it gets famous enough, then MS very soon will take on this market niche and will re-implement a similar product into its portfolio so you’ll have to fight with The Giant for survival. And you can win a fight with a giant only in a HWood movie. If U’re “lucky”, they’ll buy You with the product instead of re-inventing it…

Moreover, you’ll have helped MS to get there by improving their other software products which have defeated products of other companies in the given market niche….

Possibly. But there is a lot of software out there and Microsoft is unlikely to re-implement them all.

When I wrote this I was working at Valve on Steam and their various games.

I now work at Google on Chrome. It is indeed famous enough that Microsoft has re-implemented a similar product into its portfolio. We’ll see how it goes.

Curiously enough, Google is working on improving the clang compiler and now has the option of compiling Chrome with clang instead of the VC++ compiler.

Thanks Bruce for pointing out this strange behavior in cl.exe /analyze. The good news is, we no longer load mshtml.dll as part of /analyze. The fix is available in our latest Preview build at https://www.visualstudio.com/vs/preview for everyone to try out.

I also wanted to be open about why it took us a second round of iteration to get this fixed. In the Visual Studio 2017 release, we fixed part of the problem by removing msxml6.dll from the defect processing engine. However, that alone wasn’t enough. There was a second component that was still using it for parsing model files embedded in the code analysis DLL as an HTML resource. We got rid of this second component in the latest Preview builds. We don’t import msxml6.dll in our XML parser anymore.

MSXML6 would not require MSHTML if you loaded the resource manually instead of using res://

Now you offer advice on how to fix this bug?

Though a better way to do this is actually to use IStream.

Can we expect any performance benefits from this change? Or less UI blocking during build or something like that?

Great question, Vertex. There are two main performance benefits of this change. Firstly, cl.exe /analyze no longer loads the browser dlls, therefore you shouldn’t see any CreateWindow calls from the compiler. This should fix the UI blocking issues during build. Secondly, we now use IStream/XmlLite technology to parse the resources which is faster than DOM or SAX2.

Note that this only ever applied to /analyze builds, so normal builds should be unaffected.