How much precision does a float have? It depends on the float, and it depends on what you mean by precision. Typical reasonable answers range from 6-9 decimal digits, but it turns out that you can make a case for anything from zero to over one hundred digits.

In all cases in this article when I talk about precision, or about how many digits it takes to represent a float, I am talking about mantissa digits. When printing floating-point numbers you often also need a couple of +/- characters, an ‘e’, and a few digits for the exponent, but I’m just going to focus on the mantissa.

Previously on this channel…

If you’re just joining us then you may find it helpful to read some of the earlier posts in this series. The first one is the most important since it gives an overview of the layout and interpretation of floats, which is helpful to understand this post.

- 1: Tricks With the Floating-Point Format – an overview of the float format

- 2: Stupid Float Tricks – incrementing the integer representation

- 3: Don’t Store That in a Float – a cautionary tale about time

- 3b: They sure look equal… – special bonus post (not on altdevblogaday), also about precision

- 4: Comparing Floating Point Numbers, 2012 Edition

- 5: Float Precision—From Zero to 100+ Digits (return *this;)

What precision means

For most of our purposes when we say that a format has n-digit precision we mean that over some range, typically [10^k, 10^(k+1)), where k is an integer, all n-digit numbers can be uniquely identified. For instance, from 1.000000e6 to 9.999999e6 if your number format can represent all numbers with seven digits of mantissa precision then you can say that your number format has seven digit precision over that range, where ‘k’ is 6.

Similarly, from 1.000e-1 to 9.999e-1 if your number format can represent all the numbers with four digits of precision then you can say that your number format has four digit precision over that range, where ‘k’ is -1.

Your number format may not always be able to represent each number in such a range precisely (0.1 being a tired example of a number that cannot be exactly represented as a float) but to have n-digit precision we must have a number that is closer to each number than to either of its n-digit neighbors.

This definition of precision is similar to the concept of significant figures in science. The number 1.03e4 and 9.87e9 are both presumed to have three significant figures, or three digits of precision.

Wasted digits and wobble

The “significant figures” definition of precision is sometimes necessary, but it’s not great for numerical analysis where we are more concerned about relative error. The relative error in, let’s say a three digit decimal number, varies widely. If you add one to a three digit number then, depending on whether the number is 100 or 998, it may increase by 1%, or by barely 0.1%.

If you take an arbitrary real number from 99.500… to 999.500… and assign it to a three digit decimal number then you will be forced to round the number up or down by up to half a unit in the last place, or 0.5 decimal ULPs. That 0.5 ULPs may represent a rounding error of anywhere from 0.5% at around 100 to just 0.05% at around 999. That variation in relative error by a factor of ten (the base) is called the wobble.

Wobble also affects binary numbers, but to a lesser degree. The relative precision available from a fixed number of binary digits varies depending on whether the leading digits are 10000 or 11111. Unlike base ten where the relative precision can be almost ten times lower for numbers that start with 10000, the relative precision for base two only varies by a factor of two (again, the base).

In more concrete terms, the wobble in the float format means that the relative precision of a normalized float is between 0.5/8388608 and 0.5/16777216.

The minimized wobble of binary floating-point numbers and the more consistent accuracy this leads to is one of the significant advantages of binary floating-point numbers over larger bases.

The variation in relative precision due to wobble is important later on and can mean that we ‘waste’ almost an entire digit, binary or decimal, when converting numbers from the other base.

Subnormal precision: 0-5 digits

Float numbers normally have fairly consistent precision, but in some cases their precision is significantly lower – as little as zero digits. This happens with denormalized, or ‘subnormal’, numbers. Most float numbers have an implied leading one that gives them 24 bits of mantissa. However, as discussed in my first post, floats with the exponent set to zero necessarily have no implied leading one. This means that their mantissa has just 23 bits, they are not normalized, and hence they are called subnormals. If enough of the leading bits are zero then we have as little as one bit of precision.

As an example consider the smallest positive non-zero float. This number’s integer representation is 0x00000001 and its value is 2^-149, or approximately 1.401e-45f. This value comes from its exponent (-126) and the fact that its one non-zero bit in its mantissa is 23 bits to the right of the mantissa’s binary point. All subnormal numbers have the same exponent (-126) so they are all multiples of this number.

The binary exponent in a float varies from -126 to 127

Since the floats in the range with decimal exponent -45 (subnormals all of them) are all multiples of this number their mantissas are (roughly) 1.4, 2.8, 4.2, 5.6, 7.0, 8.4, and 9.8. If we print them to one-digit of precision then we get (ignoring the exponent, which is -45) 1, 3, 4, 6, 7, 8, and 10. Since 2, 5, and 9 are missing that means that we don’t even have one digit of precision!

Since all subnormal numbers are multiples of 1.401e-45f, subsequent ranges each have one additional digit of precision. Therefore the ranges with decimal exponents -45, -44, -43, -42, -41, and -40 have 0, 1, 2, 3, 4, and 5 digits of precision.

Normal precision

Normal floats have a 24-bit mantissa and greater precision than subnormals. We can easily calculate how many decimal digits the 24-bit mantissa of a float is equivalent to: 24*LOG(2)/LOG(10) which is equal to about 7.225. But what does 7.225 digits actually mean? It depends whether you are concerned about how many digits you can rely on, or how many digits you need.

Representing decimals: 6-7 digits

Our definition of n-digit precision is being able to represent all n-digit numbers over a range [10^k, 10^(k+1)). There are about 28 million floats in any such (normalized) range, which is more than enough for seven digits of precision, but they are not evenly distributed, with the density being much higher at the bottom of the range. Sometimes there are not enough of them near the top of the range to uniquely identify all seven digit numbers.

In some ranges the exponent lines up such that we may (due to the wobble issues mentioned at the top) waste almost a full bit of precision, which is equivalent to ~0.301 decimal digits (log(2)/log(10)), and therefore we can only represent ~6.924 digits. In these cases we don’t quite have seven digits of precision.

I wrote some quick-and dirty code that scans through various ranges with ‘k’ varying from -37 to 37 to look for these cases.

FLT_MIN (the smallest normalized float) is about 1.175e-38F, FLT_MAX is about 3.402e+38F

My test code calculates the desired 7-digit number using double precision math, assigns it to a float, and then prints the float and the double to 7 digits of precision. The printing is assumed to use correct rounding, and if the results from the float and the double don’t match then we know we have a number that cannot be uniquely identified/represented as a float.

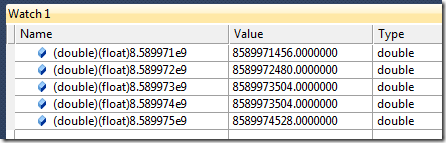

Across all two billion or so positive floats tested I measured 784,757 seven-digit numbers that could not be uniquely identified, or about 0.04% of the total. For instance, from 1.000000e9 to 8.589972e9 was fine, but from there to 9.999999e9 there were 33,048 7-digit numbers that could not be represented. It’s a bit subtle, but we can see what is happening if we type some adjacent 7-digit numbers into the watch window, cast them to floats, and then cast them to double so that the debugger will print their values more precisely:

One thing to notice (in the Value column) is that none of the numbers can be exactly represented as a float. We would like the last three digits before the decimal point to all be zeroes, but that isn’t possible because at this range all floats are a multiple of 1,024. So, the compiler/debugger/IEEE-float does the best it can. In order to get seven digits of precision at this range we need a new float every 1,000 or better, but the floats are actually spaced out every 1,024. Therefore we end up missing 24 floats for each set of 1,024. In the ‘Value’ column we can see that the third and fourth numbers actually map to the same float, shown circled below:

One was rounded down, and the other was rounded up, but they were both rounded to the closest float available.

At 8.589930e9 a float’s relative precision is 1/16777216 but at 8.589974e9 it is just 1/8388608

This issue doesn’t happen earlier in this range because below 8,589,934,592 (2^33) the float exponent is smaller and therefore the precision is greater – immediately below 2^33 the representable floats are spaced just 512 units apart. Because of this, when decimal precision loss happens it is always late in the range.

My test code showed me that this same sort of thing happens any time that the effective exponent of the last bit of the float (which is the exponent of the float minus 23) is -136, -126, -93, -83, -73, -63, -53, -43, -33, 10, 20, 30, 40, 50, 60, 70, or 103. Calculate two to those powers if you really want to see the pattern. This corresponds to just six digit precision in the ranges with decimal exponents -35, -32, -22, -19, -16, -13, -10, -7, -4, 9, 12, 15, 18, 21, 24, 27, and 37.

Therefore, over most ranges a float has (just barely) seven decimal digits of precision, but over 17 of the 75 ranges tested a float only has six.

Representing floats: 8-9 digits

The flip side of this question is figuring out how many decimal digits it takes to uniquely identify a float. Again, we aren’t concerned here with converting the exact value of the float to a decimal (we’ll get to that), but merely having enough digits to uniquely identify a particular float.

In this case it is the wobble in the decimal representation that can bite us. For some exponent ranges we may waste almost a full decimal digit. That means that instead of requiring ~7.225 digits to represent all floats we would expect that sometimes we would actually need ~8.225. Since we can’t use fractional digits we actually need nine in these cases. As explained in a previous post this happens about 30% of the time, which seems totally reasonable given our calculations. The rest of the time we need eight digits to uniquely identify a particular float. Use 9 to play it safe.

printf(“%1.8e”, f); ensures that a float will round-trip to decimal and back

It appears that a lot of people don’t believe that you can print a float as decimal and then convert it back to a float and get the same value. There are a lot of smart people saying things like “text extraction of floats drifts because of the ASCII conversions”. That is only true if:

- Your function that prints floats (printf?) is broken, or

- Your function that scans floats (scanf?) is broken, or

- You didn’t request enough digits.

In short, if you’re getting drift when you round-trip floats to decimal and back then either your C runtime has a bug, or your code has a bug. I use printf(“%1.8e”, f); with VC++ and I have tested that this round-trips all ~4 billion floats, with zero drift. If you want to test this with your own language and library tools then you can easily modify the sample code in the second post in this series to sprintf each number to a buffer and then sscanf it back in, to make sure you get back a bit-wise identical float. This is one time where a floating-point equality comparison is entirely appropriate.

Precisely printing floats: 10-112 digits

There is one final possible meaning of precision that we can apply. It turns out that while not all decimal numbers can be exactly represented in binary (0.1 is an infinitely repeating binary number) we can exactly represent all binary numbers in decimal. That’s because 1/2 can be represented easily as 5/10, but 1/10 cannot be represented in binary.

It’s interesting to see what happens to the decimal representation of binary numbers as powers of two get smaller:

| Binary_exponent | Decimal_value |

| -1 | 0.5 |

| -2 | 0.25 |

| -3 | 0.125 |

| -4 | 0.0625 |

| -5 | 0.03125 |

| -6 | 0.015625 |

| -7 | 0.0078125 |

| -8 | 0.00390625 |

| -9 | 0.001953125 |

Each time we decrease the exponent by one we have to add a digit one place farther along. We gradually acquire some leading zeroes, so the explosion in mantissa digits isn’t quite one-for-one, but it’s close. The number of mantissa digits needed to exactly print the value of a negative power of two is about N-floor(N*log(2)/log(10)), or ceil(N*(1-log(2)/log(10))) where N is an integer representing how negative our exponent is. That’s about 0.699 digits each time we decrement the binary exponent. The smallest power-of-two we can represent with a float is 2^-149. That comes from having just the bottom bit set in a subnormal. The exponent of subnormals floats is -126 and the position of the bit means it is 23 additional spots to the right and 126-23 = 149. We should therefore expect it to take about 105 digits to print that smallest possible float. Let’s see:

1.401,298,464,324,817,070,923,729,583,289,916,131,280,261,941,876,515,771,757,068,283,889,

791,082,685,860,601,486,638,188,362,121,582,031,25e-45

For those of you counting at home that is exactly 105 digits. It’s a triumph of theory over practice.

That’s not quite the longest number I could find. A subnormal with a mantissa filled up with ones will have seven fewer leading zeroes leading to a whopping 112 digit decimal mantissa:

1.175,494,210,692,441,075,487,029,444,849,287,348,827,052,428,745,893,333,857,174,530,571,

588,870,475,618,904,265,502,351,336,181,163,787,841,796,875e-38

Pow! Bam!

While working on this I found a bug in the VC++ CRT. pow(2.0, -149) fits perfectly in a float – albeit just barely – it is the smallest float possible. However if I pass 2.0f instead of 2.0 I find that pow(2.0f, -149) gives an answer of zero. So does pow(2.0f, -128). If you go (float)pow(2.0, -149), invoking the double precision version of the function and then casting to float, then it works. So does pow(0.5, 149).

Perversely enough powf(2.0f, -149) works. That’s because it expands out to (float)pow(double(2.0f), double(-149)).

Conveniently enough the version of pow that takes a float and an int is in the math.h header file so it’s easy enough to find the bug. The function calculates pow(float, -N) as 1/powf(float, N). The denominator overflows when N is greater than 127, giving an infinite result whose reciprocal is zero. It’s easy enough to work around, and will be noticed by few, but is still unfortunate. pow() is one of the messier functions to make both fast and accurate.

How do you print that?

The VC++ CRT, regrettably, refuses to print floats or doubles with more than 17 digits of mantissa. 17 digits is enough to uniquely identify any float or double, but it is not enough to tell us precisely what value they contain. It is not enough to give us the 112 digit results shown in the previous section, and it’s not enough to truly appreciate the sin(pi) trick explained last time.. So, we’ll need to roll our own.

Printing binary floating-point numbers efficiently and accurately is hard. In fact, when the IEEE spec was first ratified it was not yet a solved problem. But for expository purposes we don’t care about efficiency, so the problem is greatly simplified.

It turns out that any float can be represented as a fixed-point number with 128 bits in the integer part and 149 bits in the fractional part, which we can summarize as 128.149 format. We can determine this by noting that a float’s mantissa is a 1.23 fixed-point number. The maximum float exponent is 127, which is equivalent to shifting the mantissa left 127 positions. The minimum float exponent is -126, which is equivalent to shifting the mantissa right 126 positions.

Those shift amounts of our 1.23 mantissa mean that all floats can fit into a 128.149 fixed-point number, for a total of 277 bits.

All we need to do is create this number, by pasting the mantissa (with or without the implied leading one) into the correct location, and then convert the large fixed-point number to decimal.

Converting to decimal is done by two main steps. The integer portion is converted by repeatedly dividing it by ten and accumulating the remainders as digits (which must be reversed before using). The fractional part is converted by repeatedly multiply by ten and accumulating the overflow as digits. Simple. All you need is a simple high-precision math library and we’re sorted. There’s also some special-case checks for infinity, NaNs (print them however you want), negatives, and denormals, but it’s mostly quite straightforward. Here’s the conversion code:

/* See

for the potential portability problems with the union and bit-fields below.

*/

#include <stdint.h> // For int32_t, etc.union Float_t

{

Float_t(float num = 0.0f) : f(num) {}

// Portable extraction of components.

bool Negative() const { return (i >> 31) != 0; }

int32_t RawMantissa() const { return i & ((1 << 23) – 1); }

int32_t RawExponent() const { return (i >> 23) & 0xFF; }int32_t i;

float f;

#ifdef _DEBUG

struct

{ // Bitfields for exploration. Do not use in production code.

uint32_t mantissa : 23;

uint32_t exponent : 8;

uint32_t sign : 1;

} parts;

#endif

};std::string PrintFloat(float f)

{

// Put the float in our magic union so we can grab the components.

union Float_t num(f);// Get the character that represents the sign.

const std::string sign = num.Negative() ? " – " : " + ";// Check for NaNs or infinity.

if (num.RawExponent() == 255)

{

// Check for infinity

if (num.RawMantissa() == 0)

return sign + "infinity";// Otherwise it's a NaN.

// Print the mantissa field of the NaN.

char buffer[30];

sprintf_s(buffer, "NaN % 06X", num.RawMantissa());

return sign + buffer;

}// Adjust for the exponent bias.

int exponentValue = num.RawExponent() – 127;

// Add the implied one to the mantissa.

int mantissaValue = (1 << 23) + num.RawMantissa();

// Special-case for denormals – no special exponent value and

// no implied one.

if (num.RawExponent() == 0)

{

exponentValue = -126;

mantissaValue = num.RawMantissa();

}// The first bit of the mantissa has an implied value of one and this can

// be shifted 127 positions to the left, so that is 128 bits to the left

// of the binary point, or four 32-bit words for the integer part.

HighPrec<4> intPart;

// When our exponentValue is zero (a number in the 1.0 to 2.0 range)

// we have a 24-bit mantissa and the implied value of the highest bit

// is 1. We need to shift 9 bits in from the bottom to get that 24th bit

// into the ones spot in the int portion, plus the shift from the exponent.

intPart.InsertLowBits(mantissaValue, 9 + exponentValue);std::string result;

// Always iterate at least once, to get a leading zero.

do

{

int remainder = intPart.DivReturnRemainder(10);

result += '0' + remainder;

} while (!intPart.IsZero());// Put the digits in the correct order.

std::reverse(result.begin(), result.end());// Add on the sign and the decimal point.

result = sign + result + '.';// We have a 23-bit mantissa to the right of the binary point and this

// can be shifted 126 positions to the right so that's 149 bits, or

// five 32-bit words.

HighPrec<5> frac;

// When exponentValue is zero we want to shift 23 bits of mantissa into

// the fractional part.

frac.InsertTopBits(mantissaValue, 23 – exponentValue);

while (!frac.IsZero())

{

int overflow = frac.MulReturnOverflow(10);

result += '0' + overflow;

}return result;

}

Converting to scientific notation and adding digit grouping is left as an exercise for the reader. A Visual C++ project that tests and demonstrates this and includes the missing HighPrec class and code for printing doubles can be obtained at:

https://www.cygnus-software.com/ftp_pub/printfullfloats.zip

Practical Implications

The reduced precision of subnormals is just another reason to avoid doing significant calculations with numbers in that range. Subnormals exist to allow gradual underflow and should only occur rarely.

Printing the full 100+ digit value of a number is rarely needed. It’s interesting to understand how it works, but that’s about it.

It is important to know how many mantissa digits it takes to uniquely identify a float. If you want to round-trip from float to decimal and back to float (saving a float to an XML file for instance) then it is important to understand that nine mantissa digits are required. I recommend printf(“%1.8e”, f), and yes, this will perfectly preserve your floats. This is also important in debugging tools, and VS 2012 fixed the bug where the debugger only displays 8 mantissa digits for floats.

It can also be important to know what decimal numbers can be uniquely represented with a float. If all of your numbers are between 1e-3 and 8.58e9 then you can represent all seven digit numbers, but beyond that there are some ranges where six is all that you can get. If you want to round-trip from decimal to float and then back then you need to keep this limitation in mind.

Until next time

Next time I might cover effective use of Not A Numbers and floating-point exceptions, or general floating-point weirdness, or why float math is faster in 64-bit processes than in 32-bit /arch:SSE2 projects. Let me know what you want. I’m having fun, it’s a big topic, and I see no reason to stop now.

Doubles and other good ideas

%1.8e works neatly for printing a float with nine digits, but it always prints an exponent, whether it is needed or not. Using %g tells printf to use exponents only when needed, thus saving some space, and improving clarity, but reducing consistency. It’s your choice. Here then are the known alternatives for printing round-trippable floats and doubles.

printf(“%1.8e\n”, d); // Round-trippable float, always with an exponent

printf(“%.9g\n”, d); // Round-trippable float, shortest possibleprintf(“%1.16e\n”, d); // Round-trippable double, always with an exponent

printf(“%.17g\n”, d); // Round-trippable double, shortest possible

Aside: a double (53-bit mantissa) requires 17 digits of mantissa to uniquely identify all values.

A long double (64-bit mantissa) as used in the x87 register set requires 21 digits of mantissa to uniquely identify all values. Unfortunately the VC++ registers window only displays 17 digits for the x87 register set, making many values indistinguishable if you intentionally or accidentally generate x87 register values with more than 53 bits of mantissa, as can happen with fild.

Could you please elaborate the following a bit more?

“The number of mantissa digits needed to exactly print the value of a negative power of two is about N-floor(N*log(2)/log(10)), or ceil(N*(1-log(2)/log(10))) where N is an integer representing how negative our exponent is. “

The N*log(2)/log(10) part is the ‘standard’ calculation of how many decimal digits it takes to represent a base-2 number with N digits. For a positive number with N digits you would apply ceil() to that and be done. In this case we are using that formula to estimate how many leading zeroes you get when you convert 2^(-N) to decimal. I don’t have an elaborate proof, just some intuition and experimentation.

Meanwhile, the number of digits to the right of the decimal place (including zeroes) is simply N. So the calculation is DigitsToRightOfDecimal – LeadingZeroes or N-floor(N*log(2)/log(10)).

This article offers some related perspective:

http://www.exploringbinary.com/number-of-bits-in-a-decimal-integer/

thanks a lot for lengthy article, great info

they only problem i have is why do we have to measure precision in term of conversion from binary to digital?

we work in binary, and convert only to print them.. arent we?

Much of the information in this post is just curiosities, but it can be relevant. If floating-point data is stored in text form (handy for readability) then it is important to know how many digits must be used to avoid loss of information. Many products have made the mistake of just storing or displaying eight digits of mantissa, not realizing that sometimes this is insufficient. If my article convinces a few developers (the Visual Studio team!) to display the extra digit then it is worthwhile.

Also, when debugging extremely delicate calculations it can be handy to print the *actual* value of a number, in order to better understand why a calculation is going awry.

Hi

As said in another comment by Fabian https://randomascii.wordpress.com/2013/02/07/float-precision-revisited-nine-digit-float-portability/#comment-4742 people should start using printf(“%a”), as it’s format the exact hexadecimal representation of the double value, which is going to be read back using scanf(“%la”). It will be the same, exact, identical, value regardless of the architecture, endianness, compiler, operating system. By the time, you find the representation usable, readable and even be able to compare them without converting them to base 10.

Regards.

This is exactly what i was looking for, Thanks!

Do you think you’ll be showing an example of converting from a string to a float in another article? I don’t like using my compilers sprintf_s and sscanf_s functions because they don’t work the way i need them too, so i have created my own print and scan functions. The only ones that are left for me to create is ScanFloat and ScanDouble. Please e-mail me, ill be happy to work something out!

Don’t try writing your on string to float conversion functions — getting them right is famously difficult. For discussion of the many errors found in various conversion routines, and for links to well tested conversion routines, see:

http://www.exploringbinary.com

Or, just call printf/scanf to do the float conversion for you.

Thanks, IL check that out!

the PrintFullFloats.zip link is broken 😦

The link works for me. I know it doesn’t work from some work networks, so that might be the problem. Or the ISP that hosts that link might have had a blip — they do that occasionally. So, try again and/or try from home.

Bruce, the only reason guys like me can get on with writing awesome code, is because of guys like you. I bow to you sir, for treading these dark paths on behalf of all us lesser coders who merely follow, map in hand.

Thank you for a really interesting article.

I’m working in Flash/ActionScript, building a scientific calculator. Got to experimenting with floating-point numbers (AS only supports Double-precision floating point format, and 32-bit integers). First I was checking for a way to implement your version of nearly equal comparison function with ULPs. I made a class that write the double Number into a ByteArray then reads it back as two 32-bit integers, giving me access to the bits. Then I got curious if I can implement this digit-printing routine, so I ported your code to AS. Since I don’t have access to a 64-bit type you use for “Product_t”, I had to break every 32-bit uint into two 16-bit ones (still using 32-bit uints to store them), and have twice as many steps for division and multiplication. Amazingly, it worked correctly on the first run. Besides these lines which I can’t understand, for the life of me. Is this a typo or something?

result += ‘0’ + remainder;

And

result += ‘0’ + overflow;

What’s the ‘0’ for? If I run my code with “‘0’ +”, my results have an extra 0 next to every expected digit!

I can see how the ‘0’ + overflow paradigm could be confusing. The trick is that in C/C++ single quotes are different from double quotes. A single-quoted character is actually an integer constant whose value is the character code of the character. So, when overflow is added to the character constant for zero we get a character constant for one of the digits from zero to nine. That character constant is then added to the string.

It has to be done that way because automatic conversion from integers to strings is considered a bad thing in C/C++. It can be supported through operator overloading, but std::string does not support it.

So, in languages where you can just add overflow to a string and have it converted to its ASCII representation by the language the ‘0’ + sequence is indeed unnecessary.

Hey Bruce,

Thanks for your reply. Of course, I get it now. I used to program in C/C++ about 10 years, but since then I’ve gotten too much used to thinking about single and double quotes in the same way!

I’ve did some optimization to your code that I ported to ActionScript. For division, I skip the leading 0 words before doing the main for loop. For multiplication, I skip the trailing 0 words. Since I have to stay within 32-bit unsigned integer for every operation, I have to operate with 16-bit values, so my multiply and divide can only accept a number under 2^16. So when I get the digits, I divide and multiply by 10000 instead of 10, that way I’m getting 4 digits on each divide and multiply call. Together all of this makes the printing code over 4 times waster, important in an interpreted language such as ActionScript.

All of this started kind of like an exercise and exploration, inspired by reading your series of articles on floating point. Thanks for interesting reads! But during reading, I’ve discovered that the native number printing functions in ActionScript perform rounding incorrectly (and inconsistently), so I’m gonna adapt this printing code for use in my upcoming scientific calculator.

I want to ask you a question about a topic really interesting to me, I cant’t find much information on. It’s about rounding for printing purposes. I want my scientific calculator to mimic a Casio FX series scientific calculator. Example:

A user enters a value of 0.0012345 and presses “=” button. Internally, I get a Number (double) of 1.2344999999999999e-3 – the closest representation of the number entered.

If the calculator is in the “normal 2” display mode, it should display 0.0012345 on it’s 10-digit display. I do that by rounding half away from zero to 9 fractional digits. But the problem is, on Casio FX the normal mode should actually truncate the extra digits: e.g. 0.00123455599999 displays 0.001234555, not 0.001234556. But if I truncate the digits, 0.0012345 in my software calculator outputs 0.001234499. So what I’m trying now – I print 17 digits of the number as a string (no decimal point), then see if the last digits is >= 5 or not, and decide whether to round up or down. I perform the rounding by manipulating the digits as the characters of my string, and end up with a string, containing my number rounded to 16 significant digits. I then round second time (truncate for the normal mode) to a number of digits I need to fit on the display. Is that the way to go? Surely double rounding can create incorrect results, in theory. However with my choice of first rounding to 16 digits I’m just trying to get rid of inaccurate digits. Does that make sense? If not, what could be done here? Casio sure figured out some way.

Actually since reading your articles I’ve tried many things on that scientific calculator, and found out there must be many clever “hacks” they use to try and look more true to actual math than the floating point format is used in their hardware.

For example, I’ve really enjoyed your sin(pi)+pi idea. On the Casio, however, there is some hack about that. Of course, they want sin(pi) to return exactly 0. So:

sin(pi)=0

sin(pi+1e-11)=0

sin(pi+1-10)=-1e-10

sin(32*pi)=1.2e-10

On the other hand:

sin(1e-11)=1e-11

sin(1e-99)=1e-99

sin(1e-5)-1e-5=0 // Actually ~ -1.667e-16 – not that small!

So it looks like there’s a lot of hardcoded conditions in sin() of that calculator, like

if (x is nearly 0)

return x;

else if (x is nearly pi)

return 0;

else

return actual sin(x);

I guess same idea is used for tan(). On many software calculators, calculating tan(pi/2) is notorious for resulting in ~ 1.633e+16 instead of the expected division by zero error. Casio, however, correctly signals a Math Error on tan(pi/2). However, trying tan(21*pi/2) results in a number less than 1e10.

I’m considering whether I should follow the Casio way and use a bunch of clever hacks, nearlyEqual checks and special cases for every math function supported, or just offer the user the actual results produced, with all the limitations of the double precision?

Do you have suggestions on better ways to approach this issue?

Appreciate your input!

Generating many digits at a time (by dividing by 10000) is a great optimization. In Fractal eXtreme (http://www.cygnus-software.com/) I have to print and convert numbers that may be thousands of digits long so I divide by 1000000000 to get nine digits at a time.

Clever hacks to get the ‘correct’ numbers will probably lead to madness. For instance, the Windows calculator thinks that sqrt(4)-2 is non-zero, which is pretty tragic. I think you have two sane choices:

1) Correctly rounded double precision with wise choices about how many digits to print.

2) Correctly rounded base-10 floating-point. This is a bit more work — especially getting it correctly rounded in all cases — but this will sometimes give more intuitive results because, for instance, 1/5 can be represented. Average users don’t want to know about 1/5th being a repeating fraction, and they shouldn’t have to care.

Just my opinion of course.

Fractal eXtreme looks awesome!

sqrt(4) – 2 being not zero is definitely one of the crazy things I’d like to try to avoid in my calculator. But how…

What exactly do you mean by “correctly rounded”? I can control rounding when I’m printing numbers, and I guess I might have to write my own parseFloat() to parse numbers in the user input, but I have no control on rounding during calculations.

I’ve read about decimal64 format, is that what you mean? Do you think is it possible/feasible to implement in software (in ActionScript)?

Thanks for your opinion!

The basic operations (+, -, *, /, and square-root) in IEEE floating-point math are defined to be correctly rounded. With the default rounding mode of round-to-nearest-even that means that the answer that they return is required to be the closest possible result to the infinitely correct result. In other words it means that their results are perfect (given the finite precision of the formats). When correctly rounded sqrt(4) will give exactly 2. Microsoft’s calculator calculates sqrt(4) to 40 digits, which is impressive, and is more precision than IEEE doubles. But, it rounds incorrectly so sqrt(4) is wrong in the 40th digit. Oops.

Since IEEE sqrt is correctly rounded, sqrt(4) gives exactly 2.0. That means that fsqrt(4.0f), which only has ~7 digits of precision, ends up working better than the 40 digits of Microsoft’s calculator. The importance of getting the correct result (correctly rounded) was recognized decades ago, but Microsoft’s calculator reinvented the wheel and made it square.

decimal64 is one possibility. You can implement the basic math operations of base-10 math in any programming language. Getting correctly rounded +, -, *, / and sqrt isn’t too hard. Getting correctly rounded sin/cos/tan is *extremely* hard so you may need to punt on those and just go for ‘close enough’.

For another take on printing floating-point numbers see this excellent post:

http://www.ryanjuckett.com/programming/printing-floating-point-numbers/

Thanks for the link and for your knowledge. I guess I’ll just have to read the IEEE standard. I’m sure I can implement the basic arithmetic operations in base-10, however since I”m making a scientific calculator, it’s gonna support a lot more than that, implementing everything is gonna take a while, and I’m not sure the performance in ActionScript will be adequate. It’s one thing to use this kind of math just for printing the result of the calculation, another thing to use it for all the calculations. I guess I’ll just make the best use of the built-in Number type, and see how it goes. But surely implementing a base-10 math library would be quite fun.

http://dl.acm.org/citation.cfm?id=2837654 is another good (and recent) link. 🙂

The description looks promising, but paying $15 to read it is annoying.

Miguel, thanks for that reference! I’ve found the same article is available for free on the author’s university website: https://cseweb.ucsd.edu/~lerner/papers/fp-printing-popl16.pdf

I’ve written some testing code to check the number of reliable (accurate) digits for doubles.

The results match up with the theory you’ve explained. For normal numbers, a double has 53 bits of mantissa (1 bit implied), equivalent to around 15.955 decimal digits. Accounting for wobble, we can rely on 15 (15.655) digits in an arbitrary double, and require 17 (16.955) digits to round-trip an arbitrary double to decimal and back. My testing code confirmed this.

However, this got me thinking, so I’ve got a question for you.

Given an arbitrary double, is there a practical and easy way to determine (for a normal or subnormal number):

1) How many digits of this number are accurate?

2) How many digits of this number are required to make a successful round-trip to decimal and back?

For example I want a function that prints numbers with as much precision as necessary to uniquely identify them, but no more. So for an arbitrary double argument, my printing function should determine the minimum number of digits to print a round-trippable decimal.

My idea so far is (for normal numbers, haven’t considered subnormals yet):

1) Print the number itself, and the next and prior numbers 1 ULP away, to 17 digits, respectively into variables a, b, c. The numbers will have N matching leading digits, compare the (N+1)th digits of a, b, c. If they are in sequence (e.g. 5, 6, 7), the (N+1)th digit is reliable, so there are (N+1) reliable digits, otherwise (e.g. 4, 6, 7 or 5, 6, 8), there are N reliable digits.

2) Print the number itself, and the next and prior numbers 1 ULP away, to 16 digits, respectively into variables a, b, c. Compare the last digits of a, b, c. If they are all different (e.g. 5, 6, 7), 16 digits is enough precision to make a round-trip, otherwise (e.g. 6, 6, 7 or 5, 6, 6) 17 digits are required to make a round-trip.

However this way is not very fast, so I wonder how to approach it from a math standpoint.

Thanks!

You could try approaching it mathematically but you would then be at increased risk of bugs in various CRT implementations causing failures. I would recommend just using printf(“%.17g\n”, d); which should generally give the shortest round-trippable double, or close enough anyway.

I don’t know what do you mean by CRT.

That’s what I’m doing at the moment, having implemented my own printf in ActionScript.

However, in some cases a number which (omitting the exponent) should print like 5 (and is printed like this by ActionScript’s built-in toString()), is printed as 5.000…0001e. Checking the numbers around it, I can see that printing it to 16-digit would still be unambiguous.

By CRT I mean C RunTime library and by that I mean whatever language support library implements floating-point print functions.

Let me know if you figure out an elegant solution for minimizing digits. In my case I am often more concerned with consistency so I’m happy with a fixed number of digits.

I see. I’m thinking the question “how many digits are necessary to print to uniquely identify a number?” is related to the implementation of scanf (or parseFloat in case of ActionScript). How many digits is needed for a particular number to be correctly parsed from a string? After some testing I’ve found out that ActionScript’s parseFloat is even more inaccurate than it’s number-printing functions. For example, when I use the literal number 9.00000000000005e-235 in my AS code, and print it to 21 digits (maximum that AS allows), it’s 9.00000000000004990276e-235. But doing a parseFloat(‘9.00000000000005e-235’), printed to 21 digits, is 9.00000000000004431595e-235, which is 4 ULPs away from the correct nearest representable value.

That means I can forget about round-trippable doubles in AS! Unless I will write my own parseFloat implementation. I’ve heard advice to never “roll you own” scanf because there are really many issues. I could use the output of the AS parseFloat, print it with high enough precision, go 1 ULP towards the original number, print that one, then subtract the strings to find out how roughly many ULPs away is the parsed number, then add that many ULPs to that number. This might be more effective than trying to make my own decimal to binary converter?

In *theory* the question is only dependent on the design of the IEEE floats and doubles. In practice it *may* be dependent on how well scanf/ParseFloat are implemented.

That is *quite* disappointing that parseFloat gives different results from the compiler’s parser. You should file a bug about that.

It’s quite frustrating indeed. I’ve filed a bug about that, but Adobe is not quick at all about checking them. I guess if it’s not concerning mobile game development, which Adobe is focusing on for Flash platform these days, they might not care much about that for a long time. I’ll see what I can do by myself. After all, my scientific calculator wouldn’t care much about parseFloat speed, when evaluating a formula all that is necessary is just parsing a few floats once.

I’ve implemented a parseNumber function which uses parseFloat to get an initial candidate double. I then print the candidate digits (to 24 digits) and calculate the distance from the number in the string to parse, using my very limited high-precision big decimal class which stores numbers as a sign, a normalized decimal digits string (with decimal point implied after the first digit), and an exponent. I advance the candidate 1 ULP in the direction of the goal number, getting a second candidate. I calculate the difference between the first candidate and the new number, which gives me the magnitude of 1 ULP. I then divide the distance from the first candidate to the goal number, to find out how many ULPs away is it. If the number of ULPs can be rounded to the nearest integer with enough certainty, just advance the number by the rounded number of ULPs (-1 since already advanced one ULP), otherwise (number of ULPs is nearly half-way between two integers), get both candidates and choose the one whose absolute distance to the goal number is smaller. If the distances are equal, I chose the number closer to zero.

It’s much slower than the original parseFloat, but produces results identical to the compiler (at least for the numbers I’ve tested it on). For a scientific calculator I guess it’s worth to use it.

A similar approach could be used to determine how many digits are necessary to uniquely identify a particular number, but the performance will be slow as well. For my application it’s not really necessary feature so I’m gonna forget about it for now. I still think this question could be answered mathematically if we’re printing the digits ourselves (like your example high-precision digit printing code above), but I can’t wrap my head around it yet.

I think my math routines are now sufficient to continue on the actual UI of my scientific calculator. Your blog and your comments have been a real help, Bruce, I can honestly say if I didn’t find your articles, I wouldn’t implement these features at all, and would be still frustrated about I can’t print or round some numbers correctly.

Thanks.

–Gene

Sounds great! I recommend testing with known-hard numbers (see http://www.exploringbinary.com/) and with randomly generated 64-bit bit-patterns if you want to look for possible bugs.

Good idea, and thanks for interesting link!

So parseFloat failed on three of the numbers I’ve got from there (expected result on hte left, actual result on the right). At least it didn’t freeze (like some old versions of Java and PHP used to do, according to that website)…

assertion failed: 6.92949564460092000000e+15 == 6.92949564460091900000e+15

assertion failed: 2.22507385850720138309e-308 == 2.22507385850719990089e-308

assertion failed: 2.22507385850720088902e-308 == 2.22507385850719990089e-308

My correction function got all these numbers right.

I made a random double generator, for every number I print it to 17 digits using my own printing function, then convert back to number using parseFloat and my correction function.

First I checked the subnormal number range, interestingly parseFloat() worked correctly from the smallest subnormal number to about 3.1e-309. Starting around there, the number of inaccurate results goes up sharply.

I’m really curious what’s special about this number, looking at it’s bit pattern it doesn’t look like some interesting edge case!

So I hardcoded 3e-309 as the lower limit for my correction function – below that it just returns parseFloat().

Running the test code with randomly generated doubles I can see how many inaccurate results parseFloat() generates. And it happens at any exponent value (the further exponent is from 0, the more inaccurate is the result, when abs(exponent) is around 300, the results of parseFloat() are off by as much as 6 ULP (max I’ve seen so far)). My correction function so far produces accurate results, will leave the test routine running overnight…

Bruce,

I’ve read this (excellent) article before but was looking at it again while doing my own research…

If you had tested the full decimal exponent range (-38 to 38, instead of just -37 to 37) you would have found failures in the -38 range: 9.403961e-38, for example, converts to 9.40396153281…e-38, which round trips back as 9.403962e-38.

I also found one failure technically in the 28 range: 1e28. It converts to 9.9999994421…e27, which round trips back as 9.999999e27. It is the only “1e+/-n” input bordering a failing range to be so unlucky. (I would imagine in double-precision there would be more such examples.)

Hmmm. That sounds very odd. I assume you are referring to round-tripping of numbers printed with %1.8e? I tested all non-NaN numbers (I looped through the integer representations) and when I tested they all round-tripped in VC++ (from float to text and then back to the same float).

What VC++ version were you using? I probably used VC++ 2010.

I am talking about the other direction: text to float to text.

I had run this on VS 2010 but just repeated it on VS 2015. This code should prove the point:

float f = 1e28f;

printf(“%1.6e\n”, f); //Prints 9.999999e+27

This is the correct behavior and not a bug.

Sorry I wasn’t thinking — this is just an artifact of my test program. It blindly prints everything to 7 digits. 1e28 is one digit and of course would print OK to 6 digits. But my comment on the -38 range still stands.

Wait. Viewed as 1.000000e28 it is 7 digits, and it does not round trip. So I stand by my original comment, I wonder why your test program did not catch this.

What is the hexadecimal representation of the number, and what compiler version are you seeing a failure to round-trip with? ’cause I just checked and all numbers in that range work fine for me. I go:

sprintf_s(buffer, “%1.8e”, start.f);

then:

int count = sscanf_s(buffer, “%f”, &fRead);

and verify that I get the same number back. 100% success, with VS 2013.

It seems like we tested this in different ways, somehow getting almost identical results in identifying the less than 7-digit precision decimal exponent ranges: -35, -32, -22, -19, -16, -13, -10, -7, -4, 9, 12, 15, 18, 21, 24, 27, and 37. I detected those plus some in the -38 decimal exponent range (which you did not test) and one in the 28 decimal exponent range (which your test did not discover). I generated all 7 digit decimal strings, converted them to floats, and then converted them back to strings (%1.6e”). I had thought this was the way you’d done it as well. (I also did tests in the other direction — the “greater than 8-digit precision test” — where I loop through all floats, convert to text with “%1.7e”, and then convert back. But I did not comment on that part.) I don’t know how you tested both directions originating from floats and using “%1.8e”.

Sorry, I was just being dense. I never actually tested the round-tripping to seven digits. That was just theory. Interesting that it fails. Is that a breakdown in theory, or (I’m assuming) a bug in the VC++ CRT?

It’s not a bug, and it matches theory (but unexpected at first glance). 1e28 is at a border where the decimal gap size (for 7 digits) exceeds the binary gap size (for 24 bits) again, so it looks like it should have 7 digits of precision. But the closest float to 1e28 is less than 1e28, so that brings it into a “doesn’t have 7 digits” range at exponent 27. (This scenario sets up at several other power of 10 borders, e.g. 1e38, but the resulting conversions happen to be close enough to round back correctly to 7 digits.)

I’m less concerned about that direction. Correctly rounded float-to-text is definitely nice to have, but not as critical as float-to-text-to-float. But, I do believe that perfection is attainable and we should expect it.

Bruce, I’ve written a related article at http://www.exploringbinary.com/decimal-precision-of-binary-floating-point-numbers/ — please take a look.

Excellent work as always. That’s a great analysis.

Regarding the discussion about wobble, Goldberg’s article defines wobble as being between

(1/2)*(Base)^(-precision) and ((Base)/2) * ((Base)^(-precision)).

For a float with 24 bits precision, shouldn’t the wobble be between 2^(-25) == 1/33554432 and 2^(-24) == 1/16777216 instead of 2^(-23) == 1/8388608 and 2^(-24) == 1/16777216.

As always, thanks for the great articles.

Yeah, that makes sense. So, between 0.5/8388608 and 0.5/16777216. I fixed the article because I think that is correct.

Thanks. On a related point…

Under the section Representing decimals: 6-7 digits, you mention that “At 8.589930e9 a float’s relative precision is 1/16777216 but at 8.589974e9 it is just 1/8388608”.

Since 2^33 == 8.589934592e9,

Then 8.589930e9 will be near the end of the range [2^32, 2^33) which should give a relative precision of about 1/(2^25) == 1/33554432.

And 8.589974e9 will be near the start of the range [2^33, 2^34) which should give a relative precision of about 1/(2^24) == 1/16777216.

However, isn’t the inability to represent 8.589973e9 not so much related to the different relative precisions at 8.589930e9 and 8.589974e9 (since every power of 2 range has the same range of relative precision), but as you mention in the next paragraph, more to do with the reduction in precision by a factor of 2 as one moves from [2^32, 2^33) to [2^33, 2^34) .

I guess “relative precision” is a slightly vague term – should the numerator be 1.0 or 0.5? That is, should the numerator be the gap between numbers or the maximum error?Goldberg defines wobble, but is that the same as defining relative precision? I’m not sure.

When you cross an exponent boundary the gap between numbers (precision/error in absolute terms) doubles, and the relative precision halves – they both get worse. I think that which one is the “cause” of the inability to represent 8.589973e9 depends on how you phrase your argument. Both?

I think the main factor in the inability to represent 8.589973e9 is due to the precision/gap size in the range [2^33, 2^34) where 8.589973e9 resides.

It is the gap size that results in the relative error, which is calculated as the difference between 8.589973e9 and 8.589973504e9, divided by 8.589973e9.

As Goldberg puts it,

“If the result of a calculation is the floating-point number nearest to the correct result, it still might be in error by as much as .5 ulp. Another way to measure the difference between a floating-point number and the real number it is approximating is relative error, which is simply the difference between the two numbers divided by the real number…

Since numbers of the form d.dd…dd × (Base^e) all have the same absolute error, but have values that range between (Base^e) and ((Base^e) × Base), the relative error ranges between … (1/2)*(Base)^(-precision) and ((Base)/2) * ((Base)^(-precision)).

In particular, the relative error corresponding to .5 ulp can vary by a factor of (Base). This factor is called the wobble.”

Thus, from what I understand, relative error is calculated for a single float representation within the range [2^n, 2^(n+1)) whereas wobble refers to the range of the relative errors of the floats within the range [2^n, 2^(n+1)).

Where you mention that “At 8.589930e9 a float’s relative precision is 1/16777216 but at 8.589974e9 it is just 1/8388608”, the relative error/precision at 8.589974e9 can be calculated specifically as

|(8.589973504e9-8.589974e9)| / 8.589974e9 =(approx.) 5.8e-8

which is within the wobble range for floats – 1/16777216 (at start of power of 2 range) to 1/33554432 (at end of power of 2 range).

As to whether the numerator should be 1.0 or 0.5, Goldberg defines “the relative error ranges (for the wobble as being) between ((Base/2) * (Base^(-p)) * (Base^e)/(Base^e) and ((Base/2) * Base^(-p)) * (Base^e)/Base^(e+1). So, strictly speaking the numerator, which is the 1/2 ULP term, should be

(Base/2) * (Base^(-p)) * (Base^e)

After the reduction of the relative error expressions where Base == 2, whether you would like to consider the numerator as 1 or 0.5 is purely algebraic I think.

One caveat about “%g” is that if the floating point number can be represented as an integer then it will be written without a “.”. For example, printf(“%17g”, 42.0) produces “42” instead of “42.0”. In some applications this may mess with the round tripping because the string gets parsed as an integer instead of as a double.

One workaround is to manually add a “.0” at the end of the string if the generated string looks like an integer (a sequence of digits, possibly prefixed by a “-” sign).