I recently saw a blog post covering how Sleep(n) works on various Windows operating systems and it showed slight variations in sleep times, between and within operating systems. The unanswered questions were:

- Why does the sleep time vary between operating systems?

- Why does the sleep time vary between calls?

In this post I make an educated guess about the first question and I use xperf (of course) to investigate the second.

The short answer is that:

- The Sleep() implementation was changed (improved, in my opinion) between XP and later versions of Windows

- Sleep() itself is ridiculously reliable, but OS load causes thread wakeup to be sometimes be slightly delayed

That’s it.

Sleep Implementation on Windows

The portion of the scheduling system that implements Sleep() does so based on a timer interrupt that fires at a regular interval. When you call Sleep(n) the operating does not schedule your thread to be woken up ‘n’ milliseconds later. Instead it chooses which timer interrupt would be the best fit (more on this later) and schedules your thread to be readied at this time.

On my laptop the timer interval varies between 0.5 ms and 15.6 ms, with 15.6 ms being the default.

In other words, if you call Sleep(1) in a loop then it is quite possible that your thread might only be readied every 15.6 ms. If you call Sleep(1) at some random time then the delay before your thread is readied can be quite unpredictable.

Traditionally the Linux kernel also uses this periodic timer system for scheduling, however a tickless kernel is available now.

Know your frequency

Many games and multi-media programs increase the timer frequency by calling timeBeginPeriod(1). This requests that a timer interval of no longer than one milisecond.

Note: reducing the timer interval has an adverse affect on battery life by preventing the system from going idle. It is particularly important that programs which may sit idle for long periods of time – paused video/music players, minimized programs, background programs, etc. – don’t raise the timer frequency when they don’t need it.

Obviously you can’t run tests on the behavior of Sleep() without knowing what the timer interval is. You might think that calling timeBeginPeriod(1) would give you sufficient knowledge, but it does not. Calling timeBeginPeriod(1) ensures that the timer interval is no more than one millisecond – but it could be less. When I got anomalous results during initial testing I checked to see what my system’s clock resolution was using the clockres tool from sysinternals. These were my results:

c:\>clockres

ClockRes v2.0 – View the system clock resolution

Copyright (C) 2009 Mark Russinovich

SysInternals – http://www.sysinternals.comMaximum timer interval: 15.600 ms

Minimum timer interval: 0.500 ms

Current timer interval: 0.500 ms

Note that the timer interval is 0.5 ms. You can often use “powercfg /energy -duration 5” to figure out who has raised the timer frequency – look for Platform Timer Resolution in the report web page to find out who has requested a higher timer resolution. In my case VirtualBox had lowered the interval to 0.5 ms, so I shut it down before resuming my tests.

This demonstrates an important aspect of the Windows timer interrupt: it is a global resource. The timer interval will be the minimum value selected by all the running programs. One program that reduces the interval will reduce battery life for the entire system, and will affect the Sleep() behavior of every other program on the system. As the original author noted, you can also use timeGetDevCaps to query the current timer frequency.

Operating system differences

I said earlier that the Sleep() chose which interrupt-time would be the best fit for a specific call to Sleep() but I glossed over what ‘best fit’ means. Because the wakeup times are quantized the system necessarily has to decide whether to round up, round down, or round to nearest – there will never be an interrupt which is exactly the desired time. The documentation for Sleep() used to say that the thread would sleep for at least the specified length of time, so Windows XP rounds up. The documentation now says that the thread may sleep for less than the specified length of time – and indeed Windows 7 and Windows 8 round down.

This variation is most obvious if you set the timer interval to 1 ms and then call Sleep(1) in a loop. On Windows XP the round-up behavior means that your thread will be readied every two milliseconds – the next interrupt will be less than one ms away and therefore ineligible. On Windows 7/8 the round-down behavior means that your thread will be readied every millisecond.

I greatly prefer the Windows 7/8 behavior because it allows for waking up every ms which is impossible with Windows XP.

The trivial test on Windows XP proves that it is rounding up – when you call Sleep(1) in a loop you are typically calling it less than 0.01 ms after the timer interval and the rounding up to two interrupts later means you are sleeping for over 1.99 ms. However on Windows 7/8 it is not so obvious whether the logic does round-down or perhaps round-to-nearest. So I changed my test loop to look like this:

for (int i = 0; i < 1000; ++i)

{

Sleep(1);

BusyWait(0.9);

}

BusyWait is a simple function that spins in a loop for the requested number of milliseconds. When I ran this test the average time per iteration barely budged. This test showed that Sleep(1) on Windows 7 still readies my thread on the next timer interrupt, even when it is less than 0.1 ms away. Fascinating. I didn’t test on Windows 8 because I am lazy and because I don’t have such a machine at hand but I assume that its results would be similar.

Readied does not equal running

Timer interrupts are, by their very nature, extremely stable and precise. They happen at exactly the requested frequency, and your thread is readied immediately when they fire. So, on all Windows operating systems you can assume that Sleep() readies your thread at exactly the correct time (rounded up or down). So, where do the variations come from? Why does careful measurement show that the interval is sometimes a few tenths of a millisecond longer or shorter?

The answer is that even an idle Windows system is still running other code. Some of this other code may also be triggered by the timer interrupt which means that there will sometimes be a lot of processes that all want to run at exactly the same time. Even on a multi-core system this can lead to short delays, especially if some cores are parked or if the Windows scheduler is trying to maintain core affinity.

Either way, when your thread is readied that just means that it is ready to run. It may not actually get a chance to run immediately. If another thread gets to run first then you will get a slightly longer interval. If your thread is only delayed by a few tenths of a millisecond then the next loop iteration will be shorter – you’re already closer to the next timer interrupt.

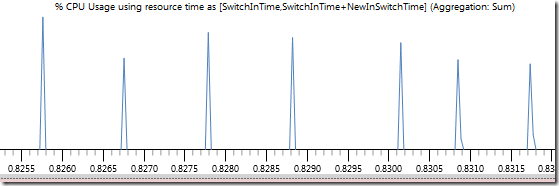

This behavior can be seen in this screen shot of WPA’s CPU Usage (Precise) graph. The units on the x-axis are seconds and we can see the monotonic regularity of our test process – until one tick at around 0.8300 is delayed, causing a longer interval followed by a shorter interval:

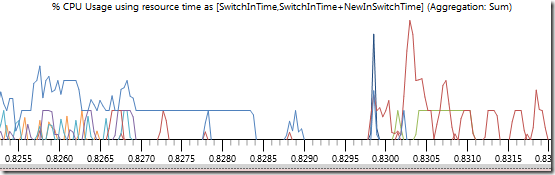

My system wasn’t particularly idle so when I turn off filtering you can see the many other threads that are running. However it turns out that none of them interfered with our high-priority thread:

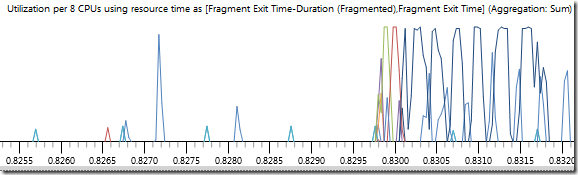

The DPC/ISR graph shows what happened. A series of interrupts and DPCs started up right when our thread was scheduled to run, so it had to wait. Even a high-priority thread is no match for an interrupt.

Aside: if you look closely at the graph above you can see the timer interrupt’s regular heartbeat – it showed up on time, even if the thread ran late.

(For more information on thread states such as Waiting, Ready, and Running see The Lost Xperf Documentation–CPU Scheduling)

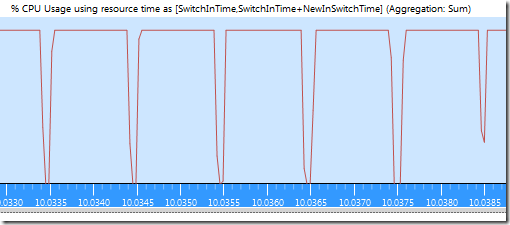

The CPU Usage (Precise) graph with BusyWait(0.9) looks like this – our thread finishes its BusyWait(), calls Sleep(1), and is then readied just a fraction of a millisecond later:

The increased variance of wakeup times on Windows 7 and then Windows 8 could be from higher system load, it could be from higher system load, or it could be from timer coalescing – each version of Windows tries to merge timers more aggressively so that the system can go to sleep longer, which would lead to higher timer variance.

Don’t call Sleep(0)

No discussion of Sleep() is complete without reminding developers that calling Sleep(0) is evil. It allows another thread of equal priority to run but (due to dynamic priority adjustment) you can never be sure that such a thread exists. And if it did there is still no sensible reason to use Sleep(0). Sleep(0) is morally bankrupt and should never be used on Windows, because it has no well defined purpose and it often leads to busy waiting.

(See my article “In Praise of Idleness” for more thoughts on why you shouldn’t busy wait)

Show us the code

It’s not science unless the code is shared to allow others to reproduce the results. Here’s the batch file I used to record traces:

xperf -on PROC_THREAD+LOADER+DPC+INTERRUPT+CSWITCH

SleepFrequency

xperf -stop -d sleeptest.etl

@rem Display the timer interval

clockres

Here’s the code for the test program. I didn’t bother measuring the length of each interval because I knew I could extract that from the trace.

#include <Windows.h>

#include <stdio.h>// Link with the winmm library for timeBeginPeriod

#pragma comment(lib, “winmm.lib”)// Return the time in seconds as a double.

double GetTime()

{

LARGE_INTEGER frequency;

QueryPerformanceFrequency(&frequency);

LARGE_INTEGER time;

QueryPerformanceCounter(&time);

return time.QuadPart / double(frequency.QuadPart);

}// Sleep the specified number of milliseconds.

void BusyWait(double msTime)

{

double endTime = GetTime() + msTime * 0.001;

while (GetTime() < endTime)

;

}int main(int argc, char* argv[])

{

timeBeginPeriod(1);

SetThreadPriority(GetCurrentThread(),

THREAD_PRIORITY_TIME_CRITICAL);const int kCount = 2000;

double start = GetTime();

for (int i = 0; i < kCount; ++i)

{

Sleep(1);

BusyWait(0.9);

}

double elapsed = GetTime() – start;

printf(“%f ms per tick.\n”, elapsed * 1000.0 / kCount);

}

This was really interesting to read because I’ve never seen the change in Sleep() behaviour mentioned before, thanks! It was also useful to learn about clockres utility, it showed me that on my machine ATI control center (ccc.exe) has changed the timer resolution to 1ms. Notice that I had to close Sysinternals Process Explorer (which many other readers of this blog probably run too) to find this using powercfg as it kept giving “Could not open the NT Kernel Logger. The NT Kernel Logger is already in use.” error until I did it.

You should try complaining to ATI about their control center changing the timer resolution. An always running application like that is exactly the sort of application that should leave the timer interval alone. On my laptop it looks like lowering the timer interval from 5 ms to 1 ms wastes about 0.3 W. In addition to the battery life impact that probably wastes a few MW of power, if ATI’s control center is at all widely deployed. This is a case where a software fix can save far more power than all the light bulbs I’ll ever turn off in my life.

Channel 9 explains it here (in part): http://channel9.msdn.com/Shows/Defrag-Tools/Defrag-Tools-26-WinDbg-Semaphores-Mutexes-and-Timers

I’m not a huge fan of watching videos to learn tech information — 51 minutes is a lot of time to invest for a topic that I mostly know already — but I appreciate the link. windbg tips are always useful.

Illuminating article. I had used ClockRes in the past and have now grabbed the 2.0 version. I was not aware of powercfg’s logging ability. That was also illuminating.

Bad news – plenty of poorly-behaved software. Google’s a big culprit. List of typically-running applications that lower the time interval on my system (Workstation, but still…):

Google Talk Plugin (5ms) – likely always active whenever GMail is open anywhere

Google Chrome (1ms) – Just one tab open in one instance (for JIRA GreenHopper)

Twitter Client (1ms) – It’s even a .NET app! Came via wpfgfx_v0400.dll

SIP phone application (1ms) – If it really needs it, should probably only set it during an active call

svchost.exe (10ms) – Strange. No services listed and I stopped the one that did request 1ms

It’s interesting that foobar2000 is not in that list, playing or not. While playing, it does request that the system not automatically enter sleep. So does the Realtek audio driver.

Thanks for sharing your results. I just tested with Chrome while running on battery and it still lowers the timer resolution. That is incredibly bad. That means (according to some quick measurements) that having a single empty Chrome tab wastes about 0.3 W on my laptop. Bad, bad, bad.

On my laptop I just found that Visual Studio 2012 and Starry Night had requested a 1 ms timer interval. Starry Night does this every time it is run, while Visual Studio does it when it uses wpfgfx_v0400.dll — I’m not sure how to trigger that. It looks like WPF might have a design bug. Windows Live Photo Gallery requests a 10 ms timer interval whenever it plays a video, and never resets it. That is sloppy.

Can you do anything about the chrome right now or provide an insight?

I dug into the code, and header (https://github.com/chromium/chromium/blob/c37f2d0f2eca5ba9bb1af333266cbb418ce66d04/base/time/time_win.cc) implies that it should only be used while not on battery, but after unplugging my laptop, it still requests 1ms interval

How do you know that Chrome is requesting a 1 ms interval? Note that the latest Windows 10 seems to toggle the timer frequency ~15 times a second. This causes clockres and TimerTool to claim the timer frequency is 1 kHz, while powercfg says it is 64 Hz. It’s a kernel timer interrupt which is apparently different so it’s not clear that this matters other than making clockres and TimerTool useless.

I was checking using powercfg as described in the article. I tracked it down PID to google play music. Seems to be coming from Google Play Music for Chrome extensions. Once play page is closed, chrome begins to request 4ms interval

Huh. I’ll take a look, but since Microsoft seems to have stopped caring (the results from clockres are apparently meaningless now) I’m not sure what I should do.

I was never intended to imply that you have to do anything. You are much more knowledgeable than me and since windows itself breaks this rule and there is no point, then whatever. I just read this article and run the tools, to see is it still the case. Although I should have provided more details from the start. Even if it is not the case anymore, at least I found that my trackball tray app sets timer to 1ms, and learnt to “NOP” the call with IDA (with no negative effects), which is I am grateful to you for.

The Windows audio subsystem often requests a high timer interrupt frequency whenever audio is being played. The details depend on your hardware, application type (Win32 versus UWP). audio latency requested, OS version, and other details. Given the lack of documentation it is almost impossible to tell whether what is happening is by design or not, unfortunately.

This is probably the wrong place to complain about this but the Steam client also locks my laptop timer to 1KHz as soon as it starts until it exits.

Also, launched games all have their main thread’s ideal processor set to 0:0. It’s not really important, but I wonder what’s up with that.

I don’t think the ideal processor means much — it seems to change frequently as a process runs, although I could be wrong.

Setting the timer to 1 KHz is bad, however. I’ll investigate that and try to make sure that stops, at least when Steam is minimized.

Setting the timer to 1 KHz was a bug that was introduced about six months ago. A fix has been checked in but will take a while to propagate out. Thank you for the report.

The fix is out. The steam client no longer modifies the timer resolution.

Pingback: timeGetTime versus GetTickCount | Random ASCII

looks like googletalk sets it to 1KHZ to.

That’s unfortunate. I’m assuming that google talk doesn’t use very much CPU time (probably 10% or less of a core?) which means that waking up every ms probably is wasting power.

Microsoft’s quartz.dll, part of the DirectShow Runtime, also sets it to 1 KHz. I hit this fairly frequently when browsing with IE 10. I have not yet figured out exactly what triggers it — some multimedia I suppose.

I guess I found a small error in your text.

In the forth paragraph under “Operating system differences” you wrote: “The trivial test on Windows XP proves that it is _rounding down_ …”.

It should be “rounding up”, shouldn’t it?

Thanks for sharing your insights!

Fixed — thanks.

Pingback: Windows Timer Resolution: Megawatts Wasted | Random ASCII

The tickless kernel link is broken now, but that doesn’t matter as the Linux kernel has been able to provide good sleep accuracy without it due to support for High Resolution Timers. See http://elinux.org/High_Resolution_Timers for more details.

Good job

Only small remark on QueryPerformanceCounter function

(From http://msdn.microsoft.com/en-us/library/windows/desktop/ms644904(v=vs.85).aspx )

”’ On a multiprocessor computer, it should not matter which processor is called. However, you can get different results on different processors due to bugs in the basic input/output system (BIOS) or the hardware abstraction layer (HAL). To specify processor affinity for a thread, use the SetThreadAffinityMask function.””

So be careful when you use it to compute duration (as i understand two calls are required but you don’t know which proc will be called)

If I bought a computer and found that QueryPerformanceCounter was not working then I would return it. SetThreadAffinityMask is a hack that does not work in general because threads need to be able to migrate. SetThreadAffinityMask is okay for a toy benchmark, but if QueryPerformanceCounter doesn’t work then real programs, like games, will be affected.

Don’t accept broken hardware.

Pingback: In Praise of Idleness | Random ASCII