For years (decades?) one of the most requested features in Visual C++ has been better support for debugging optimized code. Visual Studio’s debug information is so limited that in a program that consists just of main(argc, argv) the VS debugger can’t accurately display argc and argv in an optimized build. All I wanted was for Visual Studio to not lie to me about the value of local variables and function parameters in optimized builds.

It turns out that Microsoft shipped this feature in Visual Studio 2012, but forgot to tell anyone. This could be the most important improvement to Visual Studio in years but it’s been almost top-secret.

Update: Visual Studio 2013 Update 3 makes this feature official! It’s now under /Zo (that’s lowercase ‘o’, not zero or uppercase ‘o’, despite what the VS blog says). It works really well, as long as you don’t accidentally check the option that quietly disables this awesome feature (more details in the update at the end).

Updated update: Visual Studio 2015 makes this feature even more official. Now optimized debugging can even coexist with Edit-and-continue!

So download VS 2013 Update 3 or higher and give /Zo a try. However, be aware that the VS debugger is still missing support for one handy enhanced debugging feature. For that you need to debug with windbg, which can actually show you virtual callstack entries from inline functions when you build with /Zo. This is a big deal. Stepping through overloaded operators and STL functions will never be the same again. This screenshot is from windbg, stepping through three layers of inlining:

It’s beautiful.
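
To make "three layers of inlining" concrete, here is a hypothetical snippet (not code from this post) with an overloaded operator that calls a helper, which calls another helper. An optimizing compiler will very likely inline all three into the caller, although that is its decision, not a guarantee. Built with /Zo, code shaped like this is where windbg's virtual call-stack entries for inlined frames pay off.

```cpp
#include <algorithm>

// Hypothetical type and helpers, for illustration only.
struct Color {
    int r, g, b;
};

// Layer 3 (innermost): clamp one channel to the range [0, 255].
inline int ClampChannel(int value) {
    return std::min(std::max(value, 0), 255);
}

// Layer 2: add two channels with saturation.
inline int AddChannel(int a, int b) {
    return ClampChannel(a + b);
}

// Layer 1: the overloaded operator that user code actually calls.
inline Color operator+(const Color& lhs, const Color& rhs) {
    return Color{AddChannel(lhs.r, rhs.r),
                 AddChannel(lhs.g, rhs.g),
                 AddChannel(lhs.b, rhs.b)};
}

// A caller in which all three layers are likely to be inlined in an
// optimized build; stepping into the '+' walks down through each layer.
int BrightenRed(int red) {
    Color base{red, 0, 0};
    Color boost{100, 0, 0};
    Color result = base + boost;
    return result.r;
}
```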

Okay, back to the original article:

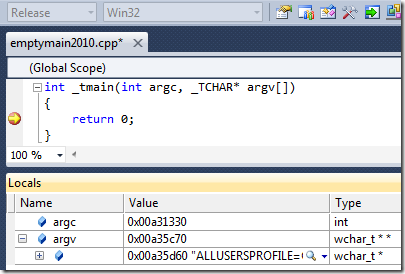

Here’s what Visual Studio 2010 looks like by default when you debug the simplest possible project with the default release-build optimizations. I don’t understand why VS has such trouble with this program given that argc and argv are quite plainly sitting on the stack. I don’t think that argc is really 0x00a31330.

Note: looking at the stack shows that the debugger is looking for the variables four bytes away from where they are located. So close…

Before somebody fires up Visual Studio 2012 and tells me that it lies just as much as previous versions let me clarify my claim. Visual Studio 2012 ships with support for optimized debugging, but this support is off by default.

For reasons that I can only speculate about (perhaps the feature wasn’t considered done?) the compiler support for this feature is hidden behind a cryptic and undocumented compiler command line switch – /d2Zi+ (now exposed as /Zo). The default release builds of VS 2012 behave identically to VS 2010, but once you add the magic command-line switch and rebuild then things start to look a lot more sensible:

If your Internet attention span has been exceeded already then feel free to stop now. Just remember to upgrade to Visual Studio 2012 or beyond and add /d2Zi+ (/Zo) to your compiler command line. The next time you are looking at a crash dump from a customer, a release-only bug, or you just happen to be debugging optimized code, you’ll thank yourself for doing this preparatory work.

This feature is important enough to justify upgrading Visual Studio. I wish I’d realized it was there six months ago. Better late than never.

Optimized debugging of main() is not particularly compelling, but the feature works well beyond that. Here is a shot of the locals window while debugging Fractal eXtreme after doing the upgrade and /d2Zi+ dance. The displayed values are now accurate where before they were wrong, and the debugger now knows when variables have been optimized away:

Note that there is a difference between “optimized away” and “out of scope”. In an optimized build a variable may cease to exist before the end of its scope. This might look like a flagrant violation of the C++ standard, but the “as-if” rule permits this vital optimization.
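
A small sketch of the distinction, using a hypothetical function: `square` stays in scope until the closing brace, but its last use is on the line that computes `result`. After that an optimizer is free to reuse its register, so a debugger with accurate information should report it as optimized away rather than show a stale value. Exactly where a particular compiler drops it is not something this sketch can promise.

```cpp
// Hypothetical example: 'square' is in scope for the whole function body,
// but in an optimized build its lifetime may end much earlier.
int SumAfterSquare(int n) {
    int square = n * n;        // last use of 'square' is on the next line
    int result = square + 1;
    // 'square' is still in scope here, but its value is no longer needed,
    // so the optimizer may have discarded it. With accurate debug info the
    // debugger can say "optimized away" instead of showing garbage.
    for (int i = 0; i < n; ++i) {
        result += i;
    }
    return result;
}
```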

VS 2010 together with windbg had some modest support for this capability, but it really didn’t work well. This is much better.

Details schmetails

The /d2Zi+ switch has actually been mentioned previously, by Sasha Goldstein in 2011 and by Andrew Hall in 2013. It’s not an officially documented flag, so it could go away at any moment, but hey, carpe diem, and debug optimized code while the iron is hot. I’m assuming that it will become more official looking in some future release – I don’t think they’ll actually take it away.

Sasha’s 2011 article talks about how the enhanced debug information tracks variables better but suggests that the built-in C++ debugger in Visual Studio doesn’t support the enhanced information (update: because at the time it didn’t). That’s why I missed this crucial feature initially, despite seeing Sasha’s blog post. You don’t need to use windbg – the default VC++ debugger works fine.

Andrew Hall’s post points to some other capabilities of /d2Zi+ – it records inlining information that the profiler can use to attribute time to functions. That’s pretty cool, but currently the only debugger that supports that extra information is windbg.

TANSTAAFL, so there’s gotta be a catch. The optimized debugging feature works by storing additional information in the .pdb files – presumably tracking when variables move to a new memory address or register, and when they disappear. This extra information takes space, so you should expect your PDB files to get larger when compiling with /d2Zi+. I haven’t done exhaustive tests, but a quick analysis of eight PDBs showed an average size increase of… honestly it was so small that I couldn’t separate it from the noise. Part of that noise may be because PDB files never shrink: if you don’t delete them between links then your measurements will be invalid. Your mileage may vary. The generated code should be unchanged – my tests show no differences.
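
If you want to repeat the size comparison, the important detail is deleting the old PDBs before each link so that you measure freshly written files. Here is a minimal sketch of the arithmetic, assuming C++17 `std::filesystem`; the function names are hypothetical.

```cpp
#include <cstdint>
#include <filesystem>

// Percentage growth from 'before' bytes to 'after' bytes.
// Returns 0.0 when 'before' is zero rather than dividing by zero.
double PercentIncrease(std::uintmax_t before, std::uintmax_t after) {
    if (before == 0) return 0.0;
    return (static_cast<double>(after) - static_cast<double>(before)) * 100.0 /
           static_cast<double>(before);
}

// Compares two freshly linked PDBs, built without and with /Zo (hypothetical
// paths supplied by the caller). Throws std::filesystem::filesystem_error
// if either file is missing.
double PdbGrowthPercent(const std::filesystem::path& withoutZo,
                        const std::filesystem::path& withZo) {
    return PercentIncrease(std::filesystem::file_size(withoutZo),
                           std::filesystem::file_size(withZo));
}
```

Averaging `PdbGrowthPercent` over several projects gives the kind of number discussed above; a result within a percent or so of zero is consistent with “lost in the noise”.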

Upgrading

Upgrading Fractal eXtreme was trivial. I loaded the VS 2010 solution file into VS 2012 and said yes when it asked if I wanted to upgrade. The code compiled with no errors or warnings. After verifying that release-mode debugging was still a poor experience I added /d2Zi+. As if by magic I started being able to see what was going on. Optimized code is still weird – inlining, constant propagation, and code rearrangement are essential complexities of this task – but at least the accidental complexity is now mostly removed.

Upgrading large projects at work is much more complicated, but as far as upgrades go it’s really not bad. I’m quite glad that they kept the project file format consistent – the diffs are quite small. It also helped that all of our code at work is compiled (but not linked) with VC++ 2012 every day, as described at Two Years (and Thousands of Bugs) of Static Analysis.

It’s worth pointing out that while Visual Studio 2012 will upgrade the .vcxproj files, it does not modify the .sln files. This means that when you double-click the .sln file it still opens in Visual Studio 2010, which doesn’t know how to use the v110 (VS 2012) toolset. You can fix this by opening the .sln file and changing the third line from “# Visual Studio 2010” to “# Visual Studio 2012”. That’s it.
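
The edit is small enough to script. This is a minimal sketch, assuming the solution file has already been read into a string; the function name is hypothetical. It replaces only a line that reads exactly `# Visual Studio 2010` (tolerating a Windows `\r` line ending) and leaves every other line untouched, mirroring the one-line change described above.

```cpp
#include <sstream>
#include <string>

// Hypothetical helper: rewrites the "# Visual Studio 2010" line of a .sln
// file's text to "# Visual Studio 2012". Every output line ends with '\n'.
std::string UpgradeSlnVersionLine(const std::string& sln) {
    std::istringstream in(sln);
    std::string out;
    std::string line;
    while (std::getline(in, line)) {
        // Preserve CRLF line endings if the file uses them.
        bool hasCr = !line.empty() && line.back() == '\r';
        std::string body = hasCr ? line.substr(0, line.size() - 1) : line;
        if (body == "# Visual Studio 2010") {
            body = "# Visual Studio 2012";
        }
        out += body;
        if (hasCr) out += '\r';
        out += '\n';
    }
    return out;
}
```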

(for details on fractal math optimizations on modern CPUs see the fractals section)

Windows XP

The default Visual Studio 2012 toolset (v110) generates code that won’t run on Windows XP. You have to use the v110_xp toolset for that, after downloading the appropriate VS 2012 update. Alas, the v110_xp toolset doesn’t support /analyze, so I’ve configured our project creation system to select the right toolset for the job. Be sure to automate this boring task.
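
The selection rule can be sketched as a tiny function. This is a hypothetical illustration, not the actual project creation system: it assumes that /analyze builds are compile-only checks that never need to run on Windows XP, so they can use v110, while builds that must run on XP use v110_xp.

```cpp
#include <string>

// Hypothetical build-configuration flags, for illustration only.
struct BuildConfig {
    bool mustRunOnXp;  // output binaries have to work on Windows XP
    bool runAnalyze;   // this build exists to run /analyze
};

// Picks a VS 2012 platform toolset name for a build configuration.
std::string ChooseToolset(const BuildConfig& config) {
    if (config.runAnalyze) {
        return "v110";     // v110_xp doesn't support /analyze
    }
    if (config.mustRunOnXp) {
        return "v110_xp";  // v110 output won't run on Windows XP
    }
    return "v110";
}
```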

gdb and gcc

For the record, yes, I am aware that gcc/gdb already offers a comparable experience. As a Windows developer it’s good to see Visual Studio catching up. And I do like having the registers/locals/disassembly/memory/call-stack windows updating as I step through the code.

There goes the neighborhood

I’m quite capable of dropping down to assembly language to see what is really going on. There is something satisfying about tracking the flow of execution and data while you’re on the trail of a cool bug.

But my god, what a waste of time. Tracking bugs this way is inefficient, and it reduces the pool of developers who are qualified to look at crash dumps coming in from customers. I’d fear being put out of work by these newly sophisticated computers, but somehow I think I’ll find something else to work on.

VC++ improvement requests

Earlier this week I asked people to vote on the two VC++ improvements that I think are most important. I would have included optimized debugging on the list except that I found out last week that it was already supported. So, no point putting it on the list. But, it’s not too late to vote for getting the most valuable /analyze warnings in the regular compile.

Reddit discussion is here – vote it up if you want others to know about this.

Update, August 2014

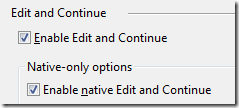

A lot of people, myself included, have found that the support for optimized debugging in VS 2013 is flaky. For a while I assumed that they had broken some aspects of it, but when Update 3 came out and the feature was still unreliable I filed a bug. The response from Microsoft was basically “it works on my machine”. That meant it was time to get scientific. I asked a bunch of my coworkers to try the test project, and I got back a stream of reports that the feature was indeed broken. And then one solitary voice in the wilderness said “it works on my machine”. With on-site proof that it did work we investigated and found the crucial difference. The option that quietly disables this awesome feature is… Edit and Continue.

If you enable native Edit and Continue in the debugger (which is separate from compiling with it) then debugging of optimized code stops working. In other words, if you check the two boxes to the right then the optimized debugging experience will be as painful as it has been for decades. If you leave one of them unchecked then… Nirvana.

I should have guessed. I’d previously found that this setting chooses between autoexp.dat and .natvis support, so I already knew that it had non-obvious side-effects.

It appears that when you enable native Edit and Continue you are actually selecting a different, older, debugging engine. This old debugging engine supports Edit and Continue and autoexp.dat. The new debugging engine supports .natvis visualizers and optimized debugging information.

Update: with VS 2015 this problem goes away. Edit and Continue and Optimized Debugging now coexist. See the comment from Ramkumar Ramesh.

It also appears (thanks to Jeremy in the comments) that if you set the Debugger Type to “Mixed” instead of “Native-Only” then you also get the old debugging engine, and therefore no support for the optimized debug information.

So, the advice is clear:

- Compile with /Zo (or /d2Zi+ if on VS 2012) in all of your optimized builds

- Use Edit and Continue if you want

  - If debugging optimized code then make sure native Edit and Continue is disabled so you get the enhanced optimized debugging experience, and use .natvis files for visualizing complex data structures

  - If debugging non-optimized code then enable Edit and Continue if you want to use it, and use autoexp.dat for visualizing complex data structures

It’s clunky, but it works.
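
These interactions are easy to lose track of, so here is a sketch that encodes them as a function. It only summarizes the observations in this post and its comments (before VS 2015: native Edit and Continue or a “Mixed” debugger type selects the old engine; VS 2015: native compatibility mode does). It is not an official Microsoft rule, and the type and function names are hypothetical.

```cpp
// Hypothetical summary of the debugger settings discussed in this post.
struct DebugSettings {
    int vsVersion;               // e.g. 2013 or 2015
    bool compiledWithZo;         // /Zo (or /d2Zi+ on VS 2012)
    bool nativeEditAndContinue;  // "Enable native Edit and Continue"
    bool mixedDebuggerType;      // "Mixed" rather than "Native-Only"
    bool nativeCompatMode;       // VS 2015 native compatibility mode
};

// True if, per the observations above, the legacy debug engine is used.
bool UsesLegacyEngine(const DebugSettings& s) {
    if (s.vsVersion >= 2015) {
        // Only native compatibility mode is covered for VS 2015; this post
        // doesn't say how "Mixed" behaves there, so it is ignored.
        return s.nativeCompatMode;
    }
    return s.nativeEditAndContinue || s.mixedDebuggerType;
}

// True if the debugger should be able to use the optimized debug info.
bool OptimizedDebuggingWorks(const DebugSettings& s) {
    return s.compiledWithZo && !UsesLegacyEngine(s);
}
```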

I wrote that this isn’t supported by the Visual Studio debugger because at the time of writing, it wasn’t 🙂 I’m pretty sure I was using Visual Studio 11 Beta at the time. I’m very happy to discover that it works now.

I made a minor update to reference that. Thanks for commenting.

Nice find!

Any idea how this works in Visual Studio 2013? Is it still there? Is it turned on by default?

Andrew Hall’s post suggests that it is still there, still off by default, with the same switch to turn it on. I’ve installed VS 2013 RC but I haven’t tested this.

It was actually added in VS2010 SP1. I’ve asked about it being turned on by default, and the answer is that the pdb size increase is enough of a problem for key customers that they are keeping it off by default. I’ve put in a couple of requests to get it at least documented, and made into a real compiler flag. Hopefully your post will help with that, since you seem to get things fixed by posting about them.

I think that /d2Zi+ was added in VS 2010, but the VS 2010 debugger (with or without SP1) has no support for actually using the information. And, the debug information emitted by VS 2010 SP1 must be limited-or-buggy because when I compile with VS 2010 SP1 with /d2Zi+ and then debug with VS 2012 I get a poor experience.

In short, my tests show that you need to compile and debug with VS 2012, with /d2Zi+.

I’ll have to do more PDB size tests since I don’t believe the numbers from my first tests.

I used the functionality to my great delight on several Xbox 360 titles. I guess the 360 compiler team could have been ahead of devdiv in supporting the new pdb file format.

What debugger were you using?

We’ve been using /d2Zi+ in 2010 for a while with windbg, and this gave some optimized debugging improvements, but it was not accurate enough that I felt like recommending it.

Fractal Extreme looks cool. Do you have any plans of creating a purely fragment shader based one?

The shallow zooms in Fractal eXtreme, where double/long-double precision is sufficient, are already ridiculously fast on modern CPUs. The performance bottleneck when exploring fractals comes when you zoom in deep and need hundreds or thousands of bits of precision. Unfortunately that maps poorly to GPUs. So, 64-bit math on the CPU is what I must use for deep zooms, and the GPU doesn’t offer much.
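
For a rough idea of why deep zooms favor 64-bit integer math: a number with hundreds of bits of precision is stored as an array of 64-bit words, and even addition has to ripple a carry from word to word. Here is a minimal sketch of that one operation, assuming an unsigned fixed-width representation; it is not Fractal eXtreme’s implementation, which isn’t shown here.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// An unsigned multi-precision number stored as N 64-bit words,
// least significant word first.
template <std::size_t N>
using BigNum = std::array<std::uint64_t, N>;

// Adds b to a, word by word, propagating the carry. Returns the carry out
// of the most significant word (1 on overflow, otherwise 0).
template <std::size_t N>
std::uint64_t AddInPlace(BigNum<N>& a, const BigNum<N>& b) {
    std::uint64_t carry = 0;
    for (std::size_t i = 0; i < N; ++i) {
        std::uint64_t sum = a[i] + b[i];
        std::uint64_t carry1 = sum < a[i] ? 1 : 0;   // wrapped on a[i] + b[i]
        std::uint64_t total = sum + carry;
        std::uint64_t carry2 = total < sum ? 1 : 0;  // wrapped on adding carry
        a[i] = total;
        carry = carry1 + carry2;  // at most one of the two can be 1
    }
    return carry;
}
```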

I’ve blogged about some of the optimizations used — take a look at:

https://randomascii.wordpress.com/category/fractals/

Visual Studio 2013 release candidate is available, maybe you can see if it is still present (or improved). I did try looking for myself, but it said I needed to upgrade my Windows before installing. I’m running Windows 8, so I can only assume it requires that Windows 8 SP1+ (Windows 8.1) be installed. I guess Windows 8.1 will be out by the time VS 2013 is officially released, and since Windows 8.1 is a free upgrade (being virtually a service pack) maybe they rightly assume you should be running it. Probably works on Windows 7 though.

This is only an issue with the release candidate version released last week. The earlier preview version was fine on Windows 8.

I installed VS 2013 RC on Windows 7, but I haven’t had a chance to try it.

The feature is present in VS 2013. It is still off-by-default and needs the cryptic command-line switch to enable it. I can’t tell if it is improved or not.

We immediately added this flag to our build toolchain and it has already proved useful. Hooray!

Hey Bruce,

Thanks for sharing this info! It’s a tremendously exciting tidbit of info! I have run into some problems where it works on some projects but not others. I’ve tried it now on 4 home projects and it’s only worked on 1. Three of the projects (one that it worked on) were small console applications built on VS12. Another is an older VS10 MFC app (converted, I did check the third line of the sln to ensure it’s 2012 now). This one didn’t work with the switch.

Would you expect it to be buggy given its lack of official support, or do you know of any other factors that I may need to resolve in order to make it work with all projects?

Thanks again for the info, I look forward to seeing if we can leverage this at work!

What do you mean by “it didn’t work”? There may be bugs and limitations that could cause optimized debugging to work imperfectly with some code constructs, but the idea of it failing completely on some projects seems illogical. You’ll have to be more specific about what is not working. Sharing a complete project is the best, since that provides a repro for Microsoft to investigate if the problem is real.

Note that checking the third line of the SLN is irrelevant. You need to make sure that the code is *compiled* with VS 2012 which is a project file attribute. Try printing _MSC_VER.

It may be that you have file-specific build settings which are trumping the project settings and thereby preventing /d2Zi+ from being passed along, but I’m just guessing.

Sorry for the lack of clarity. I didn’t want to add too much potentially unnecessary info in my original comment.

Fortunately this caused me to look back at it to verify the details before responding to your comment. It turns out it does work for each project, but what I found interesting is that in one of my older projects (converted from VC8) the data is very unreliable in certain areas of the codebase. For example, while my serialize function reads the data in, the current statement jumps around a bit (somewhat expected given the specific code) and occasionally the correct data appears: one of my variables shows kColorBlack (0), but a couple of lines later it reverts to -1275051, the invalid value it had before. Other parts of the code work just fine.

My other 2 projects had given me an error like “identifier ‘a’ is undefined”. However, upon further investigation I realized that even though the disassembly shows the variable, it’s set to the argc parameter and thus may be optimized away (in some way). I say “in some way” because I have a variable that I expected to see optimized away, and for that one the debugger says “Variable is optimized away and not available”. That made me think that ‘a’ was valid and the debugger simply didn’t track it properly. I changed this to read a user value from the console, and this showed that release did work.

Therefore, I take back my concern that it may just “not work” with an entire codebase. I see your point that some code constructs (such as the one I happened to test, in just this one codebase) may not work right with optimized debugging.

My _MSC_VER is 1700 in the project that was acting up. Also, I’ve never set a file-specific build setting in a home project, so I can confirm that’s not an issue I should be running into.

Thanks again Bruce!

Cool — thanks for the clarification.

I encourage you to make a stand-alone repro (a project that can build and run independently) of the bugs you are seeing in your serialize function. The goal for this feature is that the debugger should never lie about the value of a variable, and the VC++ team is interested in code that breaks this. You could also try downloading VS 2013 RC to see if it corrects the problem, but I don’t think that is necessary.

If we, collectively, report bugs in this feature then it increases the odds that it will work reliably when it finally becomes an official feature. It’s important.

BTW, I like this compile-time way of displaying the compiler version:

#define QUOTE0(a) #a

#define QUOTE1(a) QUOTE0(a)

#pragma message(__FILE__ "(" QUOTE1(__LINE__) "): Built with compiler version " QUOTE1(_MSC_VER))
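
If you would rather see the version at run time, or want a readable name for it, here is a small sketch. The mapping covers the releases discussed in this post (_MSC_VER 1600 is VS 2010, 1700 is VS 2012, 1800 is VS 2013, 1900 is VS 2015); the function names are made up for this example.

```cpp
#include <string>

// Hypothetical helper: maps an _MSC_VER value to its Visual Studio release.
std::string VisualStudioName(int mscVer) {
    switch (mscVer) {
        case 1600: return "Visual Studio 2010";
        case 1700: return "Visual Studio 2012";
        case 1800: return "Visual Studio 2013";
        case 1900: return "Visual Studio 2015";
        default:   return "unknown (_MSC_VER " + std::to_string(mscVer) + ")";
    }
}

// Describes the compiler that built this translation unit.
std::string CurrentCompiler() {
#if defined(_MSC_VER)
    return VisualStudioName(_MSC_VER);
#else
    return "not compiled with Visual C++";
#endif
}
```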

Do we know if this affects the code at all, or is it just the PDBs?

This does not affect the generated code at all. Using this switch just adds additional information to the PDBs to allow better debugging of exactly the same code.

Thanks, Bruce. This is good news, since there’s no way I was going to get anyone to go along with using an undocumented switch that affects code.

You can easily prove that /d2Zi+ doesn’t affect the code-gen by disassembling the generated DLLs before and after and observing that nothing changes.
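
One way to do that comparison is to dump the code from each build to a text file (for example with `dumpbin /disasm`) and diff the two listings. Here is a minimal sketch of the diff step, assuming the listings are already available as streams or strings; the function name is hypothetical, and header lines that name the dumped file will differ if the two binaries live at different paths.

```cpp
#include <istream>
#include <sstream>
#include <string>

// Returns the 1-based number of the first line that differs between two
// listings, or -1 if they are identical line for line.
long FirstDifferingLine(std::istream& a, std::istream& b) {
    std::string lineA;
    std::string lineB;
    for (long lineNo = 1;; ++lineNo) {
        bool gotA = static_cast<bool>(std::getline(a, lineA));
        bool gotB = static_cast<bool>(std::getline(b, lineB));
        if (!gotA && !gotB) return -1;    // both ended at the same point
        if (gotA != gotB) return lineNo;  // one listing is longer
        if (lineA != lineB) return lineNo;
    }
}

// Convenience overload for listings already loaded into strings.
long FirstDifferingLine(const std::string& a, const std::string& b) {
    std::istringstream streamA(a);
    std::istringstream streamB(b);
    return FirstDifferingLine(streamA, streamB);
}
```

For files on disk, open each listing with `std::ifstream` and pass the streams to the first overload.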

Well, I tried it, and it is helpful, though not perfect. I looked at a known case where an int is passed down through four functions; previously, only the top level showed the correct value, but now the top three do. It didn’t get quite to ground floor, but definitely an improvement.

Thanks for writing this up.

I believe Microsoft would like this to be perfect. We need to help them. If you find places where it is not (especially in VS 2013) then please create a minimal repro and file a bug on connect.microsoft.com.

> It’s now under /Zo (that’s lowercase ‘o’, not zero or uppercase ‘o’, despite what the VS blog says) and I haven’t tried the official version yet but wow!

It is still saying /Z0 (as zero) in the official 2013.3 announcement

It seems that the “Debugger Type” under Configuration Properties->Debugging (when you right-click on a project) needs to be set to “Native-Only”. If it’s set to mixed, you don’t get the enhanced debugging.

Thanks Jeremy — that’s very helpful. I guess selecting Mixed is another way of quietly requesting the old debug engine.

Try disabling the “Use Managed Compatibility Mode” flag in Debugging -> General. I think that will reactivate the new engine under mixed-mode debugging.

I get no debugging info in VS 2012 at run time when I stop at a breakpoint. It neither shows me anything when I hover the mouse over an object nor lets me drag and drop it into the “Watch1” window. Any idea? Or should I forget this Microsoft product?

Sorry, can’t help you. It worked nicely for me, but there are things that can go wrong. I recommend a stackoverflow post with lots of details (language, settings, maybe a complete project uploaded somewhere) so that people can make concrete suggestions.

Hey Bruce,

I work on C++ EnC/Visual Studio debugger and thought I’d suggest a correction to your otherwise excellent blog post.

With VS 2015, the “Enable Edit and Continue (Native)” option no longer resurrects the legacy debug engine, since C++ EnC is now implemented in the default debug engine (along with x64 EnC). There’s a new option, “Enable Native Compatibility mode”, that does this instead, so EnC no longer has any impact on /d2Zi+; it’s now “native compat mode” that invalidates this.

Thanks,

-Ramkumar

Visual Studio Debugger

Thanks for the update, and that’s great news. I’ll update the blog post to better reflect this new and improved reality.