When I run into a problematically slow program I immediately reach for a profiler so that I can understand the problem and either fix it or work around it.

This guidance applies even when the slow program is a profiler.

And so it is that I ended up using Windows Performance Toolkit to profile Windows Performance Toolkit. Again. And not for the last time. The good news is that once again I was able to learn enough about the problem to come up with a very effective workaround (now available in UIforETW) in the Traces list context menu, as Strip chrome symbols, easily extendable for other problematic PDBs.

I’ve previously documented slowdowns and unreliabilities with WPT symbol loading in Xperf Symbol Loading Pitfalls. Today’s blog post documents a new problem with slow WPT symbol loading.

And, it appears that I’m not the only person hitting new WPT/WPA symbol loading slowdowns (details here):

What’s my motivation?

I recently moved to Google where I’m working on Chrome for Windows. It wasn’t long before I fired up xperf (aka Windows Performance Toolkit or WPT) to see what Chrome’s performance looked like. I profiled the public build and used the public symbol server to automatically download full symbols.

(you can find the Chrome symbol server at https://chromium-browser-symsrv.commondatastorage.googleapis.com, changed from http for greater security)

I soon noticed that Windows Performance Analyzer (WPA) was taking a very long time to load the symbols for chrome.dll and chrome_child.dll. The symbols for these two DLLs were taking a total of about 25 minutes to be loaded which was making using the profiler quite cumbersome.

WPT translates symbols into .symcache files in c:\symcache so that subsequent uses of the same symbols are much faster.

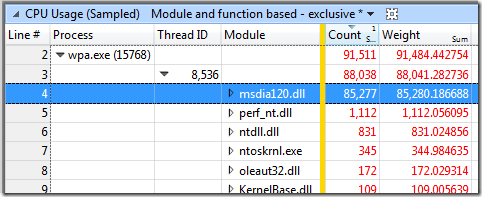

25 minutes is plenty enough time to investigate so I recorded another profile during this time – profiling the profile viewer. This meta-profile showed that the vast majority of the wpa.exe main-thread samples – 97% of them in the screenshot below – were in the main thread inside of msdia120.dll – which is different from the previous problem:

The first time I analyzed this I found that almost all of the msdia120.dll samples were in Mod1::TranslateFileId, called through SymCachePdb::LoadInlineeLines.

Tracking source file numbers for inlined functions is something that only happens if you enable enhanced debugging information (previously enabled with /d2Zi+, now with /Zo, discussed in more detail here), so the initial evidence suggested that it was Chrome’s use of /d2Zi+ that was causing its symbols to take so long to be processed.

Aside: if /Zo is the problem then that makes this whole performance problem particularly annoying. My tests show that WPA uses a mapping between addresses and functions, and nothing else. It doesn’t make use of line-number information. But see the comments for where to view the inlining information in WPA.

As I continued to monitor this issue, talking to some people at Microsoft about it, I found that the results were actually variable. On some Chrome builds the symbols loaded quite quickly, and on another build the time was spent almost entirely in these three msdia120.dll functions:

(normally I use the stack view but Module/Function is handy to see what module and function the CPU is actually spending its time in)

The lack of consistency is peculiar, but not critical in this case. Without the source code to WPA or msdia120.dll there’s not much point in theorizing unless it leads to a workaround.

The workaround

Chrome’s PDB files are big – up to 775 million bytes.

Whether the slowdown is because of /d2Zi+ or just because Chrome’s PDBs are big doesn’t really matter. Either way, I thought that maybe making the PDBs smaller would reduce how long it took WPA to process them. The Windows debugger toolkit comes with pdbcopy.exe which can be used to strip private information (types, file names, line numbers, etc.) from PDBS so I decided to use that. And it worked.

I now have a Python script that does the following steps immediately after recording a trace:

- xperf -i trace.etl -a symcache -dbgid

- Scan the output from step 1 for references to chrome.dll and chrome_child.dll

- Use RetrieveSymbols.exe (source code here based on ideas discussed here) to download the symbols based on the guid, age, and pdbname recorded in step 2

- Use pdbcopy -p to copy the symbols to a temporary directory, stripping private information and making the PDB files ~85% smaller

- Set _NT_SYMBOL_PATH to point just to the temporary directory with the stripped symbols

- xperf -i trace.etl -symbols -a symcache -build

The invocation of the symcache action in step 1 gets xperf to dump information about all of the symbols referenced in the trace. That information gives us everything we need to retrieve the symbols from the Chrome symbol server.

(have I mentioned that I love symbol servers? Read Symbols the Microsoft Way for details)

The invocation of the symcache action in step 6 builds the .symcache files from the PDB files. During this step xperf can only see the stripped symbols and it processes them extremely quickly.

Old timings:

~25 minutes to process the Chrome symbols from within WPA

New timings:

29 seconds to pdbcopy and process the Chrome symbols prior to running WPA

50 times faster. Works for me. This fix, configured to run automatically for Chrome’s symbols if Chrome developer is checked in the Settings dialog, can be found in https://github.com/google/UIforETW, which is an open-source tool for recording and managing traces.

Command-line processing of ETW traces

Xperf has a number of options for command-line processing of ETW trace files. If you run “xperf -help processing” you can get a list of them. These xperf actions are documented here but the symcache action is missing. The documentation is pretty light so the lack of symcache documentation doesn’t make much difference.

The xperf processing commands have mostly been made obsolete by wpaexporter (example usage shown here) but they are still occasionally useful. I think the symcache and heap actions are the only ones I ever use now.

Observations

If the profilers or msdia120.dll were open source then this would have made my investigation easier and would have let me create a true solution.

However, profiling these closed source tools still let me come up with a workaround and the time savings (especially across other Windows developers at Google) will quickly justify the effort.

I think that this slow symbol loading represents both a bug in WPA (requesting data that it appears to not use) and in msdia120.dll (taking too long to load data). I’ve contacted the WPT team and filed a Visual Studio bug. The bug has some sample traces attached to make seeing the problem even easier. If you are hitting this issue or other MSDIA slowdowns then consider commenting on or voting on the bug.

Update: some useful comments to this post explain how WPA uses this extra information so it’s not actually a WPA bug. But WPA should have an option to not use this information since it is usually useless and very expensive to obtain.

WPT ships with a fairly old copy of msdia120.dll (built June 16, 2013). I tried using the VS 2013 Update 3 version (built June 25, 2014) but it didn’t seem to make any difference.

Working around this bug would be easier if there was a tool to translate a PDB file to a .symcache file. In fact there is such a tool, called symcachegen, documented here but it was never actually released 😦

The .symcache files made from stripped Chrome PDBs are about half the size as those made from non-stripped PDBs. It has been suggested that there might be extra value in those larger .symcache files. However I haven’t seen any extra value so until I see it I’ll go with 50x faster and 50% smaller as a nice consolation prize.

Oh man, so many stories about this.

We ask for inline symbols (as in, all the aliases that were inlined to a given RVA) because a certain someone whose name rhymes with Woe Caughlin asked for them, best as I recall. I think we show them in the text dump when you specify a magic switch to -symbols.

We didn’t give you the option of including/excluding them in .symcache files (much like line numbers) because I’ve historically been opposed to bimodal .symcaches; given how we generate them to shared locations that would just lead to loads of confusion.

So there isn’t really less we can do in WPA. Other improvements around threading have been in the works and we just need better ship vehicles to push them out through (also in the works).

DIA has an effective monopoly on the PDB file format and I don’t think anyone is being served well by this. Similarly, symsrv.dll has a monopoly on the symbol server/store and it’s also not helpful. Some tools like PerfView have private re-implementations of symsrv.dll and that leads to yet other tricky problems. That’s the downside of a closed ecosystem. (On the upside, these tools exist at all, I guess.)

Thanks for that perspective Robin.

I agree with the dangers of bimodal symcaches and the extra information would be harmless if there wasn’t the performance cost (I hadn’t noticed the larger .symcache files and don’t much care about them). And, I’d love to have WPA make use of the inlining information and line-number information — being able to jump to the relevant source file would be incredibly useful.

So, we just need the VS team to speed up DIA2 and WPA to use the extra information and I’ll be the happiest clam ever.

Failing that, an option to not request the extra information would sure be convenient, if that would solve the performance problem.

Right. The challenge is that WPA is using DIA in ways it wasn’t really built for (i.e. enumeration). It’s fascinating how long it can take to noodle through a ~100MB file (or 150MB, I think, was our benchmark: wininet.pdb) when you enumerate it through (more or less) lookup APIs.

I also don’t even want to know how much time we blow on BSTR creation/destruction due to the COM API but that’s for another day.

We’ve also discussed getting rid of .symcache’s and moving to PDBs directly, or allowing both. The trade-offs are immensely complex and Kyle Sabo (sure to comment on this shortly if it doesn’t cause his ulcers to flare up already) wrote a 10-15 page internal performance report on this topic.

The biggest challenge is getting anyone to buy into the notion that this overall problem is worth a few months of an engineer’s time. If I didn’t have to fight that fight you’d be rolling in the symbols today.

FWIW, breakpad routinely hits these same sorts of problems since it also iterates through PDBs to get all of the symbols. I’ve written various dia2 based tools to dump all symbols in order to find the biggest globals, most repeated globals, etc., so there’s definitely demand for enumeration.

But, I’ll talk more to the VS guys about that, now that I’ve got a bug filed.

There is an “Inlined Functions” column in the CPU Usage (Sampled) table that shows an alphabetical listing of all the functions that were inlined into the one currently being sampled. This isn’t especially useful for normal performance evaluation since WPA can’t determine which specific inlinee the current instruction once belonged too (especially after optimizations are applied), but it’s useful for debugging/evaluating PGO effectiveness (which I wrote about a while ago on my website).

The line numbers only show up in the dumper output with “-add_inline” option (or maybe it’s the “-add_pgodata” option? I forget), but there they will show up for each stack frame which makes them slightly more useful.

Thanks Kyle — that’s great information. I confirmed that the trace of my test app showed the inlined leaf function. It looks like it doesn’t show multiple levels of inlining or non-leaf inlining (should be determinable from an address since windbg does it), but I’ll take what I can get.

For those reading the comments at home, note that in order to find the Inlined Functions column you have to go to the View Editor and drag it over to the active area.

And Kyle’s excellent article on this is at http://kylesabo.azurewebsites.net/evaluating-pgo-training-with-wpa/

“The biggest challenge is getting anyone to buy into the notion that this overall problem is worth a few months of an engineer’s time.”

Where can we make our voices heard? We’re seriously hampered by this performance problem and it’s very frustrating because we don’t even know where to address our feedback.

Commenting on the bug I filed is probably a good start:

https://connect.microsoft.com/VisualStudio/feedback/details/1017787/msdia120-dll-handles-zo-pdb-files-extremely-slowly

Or, file another bug and link them.

Do the MS people understand that if this dev tool / file format was open sourced, annoyed developers of Bruce’s caliber would have fixed this already, with a pull request with full test coverage and benchmark numbers?

Zeev, what happens to that tool when the file format revs? Will you be around a year or three years from now to patch it up? Or does it become another thing that Microsoft then needs to maintain in addition to DIA?

That’s intended as a genuine question, not a rejection of the idea. I think open source is a beautiful thing, especially projects that demonstrate sustained investment over time (e.g. Boost), but the unglamorous maintenance work over time isn’t something I understand yet vis-à-vis volunteer work.

@Robin Giese: Microsoft would continue to maintain it, just as it does now. Why would that change just because it’s open source?

Being open source allows others to resolve issues for themselves easier and share the fixes with the maintainer (Microsoft in this case) or with anyone else who might be interested in applying the fixes to their own copy of the software even if the maintainer refuses to apply it to their codebase.

One possibility, I suppose, would be to extract just the basic information to .symcache files (so that the PDBs can be processed quickly and the .symcache files can be small) and use the PDBs if available for extra on-demand information.

But, I certainly don’t know all of the tradeoffs, and I would guess that Kyle’s 10-15 page report already considers this option.

I had to write a custom PDB parser for generating Breakpad symbols (https://github.com/luser/dump_syms) precisely for the reason that DIA*.dll is so monstrously slow.

Totally agree with Robin though, the fundamental problem with the PDB format itself is that it is not open source. I don’t particularly care about the implementation of DIA*.dll, but not having a clearly documented specification (like DWARF), so that anyone can write their own tools against the format, is a black mark against MS in my book.

Congrats on the job firstly! Looking forward to all the performance improvements you bring to Chrome to get it back performing again!

I love this post and how very inception of it, thanks for the write up, I remember when I was trying to profile it with WPA as was having hanging issues, and it became impossible so gave up, glad to see I’m not the only one!

Pingback: Xperf Symbol Loading Pitfalls | Random ASCII

Pingback: Profiling the profiler: working around a six minute xperf hang | Random ASCII

Pingback: UIforETW – Windows Performance Made Easier | Random ASCII

Pingback: The Lost Xperf Documentation–CPU sampling | Random ASCII

Pingback: ETW Central | Random ASCII

Hey Check out a new PDB2JSON symsrv I wrote hosted in Azure, documented on github (github.com/K2/Scripting) I wrote it initially to support CORECLR PDB (native) analysis on platforms other than Windows, it hosts and converts all PDB’s into a JSON format that can be used w/o worrying about DIA2SDK compatibility. The server also supports a PowerShell in-memory analysis tool 🙂