This post is a more carefully thought out and peer reviewed version of a floating-point comparison article I wrote many years ago. This one gives solid advice and some surprising observations about the tricky subject of comparing floating-point numbers. A compilable source file with license is available.

We’ve finally reached the point in this series that I’ve been waiting for. In this post I am going to share the most crucial piece of floating-point math knowledge that I have. Here it is:

[Floating-point] math is hard.

You just won’t believe how vastly, hugely, mind-bogglingly hard it is. I mean, you may think it’s difficult to calculate when trains from Chicago and Los Angeles will collide, but that’s just peanuts to floating-point math.

Seriously. Each time I think that I’ve wrapped my head around the subtleties and implications of floating-point math I find that I’m wrong and that there is some extra confounding factor that I had failed to consider. So, the lesson to remember is that floating-point math is always more complex than you think it is. Keep that in mind through the rest of the post where we talk about the promised topic of comparing floats, and understand that this post gives some suggestions on techniques, but no silver bullets.

Previously on this channel…

This is the fifth chapter in a long series. The first couple in the series are particularly important for understanding this point. A (mostly) complete list of the other posts includes:

- 1: Tricks With the Floating-Point Format – an overview of the float format

- 2: Stupid Float Tricks – incrementing the integer representation

- 3: Don’t Store That in a Float – a cautionary tale about time

- 3b: They sure look equal… – ranting about Visual Studio’s float failings

- 4: Comparing Floating Point Numbers, 2012 Edition (return *this;)

- 5: Float Precision–From Zero to 100+ Digits – non-obvious answers to how many digits of precision a float has

- 6: C++ 11 std::async for Fast Float Format Finding – running tests on all floats in just a few minutes

- 7: Intermediate Floating-Point Precision – the surprising complexities of how expressions can be evaluated

- 8: Floating-point complexities – some favorite quirks of floating-point math

- 9: Exceptional Floating Point – using floating point exceptions for fun and profit

- 10: That’s Not Normal–the Performance of Odd Floats – the performance implications of infinities, NaNs, and denormals

- 11: Doubles are not floats, so don’t compare them – a common type of float comparison mistake

- 12: Float Precision Revisited: Nine Digit Float Portability – moving floats between gcc and VC++ through text

- 13: Floating-Point Determinism – what does it take to get bit-identical results

- 14: There are Only Four Billion Floats–So Test Them All! – exhaustive testing to avoid embarrassing mistakes

- 15: Please Calculate This Circle’s Circumference – the intersection of C++, const, and floats

- 16: Intel Underestimates Error Bounds by 1.3 quintillion – the headline is not an exaggeration, but it’s not as bad as it sounds

Comparing for equality

Floating point math is not exact. Simple values like 0.1 cannot be precisely represented using binary floating point numbers, and the limited precision of floating point numbers means that slight changes in the order of operations or the precision of intermediates can change the result. That means that comparing two floats to see if they are equal is usually not what you want. GCC even has a (well intentioned but misguided) warning for this: “warning: comparing floating point with == or != is unsafe”.

Here’s one example of the inexactness that can creep in:

float f = 0.1f;

float sum;

sum = 0;

for (int i = 0; i < 10; ++i)

sum += f;

float product = f * 10;

printf("sum = %1.15f, mul = %1.15f, mul2 = %1.15f\n",

sum, product, f * 10);

This code tries to calculate ‘one’ in three different ways: repeated adding, and two slight variants of multiplication. Naturally we get three different results, and only one of them is 1.0:

sum=1.000000119209290, mul=1.000000000000000, mul2=1.000000014901161

Disclaimer: the results you get will depend on your compiler, your CPU, and your compiler settings, which actually helps make the point.

So what happened, and which one is correct?

What do you mean ‘correct’?

Before we can continue I need to make clear the difference between 0.1, float(0.1), and double(0.1). In C/C++ the numbers 0.1 and double(0.1) are the same thing, but when I say “0.1” in text I mean the exact base-10 number, whereas float(0.1) and double(0.1) are rounded versions of 0.1. And, to be clear, float(0.1) and double(0.1) don’t have the same value, because float(0.1) has fewer binary digits, and therefore has more error. Here are the exact values for 0.1, float(0.1), and double(0.1):

| Number | Value |

| 0.1 | 0.1 (of course) |

| float(.1) | 0.100000001490116119384765625 |

| double(.1) | 0.10000000000000000555111512312578270211815834045 41015625 |

With that settled, let’s look at the results of the code above:

- sum = 1.000000119209290: this calculation starts with a rounded value and then adds it ten times with potential rounding at each add, so there is lots of room for error to creep in. The final result is not 1.0, and it is not 10 * float(0.1). However it is the next representable float above 1.0, so it is very close.

- mul = 1.000000000000000: this calculation starts with a rounded value and then multiplies by ten, so there are fewer opportunities for error to creep in. It turns out that the conversion from 0.1 to float(0.1) rounds up, but the multiplication by ten happens to, in this case, round down, and sometimes two rounds make a right. So we get the right answer for the wrong reasons. Or maybe it’s the wrong answer, since it isn’t actually ten times float(0.1) !

- mul2 = 1.000000014901161: this calculation starts with a rounded value and then does a double-precision multiply by ten, thus avoiding any subsequent rounding error. So we get a different right answer – the exact value of 10 * float(0.1) (which can be stored in a double but not in a float).

So, answer one is incorrect, but it’s only one float away. Answer two is correct (but inexact), whereas answer three is completely correct (but appears wrong).

Now what?

Now we have a couple of different answers (I’m going to ignore the double precision answer), so what do we do? What if we are looking for results that are equal to one, but we also want to count any that are plausibly equal to one – results that are “close enough”.

Epsilon comparisons

If comparing floats for equality is a bad idea then how about checking whether their difference is within some error bounds or epsilon value, like this:

bool isEqual = fabs(f1 – f2) <= epsilon;

With this calculation we can express the concept of two floats being close enough that we want to consider them to be equal. But what value should we use for epsilon?

Given our experimentation above we might be tempted to use the error in our sum, which was about 1.19e-7f. In fact, there’s even a define in float.h with that exact value, and it’s called FLT_EPSILON.

Clearly that’s it. The header file gods have spoken and FLT_EPSILON is the one true epsilon!

Except that that is rubbish. For numbers between 1.0 and 2.0 FLT_EPSILON represents the difference between adjacent floats. For numbers smaller than 1.0 an epsilon of FLT_EPSILON quickly becomes too large, and with small enough numbers FLT_EPSILON may be bigger than the numbers you are comparing (a variant of this led to a flaky Chromium test)!

For numbers larger than 2.0 the gap between floats grows larger and if you compare floats using FLT_EPSILON then you are just doing a more-expensive and less-obvious equality check. That is, if two floats greater than 2.0 are not the same then their difference is guaranteed to be greater than FLT_EPSILON. For numbers above 16777216 the appropriate epsilon to use for floats is actually greater than one, and a comparison using FLT_EPSILON just makes you look foolish. We don’t want that.

Relative epsilon comparisons

The idea of a relative epsilon comparison is to find the difference between the two numbers, and see how big it is compared to their magnitudes. In order to get consistent results you should always compare the difference to the larger of the two numbers. In English:

To compare f1 and f2 calculate diff = fabs(f1-f2). If diff is smaller than n% of max(abs(f1),abs(f2)) then f1 and f2 can be considered equal.

In code:

boolAlmostEqualRelative(floatA,floatB,floatmaxRelDiff = FLT_EPSILON){// Calculate the difference.floatdiff =fabs(A - B);A =fabs(A);B =fabs(B);// Find the largestfloatlargest = (B > A) ? B : A;if(diff <= largest * maxRelDiff)returntrue;returnfalse;}

This function is not bad. It works. Mostly. I’ll talk about the limitations later, but first I want to get to the point of this article – the technique that I first suggested many years ago.

When doing a relative comparison of floats it works pretty well to set maxRelDiff to FLT_EPSILON, or some small multiple of FLT_EPSILON. Anything smaller than that and it risks being equivalent to no epsilon. You can certainly make it larger, if greater error is expected, but don’t go crazy. However selecting the correct value for maxRelativeError is a bit tweaky and non-obvious and the lack of a direct relationship to the floating point format being used makes me sad.

ULP, he said nervously

We already know that adjacent floats have integer representations that are adjacent. This means that if we subtract the integer representations of two numbers then the difference tells us how far apart the numbers are in float space. That brings us to:

Dawson’s obvious-in-hindsight theorem:

If the integer representations of two same-sign floats are subtracted then the absolute value of the result is equal to one plus the number of representable floats between them.

In other words, if you subtract the integer representations and get one, then the two floats are as close as they can be without being equal. If you get two then they are still really close, with just one float between them. The difference between the integer representations tells us how many Units in the Last Place the numbers differ by. This is usually shortened to ULP, as in “these two floats differ by two ULPs.”

So let’s try that concept:

/* Seehttps://randomascii.wordpress.com/2012/01/11/tricks-with-the-floating-point-format/for the potential portability problems with the union and bit-fields below.*/#include <stdint.h> // For int32_t, etc.unionFloat_t{Float_t(floatnum = 0.0f) : f(num) {}// Portable extraction of components.boolNegative()const{returni < 0; }int32_t RawMantissa()const{returni & ((1 << 23) - 1); }int32_t RawExponent()const{return(i >> 23) & 0xFF; }int32_t i;floatf;#ifdef _DEBUGstruct{// Bitfields for exploration. Do not use in production code.uint32_t mantissa : 23;uint32_t exponent : 8;uint32_t sign : 1;} parts;#endif};boolAlmostEqualUlps(floatA,floatB,intmaxUlpsDiff){Float_t uA(A);Float_t uB(B);// Different signs means they do not match.if(uA.Negative() != uB.Negative()){// Check for equality to make sure +0==-0if(A == B)returntrue;returnfalse;}// Find the difference in ULPs.intulpsDiff =abs(uA.i - uB.i);if(ulpsDiff <= maxUlpsDiff)returntrue;returnfalse;}

This is tricky and perhaps profound.

The check for different signs is necessary for several reasons. Subtracting the signed-magnitude representation of floats using twos-complement math isn’t particularly meaningful, and the subtraction would produce a 33-bit result and overflow. Even if we deal with these technical issues it turns out that an ULPs based comparison of floats with different signs doesn’t even make sense.

Even a ridiculously small number, like 1e-30 has an ‘large’ integer representation of 228,737,632. So, while 1e-30 is ‘close’ to zero and ‘close’ to negative 1e-30 it is a gargantuan distance from those numbers in ULPs.

After the special cases are dealt with we simply subtract the integer representations, get the absolute value, and now we know how different the numbers are. The ‘ulpsDiff’ value gives us the number of floats between the two numbers (plus one) which is a wonderfully intuitive way of dealing with floating-point error.

One ULPs difference good (adjacent floats).

One million ULPs difference bad (kinda different).

Comparing numbers with ULPs is really just a way of doing relative comparisons. It has different characteristics at the extremes, but in the range of normal numbers it is quite well behaved. The concept is sufficiently ‘normal’ that boost has a function for calculating the difference in ULPs between two numbers.

A one ULP difference is the smallest possible difference between two numbers. One ULP between two floats is far larger than one ULP between two doubles, but the nomenclature remains terse and convenient. I like it.

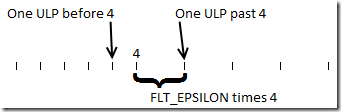

ULP versus FLT_EPSILON

It turns out checking for adjacent floats using the ULPs based comparison is quite similar to using AlmostEqualRelative with epsilon set to FLT_EPSILON. For numbers that are slightly above a power of two the results are generally the same. For numbers that are slightly below a power of two the FLT_EPSILON technique is twice as lenient. In other words, if we compare 4.0 to 4.0 plus two ULPs then a one ULPs comparison and a FLT_EPSILON relative comparison will both say they are not equal. However if you compare 4.0 to 4.0 minus two ULPs then a one ULPs comparison will say they are not equal (of course) but a FLT_EPSILON relative comparison will say that they are equal.

This makes sense. Adding two ULPs to 4.0 changes its magnitude twice as much as subtracting two ULPs, because of the exponent change. Neither technique is better or worse because of this, but they are different.

If my explanation doesn’t make sense then perhaps my programmer art will:

ULP based comparisons also have different performance characteristics. ULP based comparisons are more likely to be efficient on architectures such as SSE which encourage the reinterpreting of floats as integers. However ULPs based comparisons can cause horrible stalls on other architectures, due to the cost of moving float values to integer registers. These stalls generally happen when doing float calculations and then immediately doing ULP based comparisons on the results, so keep that in mind when benchmarking.

Normally a difference of one ULP means that the two numbers being compared have similar magnitudes – the larger one is usually no larger than 1.000000119 times larger than the smaller. But not always. Some notable exceptions are:

- FLT_MAX to infinity – one ULP, infinite ratio

- zero to the smallest denormal – one ULP, infinite ratio

- smallest denormal to the next smallest denormal – one ULP, two-to-one ratio

- NaNs – two NaNs could have very similar or even identical representations, but they are not supposed to compare as equal

- Positive and negative zero – two billion ULPs difference, but they should compare as equal

- As discussed above, one ULP above a power of two is twice as big a delta as one ULP below that same power of two

That’s a lot of notable exceptions. For many purposes you can ignore NaNs (you should be enabling illegal operation exceptions so that you find out when you generate them) and infinities (ditto for overflow exceptions) so that leaves denormals and zeros as the biggest possible problems. In other words, numbers at or near zero.

Infernal zero

It turns out that the entire idea of relative epsilons breaks down near zero. The reason is fairly straightforward. If you are expecting a result of zero then you are probably getting it by subtracting two numbers. In order to hit exactly zero the numbers you are subtracting need to be identical. If the numbers differ by one ULP then you will get an answer that is small compared to the numbers you are subtracting, but enormous compared to zero.

Consider the sample code at the very beginning. If we add float(0.1) ten times then we get a number that is obviously close to 1.0, and either of our relative comparisons will tell us that. However if we subtract 1.0 from the result then we get an answer of FLT_EPSILON, where we were hoping for zero. If we do a relative comparison between zero and FLT_EPSILON, or pretty much any number really, then the comparison will fail. In fact, FLT_EPSILON is 872,415,232 ULPs away from zero, despite being a number that most people would consider to be pretty small.

For another example, consider this calculation:

float someFloat = 67329.234; // arbitrarily chosen

float nextFloat = 67329.242; // exactly one ULP away from ‘someFloat’

bool equal = AlmostEqualUlps( someFloat, nextFloat, 1); // returns true, numbers 1 ULP apart

Our test shows that someFloat and nextFloat are very close – they are neighbors. All is good. But consider what happens if we subtract them:

float diff = (nextFloat – someFloat); // .0078125000

bool equal = AlmostEqualUlps( diff, 0.0f, 1 ); // returns false, diff is 1,006,632,960 ULPs away from zero

While someFloat and nextFloat are very close, and their difference is small by many standards, ‘diff’ is a vast distance away from zero, and will dramatically and emphatically fail any ULPs or relative based test that compares it to zero.

There is no easy answer to this problem. If you think you have found an easy and generic answer to this problem then you should re-read the material above.

The most generic answer to this quandary is to use a mixture of absolute and relative epsilons. If the two numbers being compared are extremely close – whatever that means – then treat them as equal, regardless of their relative values. This technique is necessary any time you are expecting an answer of zero due to subtraction. The value of the absolute epsilon should be based on the magnitude of the numbers being subtracted – it should be something like maxInput * FLT_EPSILON. Unfortunately this means that it is dependent on the algorithm and the inputs. Charming.

The ULPs based technique also breaks down near zero for the technical reasons discussed just below the definition of AlmostEqualUlps.

Doing a floating-point absolute epsilon check first, and then treating all other different-signed numbers as being non-equal is the simpler and safer thing to do. Here is some possible code for doing this, both for relative epsilon and for ULPs based comparison, with an absolute epsilon ‘safety net’ to handle the near-zero case:

boolAlmostEqualUlpsAndAbs(floatA,floatB,floatmaxDiff,intmaxUlpsDiff){// Check if the numbers are really close -- needed// when comparing numbers near zero.floatabsDiff =fabs(A - B);if(absDiff <= maxDiff)returntrue;Float_t uA(A);Float_t uB(B);// Different signs means they do not match.if(uA.Negative() != uB.Negative())returnfalse;// Find the difference in ULPs.intulpsDiff =abs(uA.i - uB.i);if(ulpsDiff <= maxUlpsDiff)returntrue;returnfalse;}boolAlmostEqualRelativeAndAbs(floatA,floatB,floatmaxDiff,floatmaxRelDiff = FLT_EPSILON){// Check if the numbers are really close -- needed// when comparing numbers near zero.floatdiff =fabs(A - B);if(diff <= maxDiff)returntrue;A =fabs(A);B =fabs(B);floatlargest = (B > A) ? B : A;if(diff <= largest * maxRelDiff)returntrue;returnfalse;}

Catastrophic cancellation, hiding in plain sight

If we calculate f1 – f2 and then compare the result to zero then we know that we are dealing with catastrophic cancellation, and that we will only get zero if f1 and f2 are equal. However, sometimes the subtraction is not so obvious.

Consider this code:

sin(pi);

It’s straightforward enough. Trigonometry teaches us that the result should be zero. But that is not the answer you will get. For double-precision and float-precision values of pi the answers I get are:

sin(double(pi)) = +0.00000000000000012246467991473532

sin(float(pi)) = -0.000000087422776

If you do an ULPs or relative epsilon comparison to the ‘correct’ value of zero then this looks pretty bad. It’s a long way from zero. So what’s going on? Is the calculation of sin() really that inaccurate?

Nope. The calculation of sin() is pretty close to perfect – in recent glibc versions it is perfect. Even Intel’s fsin instruction, which gives an answer with just a few bits of precision in this case, still gets the correct order of magnitude. The problem is not with sin()/fsin – the problem lies elsewhere. But to understand what’s going on we have to invoke… calculus!

For a deeper analysis of Intel’s fsin instruction see Intel Underestimates Error Bounds by 1.3 quintillion

But first we have to acknowledge that we aren’t asking the sin function to calculate sin(pi). Instead we are asking it to calculate sin(double(pi)) or sin(float(pi)). Since pi is transcendental and irrational it should be no surprise that pi cannot be exactly represented in a float, or even in a double.

Therefore, what we are really calculating is sin(pi-theta), where theta is a small number representing the difference between ‘pi’ and float(pi) or double(pi).

Calculus teaches us (since the derivative of sin(pi) is negative one) that, for sufficiently small values of theta, sin(pi-theta) == theta. Therefore, if our sin function is sufficiently accurate we would expect sin(double(pi)) to be roughly equal to pi-double(pi). In other words, sin(double(pi)) actually calculates the error in double(pi)! This is best shown for sin(float(pi)) because then we can easily add float(pi) to sin(float(pi)) using double precision:

| float(pi) | +3.1415927410125732 |

| sin(float(pi)) | -0.0000000874227800 |

| float(pi) + sin(float(pi)) | +3.1415926535897966 |

If you haven’t memorized pi to 15+ digits then this may be lost on you, but the salient point is that float(pi) + sin(float(pi)) is a more accurate value of pi than float(pi). Or, alternately, sin(float(pi)) tells you nothing more than the error in float(pi).

Woah. Dude.

Again: sin(float(pi)) equals the error in float(pi).

I’m such a geek that I think that is the coolest thing I’ve discovered in quite a while.

Think about this. Because this is profound. Here are the results of comparing sin(‘pi’) to the error in the value of ‘pi’ passed in:

sin(double(pi)) = +0.0000000000000001224646799147353207

pi-double(pi) = +0.0000000000000001224646799147353177

sin(float(pi)) = -0.000000087422776

pi-float(pi) = -0.000000087422780

Wow. Our predictions were correct. sin(double(pi)) is accurate to sixteen to seventeen significant figures as a measure of the error in double(pi), and sin(float(pi)) is accurate to six to seven significant figures. The main reason the sin(float(pi)) results are less accurate is because operator overloading translates this to (float)sin(float(pi)) which rounds the result to float precision.

I think it is perversely wonderful that we can use double-precision math to precisely measure the error in a double precision constant. Visual C++ 2015 can print the value of double(pi) to arbitrarily many digits, and glibc now calculates pi() accurately, so we can use this code and some hand adding to calculate pi to over 30 digits of accuracy!

double pi = 3.14159265358979323846;

printf(“%.35f\n%.35f\n”,pi,sin(pi));3.14159265358979311599796346854418516

0.00000000000000012246467991473532072

You can also see this trick in action with a calculator. Just put it in radians mode, try these calculations, and note how the input plus the result add up to pi. Nerdiest bar trick ever:

pi =3.1415926535…

sin(3.14)

=0.001592652

sin(3.1415)

=0.000092654

sin(3.141502050)

=0.000090603

The point is, that sin(float(pi)) is actually calculating pi-float(pi), which means that it is classic catastrophic cancellation. We should expect an absolute error (from zero) of up to about 3.14*FLT_EPSILON/2, and in fact we get a bit less than that.

Know what you’re doing

There is no silver bullet. You have to choose wisely.

- If you are comparing against zero, then relative epsilons and ULPs based comparisons are usually meaningless. You’ll need to use an absolute epsilon, whose value might be some small multiple of FLT_EPSILON and the inputs to your calculation. Maybe.

- If you are comparing against a non-zero number then relative epsilons or ULPs based comparisons are probably what you want. You’ll probably want some small multiple of FLT_EPSILON for your relative epsilon, or some small number of ULPs. An absolute epsilon could be used if you knew exactly what number you were comparing against.

- If you are comparing two arbitrary numbers that could be zero or non-zero then you need the kitchen sink. Good luck and God speed.

Above all you need to understand what you are calculating, how stable the algorithms are, and what you should do if the error is larger than expected. Floating-point math can be stunningly accurate but you also need to understand what it is that you are actually calculating. If you want to learn more about algorithm stability you should read up on condition numbers and consider getting Michael L Overton’s excellent book Numerical Computing with IEEE Floating Point Arithmetic. If your code is giving large errors then, rather than increasing your epsilon values, you should try restructuring your code to make it more stable – this can sometimes give stunning results.

The true value of a float

In order to get the results shown above I used the same infinite precision math library I created for Fractal eXtreme to check all the math and to print numbers to high precision. I also wrote some simple code to print the true values of floats and doubles. Next time I’ll share the techniques and code used for this extended precision printing – unlocking one more piece of the floating-point puzzle, and explaining why, depending on how you define it, floats have a decimal precision of anywhere from one to over a hundred digits.

Now that VC++ 2015 can print the exact value of floats and doubles it is trivial for Windows developers to print the exact value represented by a float or double. Being able to see the exact value of numbers such as double(0.1) can help make sense of some tricky floating-point math problems.

Credits

Thanks to Florian Wobbe for his excellent error finding and many insights into this topic.

Other references:

- Josh Haberman’s floating-point summary, part 1

- Floating-point converter tool

- The original floating-point-comparison article, with all of its flaws

I wish you would write about the practical ways to use floats robustly (in games, for example).

This basic stuff has been covered very well already. For example, here :

http://docs.oracle.com/cd/E19957-01/806-3568/ncg_goldberg.html

Don’t Store That in a Float was definitely game targeted. Any particular game specific areas you were interested in?

Very helpful post. I have a question regarding the <= and < you used for relative and absolute comparison in AlmostEqualRelativeAndAbs(). Is the difference in operator intentional or a typo? I know the difference is not much, but I am just curious why there is this difference.

I’m not seeing the difference — I’m seeing <= used everywhere. Using < wouldn't be wrong, it would just slightly change the meaning. For instance, if maxDiff is 0.25 then it's the difference between numbers exactly 0.25 apart being considered equal or not. Same idea for maxRelDiff. I guess documenting the precise meaning would have been good…

Very insightful article. Thank you, Bruce!

Here is an example, where “<=" works but "<" won't.

However, "works" is a question of semantics, as we're entering the domain of denormalized floats, so comparing them is probably meaningless.

The smallest (positive) float with normalized mantissa is a = 2^-126.

The largest float with denormalized mantissa is b = (1 – FLT_EPSILON) * 2^-126.

So we want these adjacent floats (a and b) to pass as almost equal!

diff

= a – b

= 2^-126 – (1 – FLT_EPSILON) * 2^-126

= FLT_EPSILON * 2^-126

= a * FLT_EPSILON.

The expressions "a – b" and "a * FLT_EPSILON" should both evaluate correctly to 2^-149 (smallest positive float).

That means:

diff FALSE

diff TRUE (What we wanted!)

Comparing denormalized floats only relative and not absolute means further trouble (besides what you explained in your article):

a = (1 – FLT_EPSILON) * 2^-126

b = (1 – 2 * FLT_EPSILON) * 2^-126

On one hand:

diff = a – b = FLT_EPSILON * 2^-126 = 2^-149

And on the other hand:

largest * FLT_EPSILON

= a * FLT_EPSILON

= ((1 – FLT_EPSILON) * 2^-126) * FLT_EPSILON

= (1 – FLT_EPSILON) * 2^-149

Strictly mathematical: It is diff = 2^-149 > (1 – FLT_EPSILON) * 2^-149 = largest * FLT_EPSILON.

However, they still pass the test if “(1 – FLT_EPSILON) * 2^-149″ is rounded up to 2^-149, and we use ” 2^-104. Otherwise “smallest * FLT_EPSILON” might be rounded up to “largest * FLT-EPSILON”.

Example:

a = 2^-104

b = (2 – 2 * FLT_EPSILON) * 2^-105. So b is the second-next smaller float to a.

b * FLT_EPSILON

= ((2 – 2 * FLT_EPSILON) * 2^-105) * FLT_EPSILON

= (1.1…101 * 2^-105) * FLT_EPSILON

= 1.1…101 * 2^-128

By “denormalizing” 1.1…101 * 2^-128, we get 0.01…1|01 * 2^-126 (with two additional digits). If the method “round to +infinity” is used, “b * FLT_EPSILON” will be rounded up to 0.1 * 2^-126 = 2^-127 = a * FLT_EPSILON.

2) You changed the formula from “(a – b) / a <= FLT_EPSILON" to "a – b <= a * FLT_EPSILON". What are your reasons? Cleaner code, better performance for multiplication than division…?

WordPress has shredded my post completely. I try to remember and rephrase what I’ve written and post it in three parts. Sorry for spamming.

Very insightful article. Thank you, Bruce!

Here is an example, where ‘<=' works but '<' won't.

However, "works" is a question of semantics, as we're entering the domain of denormalized floats, so comparing them is probably meaningless.

The smallest (positive) float with normalized mantissa is a = 2^-126.

The largest float with denormalized mantissa is b = (1 – FLT_EPSILON) * 2^-126.

So we want these adjacent floats, a and b, to pass as almost equal!

diff

= a – b

= 2^-126 – (1 – FLT_EPSILON) * 2^-126

= FLT_EPSILON * 2^-126

= a * FLT_EPSILON.

The expressions 'a – b' and 'a * FLT_EPSILON' should both evaluate correctly to 2^-149 (smallest positive float). That means:

diff < largest * FLT_EPSILON is FALSE

diff <= largest * FLT_EPSILON is TRUE (What we wanted!)

Comparing denormalized floats only relatively and not absolutely means further trouble (besides what you explained in your article):

a = (1 – FLT_EPSILON) * 2^-126

b = (1 – 2 * FLT_EPSILON) * 2^-126

On one hand:

diff = a – b = FLT_EPSILON * 2^-126 = 2^-149

And on the other hand:

largest * FLT_EPSILON

= a * FLT_EPSILON

= ((1 – FLT_EPSILON) * 2^-126) * FLT_EPSILON

= (1 – FLT_EPSILON) * 2^-149

Strictly mathematical: It is diff = 2^-149 > (1 – FLT_EPSILON) * 2^-149 = largest * FLT_EPSILON.

However, they still pass as equal if (1 – FLT_EPSILON) * 2^-149 is rounded up to 2^-149, and we use ‘<=' for comparison.

I got two questions regarding AlmostEqualRelativeAndAbs:

1) Why don’t you multiply with the smallest (instead of the largest)?

The advantage would be, that the second-next smaller number to a power of 2 would NOT pass as equal.

However, this only works for numbers bigger than 2^-104. Otherwise ‘smallest * FLT_EPSILON’ might be rounded up to ‘largest * FLT-EPSILON’:

a = 2^-104

b = (2 – 2 * FLT_EPSILON) * 2^-105. So b is the second-next smaller float to a.

b * FLT_EPSILON

= ((2 – 2 * FLT_EPSILON) * 2^-105) * FLT_EPSILON

= (1.1…101 * 2^-105) * FLT_EPSILON

= 1.1…101 * 2^-128

By denormalizing 1.1…101 * 2^-128, we get 0.01…1|01 * 2^-126 (with two additional digits). If the method “round to +infinity” is used, ‘b * FLT_EPSILON’ will be rounded up to 0.1 * 2^-126 = 2^-127 = a * FLT_EPSILON.

2) You changed the formula from ‘(a – b) / a <= FLT_EPSILON' to 'a – b <= a * FLT_EPSILON'. What are your reasons? Cleaner code, better performance for multiplication than division…?

1) I think I chose to multiply by the largest instead of largest because I felt it gave a more intuitive meaning to the comparison. It avoids the (harmless but confusing) case where smallest is zero. But, ultimately, I don’t remember. Maybe no reason.

2) I’m sure I rearranged it to avoid division. Division is quite expensive.

Regarding your earlier comments, I still don’t see where I am using ‘<' in AlmostEqualRelativeAndAbs. I see two uses of '<='. Maybe I changed it a while ago? I don't remember doing that, but it's possible.

Thanks, for an enlightening series, Bruce. By chance, is there some content missing before this paragraph?

“If you do an ULPs or relative epsilon comparison to the correct value of zero then this looks pretty bad. It’s a long way from zero. So what’s going on? Is the calculation of sin() really that inaccurate?”

What looks pretty bad? There seems to be some discontinuity here.

Oops.

Yeah, a new heading and about five short paragraphs got nuked. I have to do a manual fix-up stage on the code when I post to make it look nice and apparently I did a careless job.

Thank you very much. It’s all fixed now.

Thank you for taking the time to write your articles Bruce. I recently bumped into a float comparison issue in my own code, and found your insight very valuable.

I’m finding it difficult to decide how many ULPs to allow between floating point numbers in different situations. There isn’t a general rule suggested, so I was wondering if you had a practical relationship to use?

For instance, a 32-bit float accurately represents 6 significant decimal figures (http://www.codeguru.com/forum/printthread.php?t=323835). If you take this into account in your algorithms, would it always be reasonable to use a tolerance of a single ULP?

One ULP will be impractical in some cases.

The theoretically ideal plan is to analyze your algorithms carefully to see how well conditioned they are and generate reasonable error bounds based on that. That requires a lot of time and specialized knowledge. More practical is to try it and see.how much error you get. You can determine the error either by comparing to known-good results, or compare float-precision results to double-precision results. Interval math is another way to determine what your error bounds are.

Most people select an epsilon, and if they find failures they increase it. If you have to increase it a lot, then you probably need to rethink what you are doing.

BTW, how many significant decimal figures a float can represent is much more complex than ‘6’. Six is one possible answer, but so is 7.22. I’ll cover that in my next post.

Thank you for your reply Bruce. Looking forward to your next post!

“floats have a decimal precision of anywhere from one to over a hundred digits”

You must have 2^-149 in mind, the smallest float: it has 105 significant digits. (The smallest double, 2^-1074, has 751 significant digits.) By the way, you can print all those digits with gcc, and some other languages (http://www.exploringbinary.com/print-precision-of-dyadic-fractions-varies-by-language/). (Is that what your “simple code to print the true values of floats and doubles” is?)

Nice series of articles.

2^-149 is one of the numbers I have in mind. There are actually floats that require a few more digits than that, but that is the interesting range. Thanks for the link — I’ll take a look at that. I’ll be sharing code that does the actual printing, for instructive purposes and for those using platforms that can’t print with full precision.

Floats with more digits than 2^-149? I guess I’ll have to wait until your next article to see what they are 🙂

Of course, I see now: 0.99999988079071044921875 x 2^-126 has 112 significant digits, as you show in your latest article. Thanks for clearing that up.

An other floating curiosity that I read in “Write Portable Code”:

If the store operation is not optimized away, the two following functions may not return the same binary value if add is popped into a double:

float add (float a, float b){

return a+b;

}

float add2(float a, floatb){

float tmp = a+b;

return b;

}

Because FPU typically carry higher precison than registers (x87=80bits).

Yes, this issue, and variants on it, can be quite problematic. I’ll be covering some variants on this issue in the future.

The basic problem is that the x87 FPU doesn’t have an instruction to round a number to a particular precision. This can only be done by storing the number to memory, which is relatively expensive.

One correction of sorts: while the x87 registers are 80 bits, there is a rounding mode and by default it is set to double precision (64-bits). Therefore the mantissa is rounded to double precision after each operation. This doesn’t affect this particular problem, but it is an important distinction in other cases. And, sometimes the precision mode is set to 32 bits, in which case this problem disappears.

Actually, it isn’t. With a precision mode set to less than 80 bits, x87 calculations *may* produce less precision, or they may not. The “precision mode” is effectively a lower bound on resulting precision, the real precision being implementation defined.

Typically nearly all operations always produce full 80-bit precision result (regardless of precision mode), exceptions being only a few slow operations that are able to save time by cutting precision to the set mode. Divisions, square roots and transcendental ops – that’s all.

It is easy to experimentally determine that the Intel x87 FPU optimizes divide and square root when the precision is lowered (they are faster) whereas the performance of add/subtract/multiply is unchanged by the precision settings.

It is also easy to experimentally determine that the Intel x87 FPU rounds the mantissa of the result to the requested precision for all operations, even when the performance is unchanged. The code below tests this for addition:

double g_input1 = 16777216.0;

double g_input2 = 1.125;

void TestPrecision()

{

unsigned int currentState;

_controlfp_s(¤tState, _PC_24, MCW_PC);

double result1 = g_input1 + g_input2;

_controlfp_s(¤tState, _PC_53, MCW_PC);

double result2 = g_input1 + g_input2;

printf(“%.17g\n%.17g\n”, result1, result2);

}

Compile it for x87 and it produces this result, with result1 rounded to a 24-bit mantissa:

16777218

16777217.125

QED

#comment-13171 was not posted by keldoelykke. My account might have been compromised. Not sure. The avatar was wrong too.

Aha… user ‘me’ is just trolling with that user name … disregard my previous comment.

I am getting hacker alerts in my mailbox and then I got a notification mail that ‘me’ had made a rather serious comment 🙂

I just dealt with this issue in a new post, available at https://randomascii.wordpress.com/2012/03/21/intermediate-floating-point-precision/

It’s a bit misleading to describe the x87 precision as 80 bits, but I’ll let my article speak for that.

Pingback: Float Precision–From Zero to 100+ Digits | Random ASCII

Pingback: Intermediate Floating-Point Precision | Random ASCII

Pingback: Floating-point complexities | Random ASCII

Pingback: Exceptional Floating Point | Random ASCII

One common variation on the floating-point comparison theme — so common that I didn’t bother mentioning it — is variations on this theme:

float test = 4.1;

if (test == 4.1)

{

printf(“They’re equal”);

}

In most cases this will not — and indeed should not — print “They’re equal”. That’s because ‘test’ is equal to 4.1f (the infinitely precise value 4.1 rounded to float precision) and ‘4.1’ is probably rounded to double precision. Since ‘test’ has been more aggressively rounded, the two values are not equal.

In fact, if they compare as equal that probably means that ‘test’ was not properly rounded. Printing “They’re equal” is a deficiency.

Pingback: That’s Not Normal–the Performance of Odd Floats | Random ASCII

let say:

int main()

{

float f=0.1; //takes 4 bytes using gcc

char *a=(char*)&f;

int *i=(int*)&f;

printf(“%x %x %x %x %x”,*a,*(a+1),*(a+2),*(a+3),*i);

}

output : ffffffcd ffffffcc ffffffcc 3d 3dcccccd

……………………………………………………………………………………

Problem 1: for *a,*(a+1),*(a+2), why i am getting additional ffffff as a is pointer to char which is 1 byte.

Problem 2: for *i, why i am not getting output 3dcccccc?

……………………………………………………………………………………..

expected result:

sign bit: 0

exponent: 0111 1011

mantissa: 100 1100 1100 1100 1100 1100

observed result:

sign bit: 0

exponent: 0111 1011

mantissa: 100 1100 1100 1100 1100 1101

The leading ffffff when you print the bytes is because on your compiler ‘char’ is signed and it is therefore sign extended to an int when passed to print. Use unsigned char to avoid this.

The 1 at the end is because of rounding. The first two digits that are skipped are ’11’ which means that rounding up gives a more accurate result than truncating. Your ‘expected result’ would give an error of about 0.75 ULP, whereas the observed result gives an error of about 0.25 ULP.

Pingback: Doubles are not floats, so don’t compare them | Random ASCII

Reblogged this on Digital Drive and commented:

[Floating Point] Maths is tough!

First I would like to say that this is an excellent article. It is the best I have seen on the subject in a while.

That said, there is one unanswered question. What is the best way to calculate the value that should be used for the maxDiff parameter of the AlmostEqualUlpsAndAbs and AlmostEqualRelativeAndAbs functions. You imply that a value like maxInput * FLT_EPSILON could be used. Of course you later qualify that remark by saying “Unfortunately this means that it is dependent on the algorithm and the inputs” immediately after suggesting the value of maxInput * FLT_EPSILON.

I will refer to an example you gave in your article to explain why I am asking.

float someFloat = 67329.234; // arbitrarily chosen

float nextFloat = 67329.242; // exactly one ULP away from ‘someFloat’

// The following returns true, note that it does not really matter what value

// you choose for the maxDiff parameter.

bool equal = AlmostEqualUlpsAndAbs(someFloat, nextFloat, FLT_EPSILON, 1);

float diff = (nextFloat – someFloat); // .0078125000

// The following simply does not work. Any clues as to how to properly

// calculate this value so the diffEqualZero is set to true instead of false.

float maxDiff = std::max(diff, 0.0f) * FLT_EPSILON;

bool diffEqualZero = AlmostEqualUlpsAndAbs(diff, 0.0f, maxDiff, 1);

Would knowledge of the maximum number of significant digits after the decimal point make it possible to calculate a better value for maxDiff? My thought is that if the floats are obtained from instruments taking measurements and the measurements are known to be accurate to the third decimal place, would the best value for maxDiff be ten times the smallest number with that number of digits after the decimal? For example, the smallest number with three digits after the decimal is 0.001. Ten times that would be 0.01, or the smallest number with 2 digits after the decimal.

This seems to work but I am wondering if this is the best solution in the scenario I have described.

I would appreciate any input you might have to offer on this.

I don’t really have a good answer, I don’t think there is a good cross-domain answer, and I fear that any attempt at an answer would just add more confusion. So I punted like a coward.

However, maxDiff is only needed for numbers that should be at or near zero. It is only needed to handle cases like sin(pi). For relative error maxUlpsDiff is the appropriate parameter to use, and this should suffice for most cases.

So, I would set maxDiff as small as possible. Many sources suggest setting it to FLT_EPSILON, and it may be that for most ranges that is valid. I would start there and see how it works.

I believe that the correct value of maxDiff should be affected only by expected precision of your near-zero calculations. If your inputs are from instruments with known error then you would normally use maxUlpsDiff to handle this — unless you are subtracting them and hoping to get zero, in which case sig-figs is your friend.

Zero is a huge nuisance. Avoid it if possible.

I think FLT_EPSILON is 1.19e-7f, not 1.19e7f.

Good catch. Fixed.

Is this code PD, or some other licence (BSD/GPL)? I’m interested in using your ULP implementation in a GPLd program, cause you know, I’m probably going to spend my next few days catching bugs from getting this wrong…

To avoid confusion and uncertainty I am explicitly using the Apache license for the code and text of this blog post.

Copyright 2021 Bruce Dawson

Licensed under the Apache License, Version 2.0 (the “License”);

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an “AS IS” BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

Also useful to take a look ULP-related functions in Boost.

http://www.boost.org/doc/libs/1_51_0/libs/math/doc/sf_and_dist/html/math_toolkit/utils/next_float.html

Pingback: Game Developer Magazine Floating Point | Random ASCII

Pingback: Top Eight Entertaining Blog Facts for 2012 | Random ASCII

Pingback: Float Precision Revisited: Nine Digit Float Portability | Random ASCII

float has only 23 bit explitcitly for fraction part, that is, 0.00000011920928955078125 , so does it really make sense for comparing sum=1.000000119209290, mul=1.000000000000000, mul2=1.000000014901161 ? There should be no difference in their binary representation, I guess…

Both ‘sum’ and ‘mul’ are valid float values with different binary representations. As the article says, they are adjacent floats. ‘mul2’ requires double precision to distinguish it from 1.0 and this is also mentioned in the article.

The main point of the code snippet was to demonstrate that floating-point calculations are not exact and you may get some inaccuracy. This was purely to set the stage for why it is useful to have a way of seeing if two floats are almost equal.

Sorry for that thoughtless question. What I want to say is, f * 10 would be treated as float, single-precision expression in C, rather than a double type, and there would be no binary difference between mul1 and mul2. I tried it on VS2008 and got the follows:

sum = 1.000000119209290, mul = 1.000000000000000, mul2 = 1.000000000000000.

That is correct that if mul2 is rounded to a float then its error disappears. However there are certainly circumstances where the variation could bubble up to the surface. All it would take would be subtracting 1.0f from the result (prior to storing to a float) and suddenly the difference would be significant. For instance “f * 10 – 1.0f” may be non-zero.

Whether that precise problem matters depends heavily on your compiler and processor because it is ultimately a question of intermediate precision. I devoted an entire separate blog post to that complicated topic:

However the general point was just “floating-point math can accumulate error”. No controversy there I’m sure.

That‘s true. This is really a great article and helps me a lot, thank you for sharing.

When you wrote “f * 10 – 1.0f”, that reminded me of an entirely different ball of yarn you didn’t touch on in this article: FMA instructions. Because they perform the add (or subtract) before rounding, they introduce a similar set of issues to x87’s sometimes-surprising extended precision behavior. Catastrophic cancelation behaves rather differently if you round before or after the subtraction.

FMA is out of scope of this article… I just had a twitch when I saw that expression. I’m coming from the other side of things. I spent a fair bit of time last year working on writing a floating point reference implementation for verifying a processor design. We spent quite a lot of time discussing FMAs, their merits and demerits, and ended up spending many, many hours getting familiar with Dr. Kahan’s impressive body of work. (I also found an obscure rounding error in Knuth’s MMIX-ARITH implementation. Unrelated to FMAs, though.)

Yes, FMA is confounding and is very thematically similar to extended precision. I think the issue you are alluding to is something like:

a * b + c * d

where a == c and b == -d. The mathematical answer is zero, and a non-FMA implementation will calculate that trivially. However with FMA one multiplication will be rounded and the other one will not.

I think that the cool thing about this is that the answer you get from an FMA implementation is an *exact* measure of the rounding error in the multiplication. Therefore this is very similar to sin(double(pi)) whose result is a measure of the rounding error in double(pi).

I also find it interesting that FMA is very difficult to emulate. It seems like double-precision without FMA should be able to emulate single-precision FMA, but in fact it can’t quite do it. Double-precision can do an exact single-precision multiply, but you also need a way to do a double-precision add-and-truncation-to-float as a single step, otherwise you get double rounding. Subtle.

Hey Bruce,

thank you so much for this post. I’ve been programming for several years now and was so embarrassed to only just stumble across this issue while grading an undergrad class. I found this post through the link on your old website and I like how it’s not too much in depth like the other must read article “what every computer scientist should know about floating point arithmetic” and also not too short like the answer to that question in the c-faq website I found. I’ll definitely also browse through the other floating point articles in the future 🙂

Pingback: Floating-Point Determinism | Random ASCII

“an ULPs based comparison of floats with different signs doesn’t even make sense”

I’m a bit unclear why. Aren’t -0 and the smallest positive float very close? Google’s googletest turns the sign-bit binary of floats into a biased integer which I think means it’s quite happy with floats of different signs. Start with AlmostEquals() in http://code.google.com/p/googletest/source/browse/trunk/include/gtest/internal/gtest-internal.h#318 The routines it calls are within the same file.

There are two issues here: does it make sense, and does it work well. I would argue it doesn’t make sense to do an ULPs based comparison of different signed numbers because this is effectively a relative comparison, and a relative comparison is meaningless with different signs. Google may have copied my previous paper, and this can work, but I don’t think it is meaningful. With different signs you should (IMHO) use a fixed epsilon.

There’s also the practical consideration of overflow when you subtract two biased numbers — the difference between 2.0 and -2.0 won’t fit into a 32-bit signed value, so great care must be used.

So, no obvious meaning and problematic, so I don’t recommend it.

So 1.0 less 0.1 ten times shouldn’t be tested against 0 with an ULP-based comparison because the result might be either less or more than +0? http://play.golang.org/p/Ps9h2VYP9H

Correct. If you calculate 1.0 – 0.1 * 10 (or something similar) and the result does not come out to zero then, in terms of ULPs, it will be *enormously* far from zero. The difference will probably be about FLT_EPSILON or DBL_EPSILON which are millions and trillions of ULPs away from zero. This huge difference is representative of the fact that an ULPs based comparison near zero is meaningless, at least in that case.

Great article!

I started to think about this and came up with a solution based on the fact that the quotient of two numbers that are very close is very close to 1, which works for both very small and very large numbers. The comparison then becomes “fabsf(1.0-a/b)<EPSILON".

This has a few issues though, for one the division operation might be expensive. Also, a and b cannot be exactly zero so that have to be handled separately. In the end I come up with this function:

#define EPSILON 2*FLT_EPSILON

/** Returns true if a and b are "equal" */

int equal(const float a, const float b) {

return

(a == b) ||

(a == 0 && fabsf(b)<EPSILON) ||

(b == 0 && fabsf(a)<EPSILON) ||

(fabsf(1.0-(a/b)) < EPSILON);

}

Just adjust EPSILON to the precision needed. With 2*FLT_EPSILON adjacent numbers compare as equal.

One problem, as you say, is that division can be very expensive. And, on some CPUs (Xbox 360 and PS3!) floating-point compares are fairly expensive.

But on to correctness… This is an armchair analysis — I haven’t written test code.

Your equal function is not symmetric — it can give different results for equal(x,y) versus equal(y,x). That’s bad. You need to sort the inputs by absolute magnitude.

If ‘a’ and ‘b’ have different signs then they will compare equally equal as if they had the same signs. That’s bad. The fabs around 1.0-(a/b) causes this and I’m not sure what benefit it gives. Sorting by magnitude (see the previous comment) would be better than that fabs.

Another problem is that I think your code will return true for (0, 1e-20) due to the zero check, but false for (1e-19, 1e-20), even though those numbers are closer. That’s because your zero handling means that some relatively ‘large’ numbers will compare equal to zero, but when compared against non-zero numbers they will be found to be quite noticeably different. Zero is hard.

Comparing floats is hard. Any method that claims to automatically handle zero is probably lying.

Thanks for the quick reply!

I see the points you are making and you’re absolutely correct. I believe the main problem is that I misinterpreted what the epsilon of the floating points really is.

Floating point is indeed really hard.

Great article, Bruce. Was a very fun read.

You’ve clearly shown the importance of having relative (ULPS or epsilon based) and absolute (for near zero) comparisons. Why isn’t this a part of the C++ standard though?

Why do comparison operations on float default to bitwise compares? Why rely on user-defined functions for comparing a well-established data type?

The default comparison isn’t quite bit-wise (zeroes and NaNs are handled specially) but I know what you mean.

Fundamentally this is the concern of the IEEE floating-point standard more than the C++ standard.

That said, the default equality comparison absolutely should be true equality. To do otherwise risks madness. I have a post almost ready that uses exact floating-point comparisons to validate math, thus proving that exact comparisons are valid.

Fuzzy comparisons are very context sensitive. You have to decide what epsilon value to use, for instance. A standard fuzzy comparison would need user-specified epsilons.

And, the fuzziness sometimes has to extend to ”. When, say, creating a BSP using dot products and ‘<' you would like to divide the world into two sets, but arguably what you actually do is divide the world into two definite sets plus a set of points that are so close to the cutting plane that they are ambiguous. I don't see how the floating-point math standard can fix that — although it could offer functions to help with it.

So, standard fuzzy comparison functions would be nice, but the default should remain exact comparisons.

Ah, yes, that’s right – shouldn’t mess with true equality compares.

Scenarios like partitioning can’t really do with the extra leverage it provides, as you pointed out.

But, how about boolean functions like CollisionDetection? I can see AlmostEquals(..) being useful there.

And that makes me curious – in your experience at Valve, how often does AlmostEquals(..) actually get used?

I would expect most collision detection functions to return whether two objects overlap, not whether their locations are equal so AlmostEquals would not be directly relevant. It could be relevant to check, in internal builds, whether there is sufficient precision to make the collision detection meaningful.

I don’t think our games do a lot of floating-point equality comparisons, so I don’t think AlmostEquals is relevant very often.

I’ve been using a ULP-based float compare that fails the catastrophic cancellation test.

If in my Vector class, for example, I’m guarding against a potential division-by-0 (with a debug-only assert) by using this non-bitwise float compare.. which is flawed anyway (for values very close to 0).. would you recommend I do only a bitwise check for 0 in such situations (when guarding against division-by-0)?

Thank you.

I can’t say what you should do without far more information. If you want to avoid getting infinity as a result then you need to check for numbers near zero (dividing by most subnormals will give infinity even though they aren’t zero). There is no magic-bullet correct-advice I’m afraid — it really depends on the meaning of your results and their non-zeroness.

Great article, thank you ! Have you considered publishing this serie of posts as an ebook/PDF guide ?

I can’t think of any advantage to doing that compared to a blog-post.

Great articles, I learned a lot. I’m concerned about the AlmostEqualRelativeAndAbs() though. The user needs to provide two parameters for the precision. The absolute and relative threshold. It’s not user friendly for the user (must give coherent pair) and dangerous in some cases.

Two examples :

maxDiff = 1.4E-40

maxRelDiff = 0.001

Close to zero, the absolute check will return true for cases where the relative one wouldn’t. There is a discontinuity that the user may not be aware of because giving coherent pair is complicated.

__

maxDiff = 1.4E-43

maxRelDiff = 0.001

In this case, the absolute test may fail and ask the relative one to solve a problem it can’t.

I tried to come up with a function taking only one precision parameter, making the usage easier for the user :

#define FLT_SUB_MIN 1.4E-45f

//the input precision is the relativeError inverse.

//A precision of 1000 would tolerate an error of 0.1%

bool AlmostEqualRelative(float A, float B, float precision)

{

//Check the precision desired is in between 1/2^0 and 1/2^22

assert(precision > 1.0f && precision < 4194304.0f);

// Check if the numbers are really close — needed

// when comparing numbers near zero.

float diff = fabs(A – B);

if (diff <= FLT_SUB_MIN * precision)

return true;

A = fabs(A);

B = fabs(B);

float temp = diff * precision;

return (temp <= A || temp <= B);

}

I think it's better for three reason :

-There is only one input parameter. A pseudo-continuity is ensured internally, making the interface easier for the user. What the absolute check and the relative one test are almost two distinct sets of inputs.

-The relative test compare two floats bigger than "diff". In your function, "largest * maxRelDiff" may give a wrong result if both are subnormals. Would the test still succeed ? In my function, "diff * precision* generate a float at least bigger than diff so I got rid of this possible issue.

-In your function you create "largest" out of a condition, then execute an other condition. In my function, everything is combined in one if statement. The OR will return true if almost equal to A without testing B. Knowing this fact, the user could even optimize the usage of this function if he knows which one of A or B if more often the bigger.

Two days ago I was still thinking floats where some magical creatures that you couldn't compare properly. With your articles I think I have a better understanding of the machinery. But it's up to you to validate my theory about the efficiency/user friendliness of my function.

Cheers.

Oooohhh, I did not take the cases around zero into account. So what if I trigger the absolute test only if one of the input is below the achievable precision ?

bool AlmostEqualRelative(float A, float B, float precision)

{

//Check the precision desired is in between 1/2^0 and 1/2^22

assert(precision > 1.0f && precision < 4194304.0f);

A = fabs(A);

B = fabs(B);

//Return true if precision can't be achieved

float minInputValue = FLT_SUB_MIN * precision;

if (B <= minInputValue) //Manage case B subnormal below supported precision

return (A + B) * precision < 1.0f;

if (A <= minInputValue) //Manage case A subnormal below supported precision

return (A + B) * precision < 1.0f;

float temp = fabs(A – B) * precision;

return (temp <= A || temp <= B);

}

It tested this guy for normals and subnormals (crossing between negative and positive) and it worked like a charm.

You are trying to do the impossible.

> Two examples :

> maxDiff = 1.4E-40

> maxRelDiff = 0.001

> Close to zero, the absolute check will return true for cases

> where the relative one wouldn’t.

Yes, that is true. That is the job of maxDiff, to return ‘equality’ when very close to zero. If it doesn’t do that for some values then it is pointless.

This cannot be made simple and easy because it depends on where your numbers are coming from, what their expected ranges are, and what their expected errors are. If you compare sin((float)pi) to zero then the expected relative diff is infinite, and the expected absolute diff is pretty large — larger than FLT_EPSILON. Your function fails in that case, even given a perfectly rounded sin() function. Yes, sin((float)pi) is a case of catastrophic cancellation, but that is often the cause of numbers near zero.

> what if I trigger the absolute test only if one of the input is below the achievable precision

What do you mean by the achievable precision?

Maybe the error in my code is that one shouldn’t try using a generic function to compare against zero. Zero is always a particularly tough case because you have lost all information about what a ‘reasonable’ error might be, so perhaps a special function for that purpose makes sense.

Ultimately the whole comparison problem is very context sensitive, and that is unavoidable.

“Yes, that is true. That is the job of maxDiff, to return ‘equality’ when very close to zero. If it doesn’t do that for some values then it is pointless.”

But how to provide thoughtfully maxDiff and maxRelDiff so that the two of them don’t step on each other territory ?

“Your function fails in that case, even given a perfectly rounded sin() function.”

The second function passes this test, I’m hopeful.

“What do you mean by the achievable precision?”

If I target a precision of 1/1000, I can process a relative comparison for floats higher (after fabs()) than 1000 times the minimal subnormal (1.4E-45). If one of the input is below this threshold, I must switch to an absolute comparison of the difference between the two inputs. (In the second algorithm I do “(A + B)”, it should be “diff” instead).

“Achievable precision” is an awful term, “Input lower threshold” ?

“…so perhaps a special function for that purpose makes sense.”

I hope not. I will test more thoroughly.

I don’t think your second function handles the case of sin((float)pi). That function gives an answer of -0.000000087422776, or about 8e-8, when the ‘expected’ answer is zero. This is about 5e37 greater than the minimal subnormal. And yet, it is almost exactly the correct answer given the input value. This sort of unexpected (without detailed analysis) behavior when dealing with results near zero makes it, I believe, impossible to automatically figure out the right error range.

Relative errors can work nicely, but they fall down near zero, whatever ‘near zero’ means. And there’s the rub. The definition of ‘near zero’ is dependent on what calculations you did to get there, and that cannot possibly be known by a comparison function.

> But how to provide thoughtfully maxDiff and maxRelDiff

> so that the two of them don’t step on each other territory ?

I don’t think this is generally possible.

I think I got the core of the problem.

If I had :

X = 1E30

Y = 1E30 + 1 ULP

Then

A = Y – X

B = 0

A perfect comparison should return true since the subtraction of Y by X is really close to zero considering the order of magnitude of X and Y. Still it will be around 1E21, which is extremely far away from zero, be it an absolute or a relative comparison.

In this case we should have compare X and Y directly instead of comparing the subtraction to zero. So it comes back to your conclusion “Ultimately the whole comparison problem is very context sensitive, and that is unavoidable.”.

Thanks for taking the time to explain.

What about following code? That’s what I deem could be better than any methods discussed in the article.

/*

check if two floating point numbers are almost equal.

\param P specifies precision for comparison of the binary significand (ie. ~= number of significant figures)

*/

template<unsigned int P, typename T> inline bool almostEqual(const T x, const T y) {

int ex, ey;

const T sx=std::frexp(x, &ex);

const T sy=std::frexp(y, &ey);

if (ex!=ey)

return false;

const T p=std::pow(10.0, static_cast<int>(P));

return floor(sx*p) == floor(sy*p);

}

// Edited to HTMLize the cast so that it shows up. Note that ‘P’ is of type unsigned int.

Sorry, that works poorly. The exponent check will pass for 0.5 and 0.9999999, but will fail for 0.9999999 and 1.0, even though the latter two are as close-as-can-be. So already you have rejected some number pairs that should presumably pass.

The second check is insufficiently sensitive to be tuned well. Specifying how many decimal digits of precision to account for works poorly because the amount of decimal precision varies hugely, from about 6-9 digits. Also, because you use an equality test you hit another cusp problem where arbitrarily close numbers can be on opposite sides of the cusp.

Yeah, have realized it shortly after post. The problem with exponent. But you are right that the second problem is even more dire as the sensitivity needs to be tuned more sometimes.

You made several mistakes here? P is described in the comments but not actually defined in the code. What is P? Is it a const T or a const int? Also, static_cast(P) in wrong. I think this should be static_cast(P), but I cannot be certain.

It looks the commenting system is eating angle brackets, in the original code suggestion and in your critiques — which makes them kind of amusing. I assume that you in both cases static_cast<T>(P) was intended — C style casts survive the commenting system better. I had to use HTML encoding to get the angle brackets above to survive. I can fix up the original and the reply as shown above if you’d like — let me know Pavel and Ben.

Reblogged this on «Memento mori» and commented:

Плавающая запятая

Hi,

Great article. Thx.

I too have played around the issues around 0.

The result is https://github.com/KeldOelykke/FailFast/blob/master/Java/FailFast/src/starkcoder/failfast/checks/primitives/floats/IPrimitiveFloatEqualsAlmostCheck.java .

Any thoughts about the formula with the 1 in the denominator? You are way deeper into this topic, so you might have opinions against it.

Here are some tests with various input values: https://github.com/KeldOelykke/FailFast/wiki/isFloatValueEqualsAlmost-&-failFloatValueEqualsAlmost

Any comments are appreciated.

Kind Regards,

Keld Ølykke

It looks like the code implements a relative epsilon only — I couldn’t see an absolute epsilon to handle numbers near zero. That means that this code will necessarily not handle numbers near zero correctly. That’s fine, as long as that limitation is understood.

I also think that a default relative epsilon of 1.001 is too permissive as a default, since it lets through huge amounts of slop. I’d go with something like 1.000001 as a default relative epsilon.

Thx.

I eliminated the absolute epsilon test. It might not be so clear 😦

I did this in a way that corresponds to your AlmostRelativeEquals-function with the following modification:

float largest = (B > A) ? B : A;

if(largest < 1f) largest = 1f; // eliminates absolute epsilon test, good idea or too hacky?

Ok. I will go for a smaller default. Thx

Too hacky. You are implicitly assuming that the absolute epsilon should be FLT_EPSILON and there is no justification for this. Given my favorite test case of sin(theta) the absolute epsilon (necessary for comparing sin(pi*n) to zero) is equal to the epsilon of theta, which may be arbitrarily larger than FLT_EPSILON. For instance:

Compare(sin(theta), 0);

may say that the result is not equal to zero even when theta is as close to pi*n as is possible, as in 314.15926535f. Or consider this:

Compare(sin(3.1415926535f) * .000001, 0)

What absolute epsilon should you use here? I believe the correct answer is 3.14159 * 0.5 * FLT_EPSILON * 0.00001.

In short, you’re injecting a magic number which makes your results range specific. This means that the same calculation done in m/s versus mm/s versus km/s may give different results.

Thx.

I see. I think I need a another method overload that also accepts an absolute epsilon.

If we could turn both numbers into scientific notation* could we not get back to having one epsilon value working on the mantissa* difference?

*(e.g. http://introcs.cs.princeton.edu/java/91float/))

> If we could turn both numbers into scientific notation* could we not get back to having

> one epsilon value working on the mantissa* difference?

> *(e.g. http://introcs.cs.princeton.edu/java/91float/))

Ah no. The absolute epsilon is an expectation of rounding errors that may have occurred the inputs. That can be less or greater than the error we accept between the inputs (if they had no rounding errors). Apples and Pears.

Well, not really. The absolute epsilon is an expectation of reasonable rounding errors in the result that are not proportional the result’s magnitude. Any calculation that does subtraction can hit these. If you do subtraction then the expected error is the sum of the expected errors in the inputs. If you then multiply the result of the subtraction by 1e6 then the expected error is a million times larger

Hi again,

I have been tinkering all week about how to create a clear view about what I want an AlmostEqual-function to do. Here is the result: https://plus.google.com/108327308203548221967/posts/5fapojoArHS

Armed with these 2 functions – High(X) & Low(X) – we calculate them both for input X and see if input Y is within [Low(X);High(X)].

The 2 tweak parameters for the functions are as you have deducted above absolute and relative epsilon.

Any good?

I like the idea of visualizing what the two error terms actually mean. My functions don’t apply them both simultaneously, which means their graph would have a straight line for when the absolute epsilon applies.

I recommend reading about condition numbers as they give a mathematical basis for figuring out how inaccurate an equation can be. Most people don’t bother — they just experiment and see what they can get away with.

Thx.

I made the 2 functions from the following 4 functions: https://plus.google.com/108327308203548221967/posts/iAFkYTeHzVm

I will have a look at condition numbers. Thx.

Pingback: Intel Underestimates Error Bounds by 1.3 quintillion | Random ASCII

The algorithm for nearly equal comparison does not handle correctly infinity cases:

1) AlmostEqualRelativeAndAbs(std::numeric_limits<float>::infinity(), std::numeric_limits<float>::infinity(), 0.0f, 0.0f); // Returns false

2) AlmostEqualRelativeAndAbs(-std::numeric_limits<float>::infinity(), std::numeric_limits<float>::infinity(), 0.0f, 0.1f); // Returns true

Following changes must be done to fix this behavior:

Add equal comparison test to the start of algorithm:

if (A == B) return true;

Add this special case test after A = abs(A); B = abs(B); :

if (A == B && diff == A) return false;

An excellent article, wish I’d read it earlier!

I was looking for the answer to …

If a comparision is made with a floating point constant that is exactly representable in binary IEEE-754 single format – like 8191.875, is the result predicable, in all environents?

In my case the other operand is received over a serial bus in IEEE-754 single format and I only perform the comparison if it is neither an NaN nor an infinity.

I’d like to think my answer is obvious (predicable in all environments) but as “[Floating-point] math is hard.” I’d like to make sure I haven’t missed anything.

I can’t think of any way that the comparison in your scenario could misbehave. Generally speaking if you compare two floats that are equal then (ignoring NaNs) they will compare equal. The only non-standard deviations from this that I am aware of are when optimizing compilers for the x87 FPU fail to round a recent calculation to float precision. That leads to the possibility that FloatFunc(f) != FloatFunc(f) because one result is rounded to float and the other is left at double or extended-double precision.

As long as that doesn’t apply to your situation (and it sounds like it doesn’t) then you’re all good. SSE and other FPUs generally avoid the x87’s odd problems.

Thanks to your serie of articles, I’m finally starting to understand 2-3 things about floating-points.

Now I’m in the process of writing a math library for learning purposes and I’d like to provide a bunch of equality functions, one for each comparison method (absolute, relative, ULP, or a combination of them). And for the principle I’d also like to take care of the long double case.

Depending on the implementation, and if I understood properly, the size of a long double can vary but it’s more likely to be made of 80 or 128 bits. Since there’s no standard integer type to represent a floating point that large—and since I’d like to avoid using any external library—, I’ve been wondering how to compute the ULP difference between two long doubles.

I’ve stumbled upon the function `float_distance` from the boost library as well as a question on StackOverflow, both linked below, but I’m easily overwhelmed by their complexity so I was wondering what were your thoughts on those two implementations and/or if you already had a viable long double-compliant alternative of the technique that you’ve presented in this article?

https://github.com/boostorg/math/blob/master/include/boost/math/special_functions/next.hpp#L285

http://stackoverflow.com/questions/13940316/floating-point-comparison-revisited

Note that I’m using C++11 but I’m not sure if it makes things easier here.

Kind regards,

Christopher.

Small follow-up: I linked to the question on SO thinking that I could turn it around to get the number of ULPs.

After thinking a bit more about it, I guess that it doesn’t make much sense—how could one deduce a correct ULP distance between two numbers by using a constant epsilon value? You’ve explained very well in your article that the epsilon between adjacent floats is nothing but constant.

The `ulps` parameter in his function is actually used against the difference between significands rather than against the difference of the two values to compare as one would expect from the function signature—that is misleading and is a bit of a cheat.

To confirm all this, I modified his function to get it to return an ULP difference, and even thought it’s highly possible that I might have done something terribly wrong in the process, it seems to work fine for the floats in the range [1.0f, 2.0f] (constant epsilon value in that range) while it fails otherwise.

http://ideone.com/HymrHJ

An ULPs function that only works in such a restricted range doesn’t seem very helpful.

I haven’t tried writing an ULPs routine using floating-point math, but it would seem to me that if you divide the two numbers by each other then the numbers of ULPs that the ratio is away from 1.0 is a reasonable estimate of the ULPs between the two numbers — of by a factor of two, at most, I believe.

Then again, at that point you might as well just use a relative method.

If you do want the exact number of ULPs between the numbers then just write a simple high-precision math function. See the HighPrec class that I use and have a link to here as an example:

I don’t have a long-double compliant technique. Since the relative and ULP methods are fundamentally the same (to some extent they are just different ways of thinking about the problem) I would probably go with the relative method.