Denormals, NaNs, and infinities round out the set of standard floating-point values, and these important values can sometimes cause performance problems. The good news is, it’s getting better, and there are diagnostics you can use to watch for problems.

In this post I briefly explain what these special numbers are, why they exist, and what to watch out for.

This article is the last of my series on floating-point. The complete list of articles in the series is:

- 1: Tricks With the Floating-Point Format – an overview of the float format

- 2: Stupid Float Tricks – incrementing the integer representation of floats

- 3: Don’t Store That in a Float – a cautionary tale about time

- 3b: They sure look equal… – special bonus post (not on altdevblogaday)

- 4: Comparing Floating Point Numbers, 2012 Edition – tricky but important

- 5: Float Precision—From Zero to 100+ Digits – what does precision mean, really?

- 5b: C++ 11 std::async for Fast Float Format Finding – special bonus post (not on altdevblogaday) on fast scanning of all floats

- 6: Intermediate Precision – their effect on performance and results

- 7.0000001: Floating-Point Complexities – a lightning tour of all that is weird about floating point

- 8: Exceptional Floating point – using floating-point exceptions to find bugs

- 9: That’s Not Normal–the Performance of Odd Floats

The special float values include:

Infinities

Positive and negative infinity round out the number line and are used to represent overflow and divide-by-zero. There are two of them.

NaNs

NaN stands for Not a Number and these encodings have no numerical value. They can be used to represent uninitialized data, and they are produced by operations that have no meaningful result, like infinity minus infinity or sqrt(-1). There are about sixteen million of them, and they can be signaling or quiet, but there is otherwise usually no meaningful distinction between them.
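As a rough illustration of the two descriptions above, here is a minimal sketch that produces infinities and NaNs at run time. It assumes a 32-bit IEEE float and a compiler that is not in a fast-math mode; the helper names (Divide, IsNaNBySelfCompare, CheckSpecialValues) are made up for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <limits>

// Divides at run time; the operands arrive as parameters rather than literals.
float Divide(float numerator, float denominator) {
    return numerator / denominator;
}

// NaN is the only value that compares unequal to itself.
bool IsNaNBySelfCompare(float value) {
    return value != value;
}

// A float NaN has an all-ones exponent field and a non-zero 23-bit mantissa,
// with either sign bit, which gives 2 * (2^23 - 1) encodings.
constexpr std::uint32_t kNaNEncodings = 2u * ((1u << 23) - 1u);

// Assumes the default floating-point environment (no exceptions enabled).
void CheckSpecialValues() {
    const float inf = std::numeric_limits<float>::infinity();
    const float max = std::numeric_limits<float>::max();
    assert(Divide(1.0f, 0.0f) == inf);     // divide-by-zero
    assert(Divide(-1.0f, 0.0f) == -inf);
    assert(Divide(max, 0.5f) == inf);      // overflow
    assert(std::isnan(inf - inf));         // no meaningful result
    assert(std::isnan(std::sqrt(-1.0f)));  // no meaningful result
    static_assert(kNaNEncodings == 16777214u, "about sixteen million NaNs");
}
```

The self-comparison test is the classic way to detect a NaN without any library support, but it only works when the compiler is honoring IEEE comparison rules.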

Denormals

Most IEEE floating-point numbers are normalized – they have an implied leading one at the beginning of the mantissa. However this doesn’t work for zero so the float format specifies that when the exponent field is all zeroes there is no implied leading one. This also allows for other non-normalized numbers, evenly spread out between the smallest normalized float (FLT_MIN) and zero. There are about sixteen million of them and they can be quite important.
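The encoding rule above can be sketched in a few lines. This assumes a 32-bit IEEE float with an 8-bit exponent field and a 23-bit mantissa field; IsDenormal and DenormalFromMantissa are hypothetical helpers written for this illustration.

```cpp
#include <cassert>
#include <cfloat>
#include <cmath>
#include <cstdint>
#include <cstring>

// True when the exponent field is all zeroes and the mantissa field is not.
bool IsDenormal(float value) {
    std::uint32_t bits;
    static_assert(sizeof bits == sizeof value, "expects a 32-bit float");
    std::memcpy(&bits, &value, sizeof bits);
    const std::uint32_t exponent_field = (bits >> 23) & 0xFFu;
    const std::uint32_t mantissa_field = bits & 0x7FFFFFu;
    return exponent_field == 0u && mantissa_field != 0u;
}

// Builds the positive denormal whose mantissa field is `mantissa_field`.
// With no implied leading one, its value is mantissa_field * 2^-149.
float DenormalFromMantissa(std::uint32_t mantissa_field) {
    assert(mantissa_field != 0u && mantissa_field < (1u << 23));
    float value;
    std::memcpy(&value, &mantissa_field, sizeof value);
    return value;
}

// 2^23 - 1 non-zero mantissa values, times two sign bits.
constexpr std::uint32_t kDenormalEncodings = 2u * ((1u << 23) - 1u);
```

For example, DenormalFromMantissa(1u) is the smallest positive float, and DenormalFromMantissa(0x7FFFFFu) is the largest denormal, one step below FLT_MIN.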

If you start at 1.0 and walk through the floats towards zero then initially the gap between numbers will be 0.5^24, or about 5.96e-8. After stepping through about eight million floats the gap will halve – adjacent floats will be closer together. This cycle repeats about every eight million floats until you reach FLT_MIN. At this point what happens depends on whether denormal numbers are supported.
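The walk described above can be checked directly. This is a small sketch, assuming 32-bit IEEE floats; GapBelow and FloatsBetween are illustrative names, not functions from any library.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstring>

// Distance from `value` to the next float closer to zero.
float GapBelow(float value) {
    assert(value > 0.0f && std::isfinite(value));
    return value - std::nextafter(value, 0.0f);
}

// Number of float steps from `low` up to `high`. For non-negative floats the
// integer representations are ordered the same way as the values.
std::uint32_t FloatsBetween(float low, float high) {
    assert(0.0f <= low && low <= high);
    std::uint32_t low_bits, high_bits;
    std::memcpy(&low_bits, &low, sizeof low_bits);
    std::memcpy(&high_bits, &high, sizeof high_bits);
    return high_bits - low_bits;
}
```

With these helpers, GapBelow(1.0f) is 2^-24, FloatsBetween(0.5f, 1.0f) is 8,388,608, and GapBelow(0.5f) is 2^-25, which is the halving described in the text.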

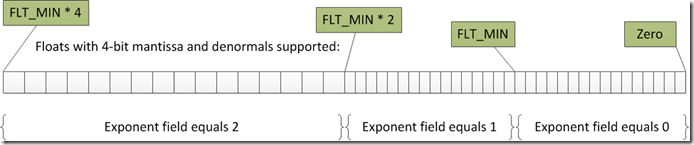

If denormal numbers are supported then the gap does not change. The next eight million numbers have the same gap as the previous eight million numbers, and then zero is reached. It looks something like the diagram below, which is simplified by assuming floats with a four-bit mantissa:

With denormals supported the gap doesn’t get any smaller when you go below FLT_MIN, but at least it doesn’t get larger.

If denormal numbers are not supported then the last gap is the distance from FLT_MIN to zero. That final gap is then about 8 million times larger than the previous gaps, and it defies the expectation of intervals getting smaller as numbers get smaller. In the not-to-scale diagram below you can see what this would look like for floats with a four-bit mantissa. In this case the final gap, between FLT_MIN and zero, is sixteen times larger than the previous gaps. With real floats the discrepancy is much larger:

If we have denormals then the gap is filled, and floats behave sensibly. If we don’t have denormals then the gap is empty and floats behave oddly near zero.
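To put numbers on the two cases, here is a sketch that measures the gaps on either side of FLT_MIN. It assumes denormals are enabled in the current floating-point environment (the usual default); the function names are invented for this example.

```cpp
#include <cassert>
#include <cfloat>
#include <cmath>

// Gap just below FLT_MIN, in the denormal range.
float GapBelowFltMin() {
    return FLT_MIN - std::nextafter(FLT_MIN, 0.0f);
}

// Gap just above FLT_MIN, in the smallest normalized range.
float GapAboveFltMin() {
    return std::nextafter(FLT_MIN, 1.0f) - FLT_MIN;
}

// Without denormals the final gap is FLT_MIN itself, which is
// 2^mantissa_bits times the gap just above it.
unsigned long GapRatioWithoutDenormals(unsigned mantissa_bits) {
    assert(mantissa_bits < 32u);
    return 1ul << mantissa_bits;
}
```

GapRatioWithoutDenormals(4) gives the factor of sixteen from the simplified diagram, and GapRatioWithoutDenormals(23) gives 8,388,608 for real floats.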

The need for denormals

One easy example of when denormals are useful is the code below. Without denormals it is possible for this code to trigger a divide-by-zero exception:

    float GetInverseOfDiff(float a, float b)
    {
        if (a != b)
            return 1.0f / (a - b);
        return 0.0f;
    }

This can happen because only with denormals are we guaranteed that subtracting two floats with different values will give a non-zero result.

To make the above example more concrete let’s imagine that ‘a’ equals FLT_MIN * 1.125 and ‘b’ equals FLT_MIN. These numbers are both normalized floats, but their difference (.125 * FLT_MIN) is a denormal number. If denormals are supported then the result can be represented (exactly, as it turns out) but the result is a denormal that only has twenty-one bits of precision. The result has no implied leading one, and has two leading zeroes. So, even with denormals we are starting to run on reduced precision, which is not great. This is called gradual underflow.

Without denormals the situation is much worse and the result of the subtraction is zero. This can lead to unpredictable results, such as divide-by-zero or other bad results.

Even if denormals are supported it is best to avoid doing a lot of math at this range, because of reduced precision, but without denormals it can be catastrophic.
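The FLT_MIN * 1.125 example can be reproduced with a short sketch. It assumes denormals are enabled and that float math is done at float precision; SubtractAtRunTime, PrecisionBits, and CheckGradualUnderflow are hypothetical helpers, and the volatile variables are only there to force the subtraction to happen at run time.

```cpp
#include <algorithm>
#include <cassert>
#include <cfloat>
#include <cmath>

// Subtracts at run time so the current FPU settings are what get tested.
float SubtractAtRunTime(float a, float b) {
    volatile float va = a;
    volatile float vb = b;
    volatile float difference = va - vb;
    return difference;
}

// Bits of precision available at this magnitude: 24 for normalized floats,
// fewer for denormals because the leading bits of the mantissa are zero.
int PrecisionBits(float value) {
    assert(value != 0.0f && std::isfinite(value));
    return std::min(24, std::ilogb(value) + 150);
}

void CheckGradualUnderflow() {
    const float a = FLT_MIN * 1.125f;
    const float b = FLT_MIN;
    const float difference = SubtractAtRunTime(a, b);
    assert(a != b);
    assert(difference != 0.0f);              // guaranteed only with denormals
    assert(difference == FLT_MIN * 0.125f);  // exact, as the text says
    assert(std::fpclassify(difference) == FP_SUBNORMAL);
    assert(PrecisionBits(difference) == 21);
}
```

If the same check is run with denormals flushed to zero, the second assert is the one expected to fail.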

Performance implications on the x87 FPU

The performance of Intel’s x87 units on NaNs and infinities is pretty bad. Doing floating-point math with the x87 FPU on NaNs or infinities caused a 900 times slowdown on Pentium 4 processors. Yes, the same code would run 900 times slower if passed these special numbers. That’s impressive, and it makes many legitimate uses of NaNs and infinities problematic.

Even today, on a Sandy Bridge processor, the x87 FPU causes a slowdown of about 370 to one on NaNs and infinities. I’ve been told that this is because Intel really doesn’t care about x87 and would like you to not use it. I’m not sure if they realize that the Windows 32-bit ABI actually mandates use of the x87 FPU (for returning values from functions).

The x87 FPU also has some slowdowns related to denormals, typically when loading and storing them.

Historically AMD has handled these special numbers much faster on their x87 FPUs, often with no penalty. However I have not tested this recently.

Performance implications on SSE

Intel handles NaNs and infinities much better on their SSE FPUs than on their x87 FPUs. NaNs and infinities have long been handled at full speed on this floating-point unit. However denormals are still a problem.

On Core 2 processors the worst-case I have measured is a 175 times slowdown, on SSE addition and multiplication.

On Sandy Bridge Intel has fixed this for addition – I was unable to produce any slowdown on ‘addps’ instructions. However SSE multiplication (‘mulps’) on Sandy Bridge has about a 140 cycle penalty if one of the inputs or results is a denormal.

Denormal slowdown – is it a real problem?

For some workloads – especially those with poorly chosen ranges – the performance cost of denormals can be a huge problem. But how do you know? By temporarily turning off denormal support in the SSE and SSE2 FPUs with _controlfp_s:

    #include <float.h>

    // Flush denormals to zero, both operands and results
    _controlfp_s( NULL, _DN_FLUSH, _MCW_DN );
    …
    // Put denormal handling back to normal.
    _controlfp_s( NULL, _DN_SAVE, _MCW_DN );

This code does not affect the x87 FPU which has no flag for suppressing denormals. Note that 32-bit x86 code on Windows always uses the x87 FPU for some math, especially with VC++ 2010 and earlier. Therefore, running this test on a 64-bit process may provide more useful results.

If your performance increases noticeably when denormals are flushed to zero then you are inadvertently creating or consuming denormals to an unhealthy degree.
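For compilers without _controlfp_s, a similar experiment can be sketched with the SSE control-register intrinsics instead. This is not the call used above but a swapped-in equivalent: it assumes an x86-64 target and sets both the flush-to-zero and denormals-are-zero bits in MXCSR. ScopedFlushDenormals, HalveAtRunTime, and kCanFlushDenormals are names invented for this example, and on other architectures the class does nothing.

```cpp
#include <cassert>
#include <cfloat>

#if defined(__x86_64__) || defined(_M_X64)
#include <pmmintrin.h>  // _MM_SET_DENORMALS_ZERO_MODE
#include <xmmintrin.h>  // _mm_getcsr, _mm_setcsr, _MM_SET_FLUSH_ZERO_MODE
constexpr bool kCanFlushDenormals = true;
#else
constexpr bool kCanFlushDenormals = false;  // assumption: no-op elsewhere
#endif

// Flushes denormals while in scope, then restores the previous settings.
class ScopedFlushDenormals {
public:
#if defined(__x86_64__) || defined(_M_X64)
    ScopedFlushDenormals() : saved_csr_(_mm_getcsr()) {
        _MM_SET_FLUSH_ZERO_MODE(_MM_FLUSH_ZERO_ON);          // results
        _MM_SET_DENORMALS_ZERO_MODE(_MM_DENORMALS_ZERO_ON);  // operands
    }
    ~ScopedFlushDenormals() { _mm_setcsr(saved_csr_); }
#else
    ScopedFlushDenormals() : saved_csr_(0) {}
#endif
    ScopedFlushDenormals(const ScopedFlushDenormals&) = delete;
    ScopedFlushDenormals& operator=(const ScopedFlushDenormals&) = delete;

private:
    unsigned int saved_csr_;
};

// Multiplies at run time so the current MXCSR settings apply.
float HalveAtRunTime(float value) {
    volatile float input = value;
    volatile float result = input * 0.5f;
    return result;
}

void DemonstrateFlush() {
    assert(HalveAtRunTime(FLT_MIN) != 0.0f);  // denormal result survives
    {
        ScopedFlushDenormals flush;
        assert(!kCanFlushDenormals || HalveAtRunTime(FLT_MIN) == 0.0f);
    }
    assert(HalveAtRunTime(FLT_MIN) != 0.0f);  // settings restored
}
```

Wrapping a suspect workload in a ScopedFlushDenormals scope and timing it both ways is one way to run the experiment described above. MXCSR is per-thread, so the scope only affects the thread that creates it.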

If you want to find out exactly where you are generating denormals you could try enabling the underflow exception, which triggers whenever one is produced. To do this in a useful way you would need to record a call stack and then continue the calculation, in order to gather statistics about where the majority of the denormals are produced. Alternately you could monitor the underflow bit to find out which functions set it. See Exceptional Floating Point for some thoughts on this, or read this paper.
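Monitoring the underflow bit can be sketched with the standard &lt;cfenv&gt; functions. SetsUnderflowFlag and DivideAtRunTime are hypothetical helpers for this illustration. One caveat worth stating: with the exception left masked, IEEE 754 sets the flag only when the tiny result is also inexact, so a denormal that is computed exactly (such as FLT_MIN / 2) is not reported this way.

```cpp
#include <cassert>
#include <cfenv>
#include <cfloat>

// Runs `work` and reports whether it left the underflow flag set.
template <typename Work>
bool SetsUnderflowFlag(Work work) {
    std::feclearexcept(FE_UNDERFLOW);
    work();
    return std::fetestexcept(FE_UNDERFLOW) != 0;
}

// Divides at run time. The volatile variables stop the compiler from folding
// the division or moving it across the flag accesses (not all compilers
// implement the FENV_ACCESS pragma).
float DivideAtRunTime(float numerator, float denominator) {
    volatile float n = numerator;
    volatile float d = denominator;
    volatile float quotient = n / d;
    return quotient;
}

void CheckUnderflowMonitoring() {
    // FLT_MIN / 3 is tiny and inexact, so the flag is set.
    assert(SetsUnderflowFlag([] { DivideAtRunTime(FLT_MIN, 3.0f); }));
    // 1 / 3 is inexact but nowhere near the denormal range.
    assert(!SetsUnderflowFlag([] { DivideAtRunTime(1.0f, 3.0f); }));
}
```

Calling SetsUnderflowFlag around individual functions or phases of a frame is a cheap way to narrow down where the denormals come from before reaching for exceptions and call stacks.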

Don’t disable denormals

Once you prove that denormals are a performance problem you might be tempted to leave denormals disabled – after all, it’s faster. But if disabling them gives you a speedup, that means you are using denormals a lot, which means that if you disable them you are going to change your results – your math is going to get a lot less accurate. So, while disabling denormals is tempting, you might want to consider investigating to find out why so many of your numbers are so close to zero. Even with denormals in play the accuracy near zero is poor, and you’d be better off staying farther away from zero. You should fix the root cause rather than just addressing the symptoms.

In your other articles you mention denormals a couple of times. This article explaining what they are all about in plain English is really nice.

Update: some very smart physics friends tell me that denormals frequently occur in physics due to the use of equations which iterate until values go to zero. These denormals hurt performance without really adding value. So, physics programmers often find it valuable to disable denormals in order to regain lost performance.

That or add code to terminate such iterations at a more reasonable value? We face these kinds of problems all the time in glibc’s libm.

Signal processing, especially audio processing, is another area where recursive iterations usually lead to denormals. Disabling them is generally the best practical solution too, because it would be extremely expensive to check the signal and disable the feedback loops at every computation stage. Moreover, it’s usually a null signal that triggers the denormal slowness (think of this simple iteration: y[n] = x[n-1] * 0.1 + y[n-1] * 0.9), so curing the processing at one stage could worsen it at a subsequent stage!

But if you can’t disable denormals, you can still inject noise at some points to ensure that the signal stays away from the denormal range…
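The feedback iteration above is easy to simulate. This is a sketch under simplifying assumptions: the input is held constant rather than read from a signal buffer, float math is done at float precision with denormals enabled, and CountDenormalOutputs is a made-up helper. A zero input models silence, and a tiny constant input stands in for injected noise.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Runs y = input * 0.1 + y * 0.9 for `steps` samples and counts how many
// outputs land in the denormal range.
std::size_t CountDenormalOutputs(float initial_y, float input,
                                 std::size_t steps) {
    assert(std::isfinite(initial_y) && std::isfinite(input));
    float y = initial_y;
    std::size_t denormal_outputs = 0;
    for (std::size_t n = 0; n < steps; ++n) {
        y = input * 0.1f + y * 0.9f;
        if (std::fpclassify(y) == FP_SUBNORMAL) {
            ++denormal_outputs;
        }
    }
    return denormal_outputs;
}
```

Starting from 1.0 with a silent input, CountDenormalOutputs(1.0f, 0.0f, 2000) reports that more than half of the samples are denormal, while an input of 1e-20f, far too quiet to hear, keeps every output normalized because the filter settles near the input level instead of decaying toward zero.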

Besides physics, audio synthesis and effects are very prone to denormal issues. For instance, the common low-pass filter algorithms that are crucial to the distinctive sound of analog-style synthesizers will quickly produce performance-bruising denormals if fed a signal followed by silence. Fortunately, for audio applications, the precision afforded by denormals isn’t necessary (FLT_MIN represents signals down around -140dB), so avoiding denormals by enabling flush-to-zero or by injecting arbitrary signal at very low amplitude works fine.

“if (a != b) return 1.0f / (a - b);”

That’s called checking the wrong condition. You should’ve checked “if (a - b != 0.0f)”. Of course, it’s not obviously wrong so it will happen.

But the point is that given IEEE floats (with denormals supported) the two are equivalent. Checking whether a and b are equal is not wrong because if denormals are supported then two finite floats that are not equal will always have a non-zero difference.

But if your denormalized number has only the last bit set, you’ll get garbage results. That’s why it’s often better to treat such infinitely small numbers as zero anyway, as numbers of such small magnitude aren’t trustable.

The context of this thread is the claim that subtraction rather than comparison is better in this context. Can you give an example of when that is true? And can you explain what you are suggesting? It *sounds* like you are suggesting doing the subtraction and then skipping the divide if the result is “too small” but that’s not a complete suggestion without saying what counts as too small.

As long as the result of the subtraction is non-zero the result will, at worst, be a well-defined +/- infinity, which is not garbage at all, and gives excellent results in many cases.
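A quick sketch of that last point, assuming default IEEE behavior with no exceptions enabled; InverseOfNonZero is a hypothetical helper. Dividing by the smallest denormal overflows to a signed infinity rather than producing an arbitrary value.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Reciprocal of a value the caller has already checked to be non-zero.
float InverseOfNonZero(float value) {
    assert(value != 0.0f);
    volatile float divisor = value;  // force the divide to happen at run time
    return 1.0f / divisor;
}
```

With tiny set to std::numeric_limits&lt;float&gt;::denorm_min(), InverseOfNonZero(tiny) is +infinity and InverseOfNonZero(-tiny) is -infinity, while InverseOfNonZero(FLT_MIN) is still an ordinary finite float.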

I’m working on an SSE vector library, and for a vector of three floats (represented by four), there doesn’t seem to be an obvious value to set the “unused” value to.

-/+ 0.0 is most convenient (since only division needs special attention, and divisions are relatively rare), so I’m wondering if the zeroes count as denormalized numbers for the purposes of performance?

On the other hand, some value (like a default 1.0, and allowing operations to change it) can easily produce denormalized numbers.

I considered qNaN, but it seems that qNaN-qNaN is treated as 0 by MSVC (http://stackoverflow.com/questions/32195949/why-is-nan-nan-0-0). Since infinity – infinity is NaN, that’s out too.

qNaN is risky because it could be handled slowly by some processors, and it prevents you from enabling exceptions to find bugs. I’d go with zero – zero is definitely not counted as denormalized.

That stackoverflow article actually discusses Intel C++ 15 (not MSVC) producing zeroes from NaNs. But what is actually happening is that with /fp:fast they aren’t handling NaNs correctly and zeroes *may* be produced. Don’t count on it. For SSE vector math the handling of NaNs is mostly up to you anyway – the processor will handle them correctly if you let it.

That StackOverflow post is actually mine (I said MSVC erroneously, and indeed the effect is due to compiler optimization). The main reason to prefer NaN is that it is (almost) impossible to generate denormalized numbers (except by such compiler effects), while it is relatively easy with any constant. Didn’t you say above that NaNs are “handled at full speed” on SSE?

Yep, that is what my tests showed. That will probably continue to be the case, but no guarantees. The point about not generating denormals is a good one, and NaN is the ‘right’ number for an uninitialized value. I just think it’s a shame to then lose the ability to enable all of the floating-point exceptions, as discussed in https://randomascii.wordpress.com/2012/04/21/exceptional-floating-point/.

Is there no way to force enable floating point exceptions? Or is NaN itself a floating point exception, so an exception would be thrown every time?

If you enable illegal-operation floating-point exceptions then every operation on a NaN will trigger an exception. This is normally desirable – it’s a significant part of the benefit of enabling that exception. It can, for instance, let you detect usage of “uninitialized” floats (if you initialized them to a NaN). But it is incompatible with having the unused float in a float4 be set to NaN. Choices must be made.

FWIW I did go with qNaN in the last channel. There’s not really an option for me; unless I regularly (and carefully, tediously, and consistently) reinitialized it, I’d eventually get qNaNs anyway. I handle checks with asserts instead.

There is nothing really complicated in fast denormals support, this is proven with Berkeley Softfloat library and analogs. The algorithms are simple, straight and fast. If some implementation has problems with it, the only reason is leisure.

About AMD: I have rechecked time to calculate (1e-312 + -1e-314) on FX-8150 (Kubuntu14.04/x86_64, GCC 4.8). FPU spends the same time as for normals (~1ns/iteration, including cycle branch and saving to memory). SSE fails (188ns/iteration). Softfloat uses 21ns/iteration.

The interesting fact is that 1 + 1e-308 spends 1ns/iteration, but 1e-308 + 1 spends 143ns/iteration! So, SSE addition in FX-8150 isn’t commutative in the sense of latency. ;( I can do more testing if you ask.
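For anyone who wants to repeat this kind of measurement, here is a rough timing sketch. It is not the program used for the numbers above; NanosecondsPerAdd is a made-up helper, and like those numbers its result includes loop overhead and the stores to memory.

```cpp
#include <cassert>
#include <chrono>

// Average time for one double-precision addition, measured over many
// iterations. The volatile variables keep the compiler from hoisting or
// removing the addition.
double NanosecondsPerAdd(double a, double b, int iterations) {
    assert(iterations > 0);
    volatile double left = a;
    volatile double right = b;
    volatile double sink = 0.0;
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < iterations; ++i) {
        sink = left + right;
    }
    const auto stop = std::chrono::steady_clock::now();
    (void)sink;
    const std::chrono::duration<double, std::nano> elapsed = stop - start;
    return elapsed.count() / iterations;
}
```

Comparing NanosecondsPerAdd(1.0, 1e-308, n) against NanosecondsPerAdd(1e-308, 1.0, n) for a large n would show whether a given processor has the operand-order asymmetry described here. Results depend heavily on the CPU and on compiler flags, so treat any single run with suspicion.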

I wrote a little program to test this phenomenon for myself, covering single and extended precision (with analogous constants rather than identical ones) as well as double. I ran it on a number of machines I have to hand: Intel, AMD, ARM and PowerPC. I didn’t test any softfloat implementations, and I haven’t yet added tests for infinities, NaNs, or anything other than addition.

The best-behaved machines in my stable are an AMD A10-7850K (using Steamroller cores, which are a little newer than your Bulldozer), a PowerBook G4 (Motorola 7447A), and a Raspberry Pi 2 (ARM Cortex-A7), each of which exhibited essentially identical times for all cases tested. In the case of the AMD machine, this remained true whether I compiled for an i387 FPU or for SSE.

The PowerPC appeared to require one extra clock cycle to handle denormal results, resulting in a very small penalty (0.67ns) for that case only – entirely reasonable IMHO. Also intriguingly, GCC supports “double double” precision for a 128-bit extended precision on this machine, but this remains incapable of representing the tiny values (chosen to match the double-extended format) that are used in my test. This mode is *not* provided on ARM.

Conversely, my AMD E-450 (using the low-power Bobcat core) exhibited the non-commutative behaviour you observed: tiny-plus-one is about two orders of magnitude slower than one-plus-tiny, but only for single and double precision. Meanwhile tiny-plus-tiny is slower still for single and double, and *one* order slower than normal values for extended. Again, though the numbers are a little different between i387 and SSE modes, the behaviour is identical.

A possible clue to the non-commutativity lies in the particular instructions used: for single and double precision, they are load A, load-and-add B, store. For extended precision, they are instead load A, load B, add, store. (Actually, I can’t tell between A and B from the disassembly, so it could be the other way around.) It could well be that the load-to-use forwarding path doesn’t generate FP metadata in the way that a plain load does, triggering an expensive pipeline flush when a denormal value is subsequently detected. There’s also clearly a large penalty for *producing* a denormal result on Bobcat.

If they weren’t in storage right now, I’d also have run it on K7, K8 and K10 based machines in my collection, as well as a 486 and a Pentium-MMX.

I did run the test on an old Pentium-3 machine; since that was the first CPU to introduce SSE, its design would not have assumed i387 code was deprecated. I assume the i387 unit is identical to that in the Pentium-2, and was in turn inherited from the original Pentium-Pro. Nevertheless, it still exhibits large penalties for handling all denormalised cases, in all precisions, both on SSE (single-precision only, since SSE2 came later) and the i387 FPU. There appear to be separate penalties for using and producing denormal values, each of which is well over 100 cycles, but they are at least commutative.

My old EeePC, which uses an underclocked Celeron M, also shows very bad denormal value handling. This is not overly surprising, given that Intel’s Israeli team started with the Pentium-3 and probably didn’t meddle with anything they didn’t need to. Most likely Core 2’s i387 unit was also inherited this way. However, the Celeron M is *even worse* than the Pentium-3 on this measure.

My early Raspberry Pi, in contrast to the newer Pi 2, also seems to have a lot of trouble with denormal values. A flick through ARM’s TRM for the 1176JZF-S core reveals why: denormal inputs and potentially-denormal results are trapped to “support code”, ie. software emulation, unless flush-to-zero mode is enabled – and the support code is *extremely* slow. The same occurs for NaNs, unless “default NaN” mode is enabled, and several other less-usual cases involving enabled exceptions. Conversely, the Cortex-A7 TRM documents “trapless operation” and full hardware support for denormalised numbers, which is reflected in the Pi 2’s results.

AFAIK the second chart is wrong.

“Exponent field equals 0” is always a valid range:

- Denormal has it keep the pacing to bridge the FLT_MIN-0 gap.

- Normal has it keep the density doubling and covers only half the FLT_MIN-0 gap. Only when the mantissa field is 0 too is it treated as a special value: +/-0.

It is a tradeoff between a gap or consistency/precision.

I think you are incorrect, but since the behavior of a denormal value when denormals are not supported is outside of the IEEE standard I’m not sure I can prove this. However here is my logic:

Let’s say you disable denormals on your FPU, and then load a denormal value. There are three choices for what the FPU can do:

1) flush to zero

2) round up to FLT_MIN

3) interpret it as a value with an exponent one less than the exponent implied by an exponent field of 1

1) is what every chip I have heard discussed does.

2) would be a possible choice, but probably dangerous.

3) would be wacky since that would mean loading in a value that is guaranteed to be not what was intended.

The denormal modes are often described as FTZ for Flush To Zero and I think my chart is correct. If you find an FPU or float emulator that behaves differently then let me know.